Web Science/Part2: Emerging Web Properties/Emerging Structure of the Web


6th week due Dec 2 nd

December 3rd

The Human Factor

Core Propositions of this Lesson

The lesson on Human Factor

We have now learned a lot about the Web:

By learning all this content, do we now know what the Web is? No, not at all! A painter requires tools, such as canvas and colors, to create a painting. Once you know which types of canvas and colors are available, you still do not know what paintings an artist can and will create. Likewise, we now know what tools people have to create the Web, but we have not yet looked at the Web that people have actually been creating.

OK, let's look at the Web. But how should we look at it? As parents, we may want to look at the Web to understand how it can benefit or harm our children. As someone who wants to be entertained, we may want to look at the Web to find amusement. As someone who works with the Web, we may want to understand how the Web helps us to do our work. As Web scientists, we look at the Web as an object of scientific investigation; we want to look at the underlying regularities. Now that we have learned about the technical regularities, we need to understand how the people who create the Web do this and what factors constrain or support them, i.e. how people interact with the Web.

As Web scientists, we do not only want to look, we also want to describe. In order to describe the Web and the people in the Web, we need to model both. A core challenge that we will encounter is that we cannot determine or predict the exact behavior of humans. Human behavior that we observe on the Web is random to quite some extent. That does not mean that it is arbitrary: human behavior is constrained by what humans can do in the Web. And while we need to come up with models of human behavior that include randomness, such models will still lead to insight into human behavior at large. Example questions that we will try to answer by this method of "looking" and "describing" (or modelling) include:

Quiz

Randomness vs Regularities

Core Propositions of this Lesson

The lesson on Randomness vs Regularities

When we ask ourselves how randomness can lead to regularities, it is best to look at an analogy. You may know the following example from your physics classes in high school:

If we assumed that links in the Web were forged in a completely random manner, just like the molecules spread arbitrarily between the two boxes, then the probability of linking from one particular Web page to another particular one should be the same for all pairs. As we will see later, however, this is not the case. Why not?

Indeed, we can model each person acting in the Web, i.e. surfing, creating Web pages, creating links between Web pages, in a behavioristic manner as a random process. We can model each human agent as picking randomly from a set of choices over time. However, when we model all actors in the Web, we must model carefully enough that the probability distributions we observe empirically actually match what we derive from our theoretical model. What does it mean to "model carefully enough"? In our behavioristic random model of human behavior, we must take into consideration that beyond sheer random behavior

How can such models help us in understanding and forming the Web? Let me give another analogy:

Likewise in the Web, we may find regularities within or between Web sites that conform well or badly to the behavior of their users, and that may determine the success of such a site and of a Web ecosystem. Quite often it is impossible to completely predict the emerging behavior of users, but trying to support it may lead to better systems. [Later example: Cattuto and others observed that there is statistically significant information contained in the ordering of tags in Delicious. This result is far from self-evident. Folksonomy systems like BibSonomy do not represent such order and therefore lack the capability to support the user in this respect.]

Let us now first talk about what we mean by a "model" and after that look at three example models of user behavior on the Web.

Task

One metaphor describes the Web as the largest library of all time. Discuss which structures a library has, which of these structures also exist in or for the Web, and which structures are available now that might not have been part of a traditional library (300 words).

Quiz

Distinguishing Descriptive and Predictive Models

Core Propositions of this Lesson

The lesson on Descriptive and Predictive Models

Creating models in disciplines as different as physics, psychology or Web science is an art. The objective is to connect a phenomenon that occurs first with another phenomenon that follows later. The first phenomenon is usually called the independent variable and what follows is called the dependent variable. This naming implies that the later phenomenon is somehow derived from, or correlated with, what was provided as an input. A connection between independent and dependent variables should either allow for making some predictions into the future (if possible), i.e. it should constitute a predictive model; or it should explain the causality of some situation, i.e. it should constitute a descriptive model.

An example of a predictive model is given by HCI research which finds that people from different cultural backgrounds not only prefer different styles of user interfaces, but even work more effectively with different styles of user interfaces (cf. Katharina Reinecke, Abraham Bernstein: Improving performance, perceived usability, and aesthetics with culturally adaptive user interfaces. ACM Trans. Comput.-Hum. Interact. 18(2): 8 (2011)). Finding this connection is very intriguing, even though it does not tell us why the independent variable, i.e. the user interface style, and the dependent variable, i.e. the working effectiveness, are connected. It allows us to predict the future, because if we know the style and the background of the user we can predict with good probability whether their working effectiveness will be higher or lower than in other circumstances.

An example of a descriptive model is given by the double pendulum, where we understand the physics of the mechanical movements exactly and can describe them with mathematical formulas. We can describe how the independent variables, i.e. location and velocity of the pendulum as well as gravity at this location, influence the dependent variables, i.e. subsequent locations and velocities of the pendulum. Still, we cannot predict the position of the double pendulum 10 seconds from now.

Furthermore, we may have descriptive models with explanatory power that also allow us to make predictions into the future. For instance, Newton's laws explain the connection between gravity and velocity and allow us to predict how an apple falls onto the earth.

In Web Science we are interested in these different types of models to improve our understanding of the situations we encounter on the Web, to predict what may happen in the (near) future, but also to understand the cases where our models exhibit behavior for which we will not be able to make meaningful predictions into the future, as is the case for the double pendulum.

Further Reading

New Evidence for the Theory of the Stork

Quiz

A Model for Link Generation (Erdős–Rényi)

Core Propositions of this Lesson

Lesson on a Model for Link Generation (Erdős–Rényi)

Let us look at our first example of a descriptive model in Web science. The problem given here is to predict how authors of Web content create links between Web pages over time. In general, the process of creating links is very complex. An author may:

And the author may iterate through this procedure and work in a myriad of other ways when creating a link. When we want to come up with a model that tries to predict where the author will set a link, we cannot target all of the myriad steps that the author may take. In fact, it would even be methodologically unsound to do so. To be good, a model should be

If we cannot test the model, we will never know whether it is any good. If it comes with many assumptions it will only be applicable in very few situations. And, if it is very complex, it may describe the current set of observations, but it is unlikely to generalize to other situations, i.e. it will have less explanatory power. In order to come up with a model that we can analyze, we hence need to make simplifications:

Now, each model has dependent and independent variables and a way in which the two are related. In the model of preferential attachment, links are added over time. That is, the link that is newly created is a variable that depends on what other documents and links existed before, i.e. the independent variables ("independent" in the sense that they are not captured by the model; if the model were a program function, we would call the independent variables the parameters of this function and the dependent variables the output of this function).

But, hey, didn't we say that it was difficult to observe the Web over time? While it is (relatively) easy to make a snapshot of a sizable part of the Web, it is many times more difficult to know what the Web looked like at different points in time in the past. Well, we can still come up with a meaningful *descriptive model* if we do not target the prediction of new specific links, but only a description of how many links exist between different classes of Web pages. Then our model represents Web pages of all different ages and, if the model is correct, it explains the structure of them all. Let's summarize at this point:

Thus, what remains to be done is:

Let us start with probably the simplest model of all, where we just assume that we have n Web pages (each with a unique URL) and k links between these pages, where k is less than or equal to n*n. We can use a simple notation in which each Web page is described by a number i or j between 1 and n and each link is described by a pair of numbers (i,j), meaning that Web page i links to Web page j. The model foresees that the next link to be created is drawn uniformly at random from all links that do not exist yet, i.e. the probability that a particular link (i,j) that does not exist yet is created next is 1/(n*n-k). This model is also known as the Erdős–Rényi model and many of its properties are described in the corresponding Wikipedia article.

Pseudo code for this model may look like the following. Note that the pseudo code names its inputs differently from the text above (k nodes and l edges) and that it additionally rejects self-links, i.e. pairs with n=m.

    Input: k number of nodes, l number of edges to be created

    Initialize Int[][] edge to 0 in all entries, indicating that there is no edge
    i = 0
    WHILE i < l DO
        n = random(1,k)
        m = random(1,k)
        IF n ≠ m AND edge(n,m) = 0
        THEN
            edge(n,m) = 1
            i = i + 1
        FI
    OD
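The pseudo code can be turned into a short runnable sketch. The following Python version is only one possible reading: the function name `erdos_renyi_edges`, its parameter names and the optional `seed` are assumptions made for illustration, and self-links are excluded as in the pseudo code.

```python
import random


def erdos_renyi_edges(num_nodes, num_edges, seed=None):
    """Draw num_edges distinct directed links (i, j) with i != j uniformly at random."""
    max_edges = num_nodes * (num_nodes - 1)  # self-links are excluded
    if num_edges > max_edges:
        raise ValueError("more edges requested than distinct node pairs exist")
    rng = random.Random(seed)
    edges = set()
    while len(edges) < num_edges:
        i = rng.randint(1, num_nodes)  # randint includes both end points
        j = rng.randint(1, num_nodes)
        if i != j:
            edges.add((i, j))  # adding an existing pair leaves the set unchanged
    return edges


edges = erdos_renyi_edges(num_nodes=30, num_edges=60, seed=42)
print(len(edges))
```

Storing the links in a set replaces the explicit `edge(n,m)` matrix and the retry counter: a rejected or duplicate draw simply does not increase the size of the set.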

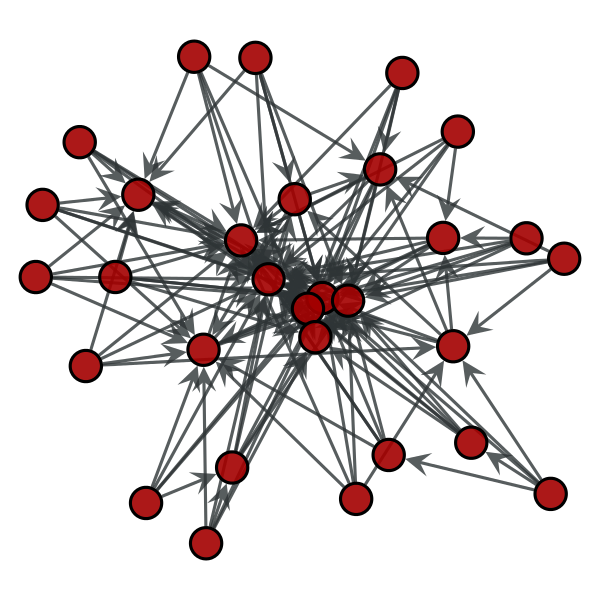

An example structure created looks like: Thus, we now have our first model for describing link generation. What we still do not know is whether this model is a good one. This will be the topic of our next lesson. Quiz[edit | edit source]

Statistical Distributions for the Description of System Properties

Core Propositions of this Lesson

Lesson on Statistical Distributions for the Description of System Properties

How can we now test whether the Erdős–Rényi model is a good model for our problem? If Erdős–Rényi were a predictive model, we would apply a Cranfield-like test setting (also called "gold standard-based evaluation"): in such an evaluation setting, a model is applied to a test dataset for which the correct answers are known (e.g. because human annotators have defined what are correct and incorrect answers or because we have historical data for which the outcome is known). Then one can check how often a prediction is correct and correspondingly derive different measures such as accuracy, precision and recall (and many more). Thus, one may evaluate the validity of a predictive model. In fact, in our lecture on Web Information Retrieval and Machine Learning and Data Mining, such Cranfield-like test settings are the core vehicle to compare the effectiveness of different models.

In the case of the Erdős–Rényi model (and in the case of many other, specifically probabilistic, descriptive models) we find however that

What we may still do is to test whether we observe statistical distributions in the real world that we also find in our model. This is called Statistical Hypothesis Testing. Let us look at a classical distribution in networks, namely the *In-degree distribution*. The in-degree distribution is given by counting how many nodes (here each node is a Web page) have 0 links pointing to them, how many nodes have 1 link pointing to them, 2 links, 3 links and so forth. The result can be given in a table like this

or in a histogram like this:

The idea behind statistical hypothesis testing in our setting is now that, given

one compares the two distributions. This very idea is also depicted in the following figure. Let us consider the meaning of the 4 ovals in detail:
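Separately from the figure, the counting that defines an in-degree distribution can be sketched in a few lines of Python. The function name `in_degree_distribution` and the toy edge list are made up for illustration; nodes are assumed to be numbered from 1 as in the notation of the previous lesson.

```python
from collections import Counter


def in_degree_distribution(num_nodes, edges):
    """Return {in_degree: number of nodes that have this in-degree}."""
    in_degree = Counter(target for _, target in edges)
    # Nodes without incoming links are missing from the Counter, so look them up with default 0.
    return Counter(in_degree.get(node, 0) for node in range(1, num_nodes + 1))


# Hypothetical toy graph with 5 pages; (i, j) means page i links to page j.
toy_edges = [(1, 2), (3, 2), (4, 2), (2, 1), (3, 1), (1, 4)]
dist = in_degree_distribution(5, toy_edges)
for degree in sorted(dist):
    print(degree, dist[degree])

# Sanity check: summing degree * count over all rows gives back the number of edges.
total_edges = sum(degree * count for degree, count in dist.items())
print(total_edges)
```

The same table can be computed once for the empirical data and once for a graph generated by the model, which yields the two distributions to be compared.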

Quiz

0*0+1*1+2*4+3*3+4*6+5*8+6*4+7*5+8*2+9*3+10*0 = 1+8+9+24+40+24+35+16+27 = 184 edges

Different Diagram Types for Statistical Distributions

Core Proposition of this Lesson

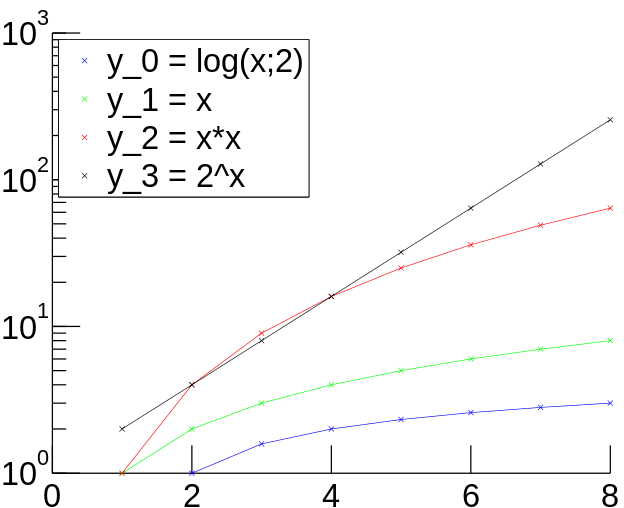

Lesson on Different Diagram Types for Probability Distributions

We have already seen the simplest diagram type, i.e. a histogram of how many nodes with a specific in-degree exist, given observations in the real world and in a model. In such a diagram we may depict absolute frequencies. If we instead want relative frequencies, such that all the different possibilities add up to probability one, we have to normalize our absolute counts by dividing each number by the overall number of events observed. No matter whether we compare absolute or relative numbers, it frequently remains tricky to compare the different distributions visually. In the following you will see different (absolute) plots of different standard functions, ranging from the logarithmic function y=log_2(x), over the linear function y=x and the quadratic function y=x*x, to the exponential function y=2^x, and their inverses.

A simple trick facilitates such comparisons: if we want to distinguish exponential functions from others, we can use a log-plot diagram. For any exponential function it holds that y=b^x, where b is the base. If we plot log y instead of y, we effectively plot (log y)=log(b^x)=x*log(b), i.e. every exponential function appears as a straight line in a log-plot, while other types of functions do not. The base b determines the slope log(b) of the straight line. Compare our functions from above in the following two log-plots:

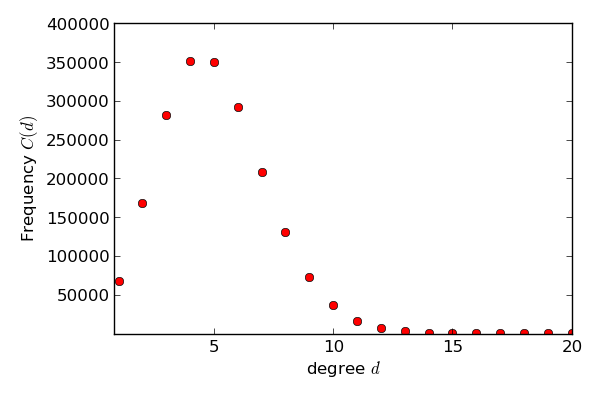

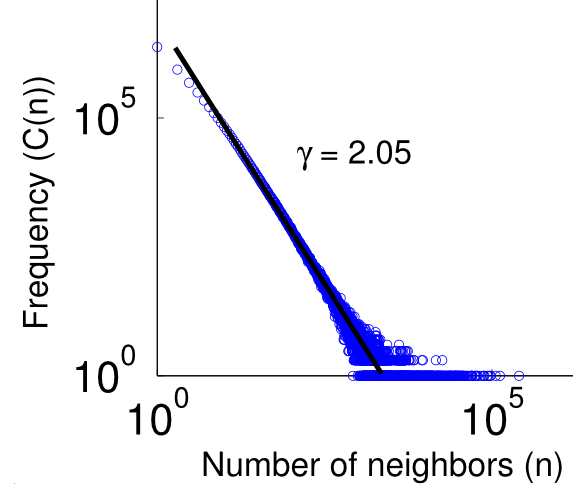
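The straight-line property of the log-plot can also be checked numerically rather than visually. The sketch below is an illustration only (the helper name `log_plot_slopes` and the sample points are assumptions): it computes the slope between neighbouring points of (x, log2 y), which is constant exactly when the points lie on a straight line.

```python
import math

xs = [1, 2, 3, 4, 5, 6]
functions = {
    "exponential 2^x": lambda x: 2.0 ** x,
    "quadratic x^2": lambda x: float(x * x),
    "linear x": lambda x: float(x),
}


def log_plot_slopes(f, xs):
    """Slopes between neighbouring points of the curve (x, log2 f(x))."""
    ys = [math.log2(f(x)) for x in xs]
    return [(ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i]) for i in range(len(xs) - 1)]


for name, f in functions.items():
    print(name, [round(s, 3) for s in log_plot_slopes(f, xs)])
```

For the exponential function every slope equals log2(2), i.e. the logarithm of the base, while the slopes of the linear and quadratic functions shrink as x grows.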

If we want to distinguish polynomial functions (or inverse polynomial functions), we can use a log-log-plot. Consider a power function y=x^k. In a log-log-plot, we change the value we depict in the direction of the y-axis, but in addition we also change the plotting of values on the x-axis. This implies that instead of a pair of values (z,z^k) we plot the pair (log z, log(z^k))=(log z, k*log(z)). Writing x=log z for the position on the x-axis, the plotted pair is (x,y)=(log z, k*log(z))=(x,k*x). This means that in a log-log-plot all such power functions (including linear functions) are depicted as straight lines with slope k, while other functions, such as exponentials or logarithms, are not straight lines. Compare our functions from above in the following two log-log-plots:

We see now that by visual inspection alone we can already determine several interesting functions and their coefficients.

Example

Have a look at the following two graphs. This graph consists of 30 nodes and is a random graph as described above. This graph also consists of 30 nodes, but it follows the rules of preferential attachment. Can you spot the difference?

Let us assume we had a much bigger random graph with 2 million nodes. Drawing the graph is pointless, but we can still plot the frequencies of the node degrees. We might get the following picture:

But is this what the Web looks like? Let us look at the English Wikipedia, take all articles as nodes and links between articles as edges, and plot the degree distribution. We obtain the following picture:

We see that the graphics don't look alike. But maybe that is due to the fact that we used a log-log scale, so let us plot the random graph distribution on a log-log scale:

Still the graphs don't look alike. So we can already see that the Web is not just a random graph but contains more structure. Let us have a look at the plot of a graph that is generated using preferential attachment:

The graph is generated with 2 million nodes. Each node has 70 out-edges. The generation model is preferential attachment. It has been plotted on a log-log scale. It looks much more like the distribution of our Wikipedia network.

Hence, what we observe about in-degree distributions of Web pages is that they follow a power law distribution, while Erdős–Rényi produces a Poisson distribution.

Quiz
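The contrast between a power law and a Poisson distribution on a log-log scale can be illustrated without plotting. The following sketch uses made-up parameters (an exponent of 2.1 and a Poisson mean of 5 are assumptions, not values measured on the Web or on Wikipedia) and hypothetical helper names.

```python
import math


def loglog_slope(points):
    """Least-squares slope of log(y) against log(x) for points with x, y > 0."""
    lx = [math.log(x) for x, _ in points]
    ly = [math.log(y) for _, y in points]
    mean_x = sum(lx) / len(lx)
    mean_y = sum(ly) / len(ly)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    var = sum((a - mean_x) ** 2 for a in lx)
    return cov / var


def local_slopes(points):
    """Slope between each pair of neighbouring points in log-log space."""
    return [
        (math.log(y2) - math.log(y1)) / (math.log(x2) - math.log(x1))
        for (x1, y1), (x2, y2) in zip(points, points[1:])
    ]


degrees = range(1, 51)
# Power law with an assumed exponent of 2.1: frequency proportional to degree^(-2.1).
power_law = [(d, 1000.0 * d ** -2.1) for d in degrees]
# Poisson probabilities with an assumed mean of 5.
poisson = [(d, math.exp(-5) * 5 ** d / math.factorial(d)) for d in degrees]

print(round(loglog_slope(power_law), 3))  # recovers the exponent as a negative slope
print(min(local_slopes(poisson)), max(local_slopes(poisson)))
```

The power law has the same slope everywhere, whereas the local slopes of the Poisson distribution change sign and become steeper and steeper, so it cannot appear as a straight line.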

Lesson on Some Useful Statistical Distributions and Quality Measures

The audience may note that "looking the same" is not a mathematical proposition. In statistics, mathematicians have created machinery to answer the following question: Given

how probable is it that the empirical observation was produced by the kind of die assumed by the model? Instead of rolling a die, we may have a more complicated model, and the empirical observations may be more complex, such as in our comparison of empirically observed in-degree distributions and in-degree distributions produced by a model, e.g. the Erdős–Rényi model. While it would be nice to go into all the details of this comparison now, this would require more time than we have in this introduction to Web science. For those interested: more content in this direction will be given in the lecture on "Data Science" in the summer term 2014. The outcome of such a consideration is that it is highly unlikely that the Erdős–Rényi model produces an in-degree distribution like the one observed in the Web. Thus, in the next learning unit we will look for another model to describe link generation in the Web.

Further Reading: Statistical Tools

In our lecture on Data Science we will go into more detail on computing statistics and determining the quality of a probabilistic model. In particular, we will look at a whole set of distributions:

And we will look at quality measures such as:
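The dice question from this lesson can be made concrete with Pearson's chi-square statistic. Whether this particular measure is among those covered in the lecture is an assumption; the counts below are invented for illustration, and 11.07 is the standard 5% critical value of the chi-square distribution with 5 degrees of freedom.

```python
def chi_square_statistic(observed, expected):
    """Pearson's chi-square statistic for two equally long lists of counts."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical counts of the faces 1..6 in 120 rolls each.
observed_fair = [18, 22, 21, 19, 20, 20]
observed_loaded = [10, 12, 14, 16, 18, 50]
expected = [120 / 6] * 6  # the model of a fair die predicts 20 rolls per face

CRITICAL_5_PERCENT_DF5 = 11.07  # 6 faces - 1 = 5 degrees of freedom

stat_fair = chi_square_statistic(observed_fair, expected)
stat_loaded = chi_square_statistic(observed_loaded, expected)
for name, stat in [("fair-looking", stat_fair), ("loaded-looking", stat_loaded)]:
    verdict = "reject the fair-die model" if stat > CRITICAL_5_PERCENT_DF5 else "no evidence against the model"
    print(name, round(stat, 2), verdict)
```

The same comparison carries over to in-degree distributions: the observed counts per in-degree take the place of the observed faces, and the counts predicted by a model such as Erdős–Rényi take the place of the fair-die expectation.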

December 5th

Let us summarize what we have achieved so far in this part:

In fact, we can draw a picture like the following: The picture elaborates on what we have already used implicitly:

More specifically, we have in our Web science model now abstracted quite a lot, but then came up with ideas of how we can build and validate models:

What we have not yet seen in our models is the mimicking of other people's behavior, as this was not part of the Erdős–Rényi model. In fact, we will see next that this is exactly what has been missing from the Erdős–Rényi model.

Preferential Attachment as an Improved Model for Link Generation

Core Propositions of this Lesson

Lesson on Preferential Attachment as an Improved Model for Link Generation

The core idea of preferential attachment is that popular nodes attract more links than less popular nodes, i.e.:

Let us consider the issue of Web pages. Given a Web page, i.e. a node n, we formulate that the probability that the next link that is created points at this node n is proportional to the number of links that already point to n, i.e. the in-degree(n):

$$P(n) \propto \text{in-degree}(n)$$

The original model of preferential attachment by Barabási and Albert only considers new nodes that join the network. To make it more easily comparable with the Erdős–Rényi model, we adapt their idea (like others have done) and say that the probability that a link is created from a node n to a node m, i.e. P(n,m), is proportional to each of them being selected:

$$P(n,m) \propto P(n) \cdot P(m)$$

We need a slight adaptation, because the probability of an unlinked node being linked should not be zero. Hence, let us assume that the revised probability for selecting one node is:

$$P(n) = \frac{\text{in-degree}(n) + 1}{Z}$$

Considering that P(n) must be a probability distribution, we need a normalization factor Z such that

$$\sum_{n} P(n) = 1, \quad \text{i.e.} \quad Z = \sum_{n} \left(\text{in-degree}(n) + 1\right)$$

    Int Pick()
    Helper functions: in-degree(n), Z() as defined above; random(1,z) returns an integer between 1 and z

    n = 0, c = 0
    z = Z()
    t = random(1,z)
    WHILE c < t DO
    {   n++
        c = c + in-degree(n) + 1
    }
    RETURN n

    PrefAttachment(k,l)
    Input: k number of nodes, l number of edges to be created

    Initialize Int[][] edge to 0 in all entries, indicating that there is no edge
    i = 0
    WHILE i < l DO
    {   n = Pick()
        m = Pick()
        IF n ≠ m AND edge(n,m) = 0
        {   edge(n,m) = 1
            i++
        }
    }
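A runnable counterpart of the two routines may look as follows. This is a sketch under assumptions: the names `pick` and `pref_attachment`, the dictionary of in-degrees and the `seed` parameter are chosen for illustration, and a node is selected with probability (in-degree + 1) / Z as in the revised selection probability.

```python
import random


def pick(in_degree, rng):
    """Select a node with probability (in_degree + 1) / Z."""
    z = sum(d + 1 for d in in_degree.values())  # normalization factor Z
    t = rng.randint(1, z)
    c = 0
    for node, d in in_degree.items():
        c += d + 1  # running total of the weights seen so far
        if c >= t:
            return node


def pref_attachment(num_nodes, num_edges, seed=None):
    """Create num_edges distinct links; source and target are both drawn with pick()."""
    if num_edges > num_nodes * (num_nodes - 1):
        raise ValueError("more edges requested than distinct node pairs exist")
    rng = random.Random(seed)
    in_degree = {node: 0 for node in range(1, num_nodes + 1)}
    edges = set()
    while len(edges) < num_edges:
        n = pick(in_degree, rng)
        m = pick(in_degree, rng)
        if n != m and (n, m) not in edges:
            edges.add((n, m))
            in_degree[m] += 1  # the target becomes more attractive for later picks
    return edges, in_degree


edges, in_degree = pref_attachment(num_nodes=50, num_edges=200, seed=7)
print(sorted(in_degree.values(), reverse=True)[:5])
```

Recomputing Z and scanning all nodes for every pick keeps the sketch close to the pseudo code; for large graphs one would maintain the cumulative weights incrementally instead.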

References

Albert-László Barabási, Réka Albert. Emergence of Scaling in Random Networks. In: Science, 286 no. 5439, pp. 509-512, DOI: 10.1126/science.286.5439.509. Also available as preprint from http://arxiv.org/abs/cond-mat/9910332

Quizzes

Critical Assessment of Preferential Attachment

Learning Objectives

Lesson on Critical Assessment of Preferential Attachment

We have now seen two models for explaining link creation in the Web: Erdős–Rényi and preferential attachment. While the latter seems to be a better explanation for what is going on, questions arise that concern both the mathematical background and the emergence of power laws in many link creation scenarios, not only in the Web but also, e.g., in citation networks (scientific papers cite older scientific papers; nodes, i.e. new papers, are only added but never destroyed; links, i.e. citation edges, are only added but never destroyed):

Hence, what we can observe here is a fundamental critique of the model that considers assumptions such as

Some of that critique has been nicely formulated by Hans Akkermans, leading to further research and insights, more than we can handle in this lecture. Beyond that, further criteria for critique may be raised (also by Akkermans).

References

Word/Tag production models

Learning Objectives

Lesson on Word/Tag production models

Preferential Attachment OR Word Production (Polya Urn Model)

- There are n balls with different colors in an urn
- In each step:
  - Randomly draw a ball
  - Put it back together with a second ball of the same color
- Fixed number of colors
- Colors are distributed according to a power law

Linear Preferential Attachment OR Word Production with Growing Vocabulary (Simon Model)

- Like the Polya Urn Model. Additionally in each step:
  - Instead of drawing a ball, insert with low probability p a ball with a new color
- Linearly increasing number of colors
- Colors are distributed according to a power law
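Both urn processes are easy to simulate. The sketch below treats colors as integers and uses a single hypothetical function `urn_process`; with `p_new=0` it behaves like the Polya urn, and with a small positive `p_new` it behaves like the Simon model. All names and parameter values are assumptions for illustration.

```python
import random
from collections import Counter


def urn_process(initial_colors, steps, p_new=0.0, seed=None):
    """Simulate the urn and return how many balls of each color it finally contains."""
    rng = random.Random(seed)
    urn = list(initial_colors)
    next_color = max(urn) + 1
    for _ in range(steps):
        if rng.random() < p_new:
            urn.append(next_color)  # Simon model: insert a ball with a brand-new color
            next_color += 1
        else:
            # Draw a ball uniformly, put it back and add a second ball of the same color.
            urn.append(rng.choice(urn))
    return Counter(urn)


polya = urn_process([0, 1, 2], steps=1000, seed=1)
simon = urn_process([0], steps=1000, p_new=0.05, seed=1)
print(len(polya), len(simon))  # number of distinct colors in each urn
print(simon.most_common(3))    # the most frequent colors dominate
```

Because a color is drawn with probability proportional to the number of balls it already has, frequent colors (or words, or tags) become even more frequent, which is the same reinforcement idea as in preferential attachment.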

Further Readings about the Family of Polya Urn Models

Read more about the Polya Urn Models (or Polya Processes) at [1]

The Web as a Medium for Social Capital

We now have (extremely simplistic) models of the Web that describe how content is formed and how links are created, and this is the core of Web content in the Web 1.0: text and hyperlinks. While we can think about how to extend our models to more sophisticated content (media documents, dynamic content), and to some very, very small extent this is done in the literature (much more should be possible), we should sometimes also take a high-level view and consider how people decide to interact with a particular piece of Web content. Considering that Web content is produced by people and for people, it makes sense to consider the interaction of people via the Web in sociological terms as well.

Many disciplines have thought about how a medium is used to interact socially, e.g. philosophy (language games, Wittgenstein), artificial life (language games, Luc Steels et al.), linguistics, media sciences, anthropology, etc. We have selected a theory that has its roots in sociology and management science, i.e. the notion of social capital. In Wikipedia it is stated that "In sociology, social capital is the expected collective or economic benefits derived from the preferential treatment and cooperation between individuals and groups." In the Web we have a knowledge system consisting of documents and links (I abstract here from important dimensions like transactions), and in this knowledge system actors navigate, interact, and grow the knowledge network, and parts of the knowledge network are more successful than others. Social capital is distinguished by Nahapiet and Ghoshal (1998; http://www.jstor.org/stable/259373) into

This can be easily carried over to the Web, where a Web page

Effectively, it is of course the person (or the institution) that has such higher social capital, but the Web page (or Web site) is the medium that carries the content and that leads to the increased benefit for the social actor. We can now try to determine:

Cognitive Social Capital: The Example of Measuring How Relevant a Term is for Representing a Document

Cognitive social capital captures to which extent the expertise to use vocabulary, narratives and other cognitive "tools" in a social group constitutes capital for the individual, because he/she is better able to communicate and share ideas.

When is a word important or relevant? Or: how important is a word for representing a document?

First try: words that occur most often are most important, i.e. we use the frequency of a term (word) in a document.

Problem: what are the most important words now?

According to this account they would be most important, because they are most frequent. So, should we rather go for the least frequent words? This would mean that ad-hoc invented words would be most useful. This cannot be true, because no common speaker of English (and English only) understands a word like "grlp". Obviously neither the most frequent nor the least frequent words are helpful in grouping (the technical term is "clustering") documents of a kind together and separating documents of one kind from documents of another kind. A word that helps to do exactly this should obviously not appear in all documents, but rather in a large, but not too large, set of documents. Hence, one arrives at the idea of the inverse frequency of how often a term appears in a set of documents. The combination of the two then strikes a balance between them. In fact, there is not one formula striking such a balance, but a family of formulas, the most common of which is the following:

$$\text{tf-idf}(t,d) = \text{tf}(t,d) \cdot \log\frac{N}{\text{df}(t)}$$

where N is the number of documents, tf(t,d) is the frequency of term t in document d, and df(t) is the number of documents in which t appears.
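As a small illustration of this weighting, the following Python sketch computes one common member of the formula family, namely the raw term frequency multiplied by log(N / df), where df is the number of documents containing the term. The function name `tf_idf`, the whitespace tokenization and the three toy documents are assumptions made for this example.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document, using tf * log(N / df)."""
    n_docs = len(documents)
    tokenized = [doc.lower().split() for doc in documents]
    # Document frequency: in how many documents does each term occur at least once?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)  # term frequency within this document
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


docs = ["the web is a graph", "the graph has links", "the surfer follows links"]
w = tf_idf(docs)
for term, weight in sorted(w[0].items(), key=lambda item: -item[1]):
    print(term, round(weight, 3))
```

The word "the" occurs in every document and therefore receives weight 0, while "web", which occurs in only one document, receives the highest weight among the words that appear once in the first document.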

References

S. Robertson and H. Zaragoza. The Probabilistic Relevance Framework: BM25 and Beyond. Foundations and Trends in Information Retrieval, Vol. 3, No. 4 (2009), 333–389, DOI: 10.1561/1500000019 [2]

Relational Social Capital: The Example of Meme Spreading

What is a meme? Let's look back at what a gene is and what its basic mechanism is:

A meme is an idea, behavior, or style that spreads from person to person within a culture (cf. Meme in Wikipedia). In order for an idea to spread it needs some replication mechanism. People spread ideas in a culture in different ways, e.g. through gossip, or because people want to warn each other. The individual reason for replication may be selfish, it may be altruistic, etc. As with a gene, replication need not be perfect, and in fact copying mistakes occur.

Hoaxes are memes that spread via email or in social networks because people believe, e.g., the warning contained in a hoax and want to warn their friends, too. By being sent on to everyone, the hoax frequently stays alive over many years, or it reappears in a slightly different shape after some years. The investigation of hoaxes on the Web is an interesting topic.

On Twitter, hashtags have been invented by users in order to mark specific and often complicated keywords in their messages. Quite frequently now, hashtags are considered to be simple memes, and people investigate models that explain how such memes spread in a network like Twitter (people imitating other people's behavior). For this you need to know the basic functions of microblogging platforms like Twitter. The core functions are:

There are now several ways in which information diffusion may happen in the network. Retweeting is one. Another is that messages contain hashtags, but people decide not to retweet the whole message and instead just reuse the hashtag to spread a somewhat different message. The interesting point in analysing either is that one obtains a picture of what content is deemed interesting by actual users (note that there are also many bots active on Twitter that fake the presence of humans for such tasks as spamming, increasing the number of followers, etc.).

Looking back at the original objective of this learning unit: we want to understand relational social capital. That is, we want to understand the judgement that account holders exercise in order to decide whether to disseminate information or not. Such judgement may be based on various criteria, such as spreading the most trustworthy information, spreading the most interesting information, spreading information for selfish reasons (e.g. making yourself look good), etc. While all these are very hard to judge, what Weng et al. suggest with their study is that:

"Economy of attention" was first theorized by H. Simon, Nobel Prize winner, economist and computer scientist. He came up with the notion of "satisficing reasoning", i.e. humans do not aggregate all available evidence, but tend to focus on one or two criteria and make a choice based on the perceived value according to these criteria. For example, when buying products people tend not to value all features of a product, but just the one or two most salient ones. See Figure 1 in L. Weng, A. Flammini, A. Vespignani and F. Menczer.

Relational Social Capital: A Model for Memes Spreading (Information Diffusion)

The breadth of attention of a user

Shannon's entropy measures how many bits are needed in order to write down all the events that are possible in an optimal encoding. To put it in very different words, the same entropy measures how much uncertainty is involved when observing a distribution of events. If there is a lot of uncertainty, the amount of information needed to reflect this is high. If there is little uncertainty, one needs only very little information to represent the distribution of events. The measure considers the probability $p_i$ of an event or a piece of information $i$ to happen:

$$S = -\sum_{i} p_i \log_2 p_i$$

This formula reflects that events that occur all the time, i.e. whose probability is close to 1, do not contribute much to entropy because $\log_2 1 = 0$. Likewise, events that occur only rarely, i.e. whose probability is close to 0, do not contribute much to entropy, because $p \log_2 p$ tends to 0 as $p$ tends to 0. Hence, if two events are possible (e.g. tossing a coin with Head and Tail), but Head occurs with probability 1 and Tail with probability 0, the resulting entropy of this system is 0, reflecting the fact that there is no uncertainty involved. If the two events occur with uniform distribution, i.e. p(Head)=p(Tail)=0.5, then entropy is maximized, S=1, i.e. one needs one full bit to represent which of the two events has happened. This may be generalized to n events: n events with uniform distribution have entropy $\log_2 n$.

We are now interested in a set of events such as memes, where each meme is a hashtag and the probability that a user generates a meme is approximated by counting (and normalizing) how often the user has used that particular hashtag. We may then define the breadth of attention of a user by the same formula for entropy.

User Interests

The interests of a user can be represented by the activities that she has engaged in. If there are k memes that have been tweeted so far and user u has tweeted or retweeted l<k of them, we can represent this by an indicator vector $I_u$ with one entry $\sigma_i$ per meme, where $\sigma_i = 1$ if u has used (tweeted or retweeted) meme i in the past and 0 otherwise.
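To make the breadth-of-attention definition from the entropy discussion concrete, the following is a minimal Python sketch. It assumes a user's history is available as a plain list of hashtag strings; the function name `breadth_of_attention` and the sample hashtags are hypothetical and not taken from Weng et al.

```python
from collections import Counter
from math import log2


def breadth_of_attention(hashtags):
    """Shannon entropy (in bits) of a user's hashtag usage.

    `hashtags` is an assumed input format: one entry per use of a hashtag.
    Probabilities are approximated by normalized usage counts.
    """
    counts = Counter(hashtags)
    total = sum(counts.values())
    if total == 0:
        return 0.0  # no activity observed, so no uncertainty to measure
    entropy = 0.0
    for count in counts.values():
        p = count / total
        entropy -= p * log2(p)
    return entropy


# A user who only ever uses one hashtag has entropy 0 (no uncertainty).
focused = breadth_of_attention(["#mooc", "#mooc", "#mooc"])

# A user who spreads attention evenly over two hashtags needs one full bit.
balanced = breadth_of_attention(["#mooc", "#privacy"])
```

A user spreading attention uniformly over n hashtags reaches the maximum $\log_2 n$, so a larger value indicates broader attention.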

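Likewise, we can model a tweet by the set of memes (i.e. hashtags) it includes, as an indicator vector $T_n$ with one entry $\sigma_{i,n}$ per meme, where $\sigma_{i,n} = 1$ if the tweet contains meme i and 0 otherwise.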
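As an illustration, user interests and tweets can both be encoded as 0/1 vectors over the same list of memes and then compared. The sketch below is a hedged example: the meme list, the helper names `indicator_vector` and `cosine_similarity`, and the choice of cosine similarity (which this lesson only names under its Similarity heading) are assumptions made for illustration.

```python
from math import sqrt


def indicator_vector(all_memes, used_memes):
    """Return a 0/1 vector with one entry per meme in `all_memes`."""
    used = set(used_memes)
    return [1 if meme in used else 0 for meme in all_memes]


def cosine_similarity(x, y):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = sqrt(sum(a * a for a in x))
    norm_y = sqrt(sum(b * b for b in y))
    if norm_x == 0 or norm_y == 0:
        return 0.0  # convention chosen here: empty vectors match nothing
    return dot / (norm_x * norm_y)


# Hypothetical vocabulary of k = 4 memes seen so far.
memes = ["#webscience", "#mooc", "#privacy", "#http"]

# User u has used l = 2 of the k memes.
user_interests = indicator_vector(memes, ["#webscience", "#mooc"])

# A tweet containing two hashtags, one of which the user has used before.
tweet = indicator_vector(memes, ["#mooc", "#privacy"])

# One shared meme out of two on each side gives a similarity of about 0.5.
similarity = cosine_similarity(user_interests, tweet)
```

Because both vectors are indexed by the same meme list, the similarity grows with the number of hashtags the tweet shares with the user's past activity.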

Similarity

Cosine

References

L. Weng, A. Flammini, A. Vespignani and F. Menczer. Competition among memes in a world with limited attention. Sci. Rep., 2(335), 2012. http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3315179/

Structural Social Capital: The Example of Random Surfer Model - The Generative Model

Learning Objectives

Lesson on Random Surfer

When we consider the behavior of a person surfing the Web, the person has a number of choices to make, namely he may:

And maybe you can even come up with some more choices.

Structural Capital: The Example of Random Surfer Model - The Analytical Model

Learning Objectives

Lesson on Random Surfer - The Analytical Model

- Represent the transition probabilities as a matrix.
- This is an ergodic Markov system.
- The solution is given by the first eigenvalue of the corresponding equation.
- The corresponding eigenvector gives the stationary probabilities of the surfer.
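The analytical model above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the lesson's own formulation: the surfer is assumed to follow a random outgoing link with probability `damping` and to jump to a uniformly random page otherwise (which makes the chain ergodic), and the stationary probabilities are approximated by power iteration rather than by an explicit eigenvector solver. The function names, the value 0.85, and the tiny example graph are hypothetical.

```python
def transition_matrix(links, damping=0.85):
    """Build a row-stochastic transition matrix from an adjacency dict.

    `links` maps each page to the list of pages it links to.
    Assumption: with probability `damping` the surfer follows a random
    outgoing link, otherwise the surfer jumps to a uniformly random page.
    """
    pages = sorted(links)
    n = len(pages)
    index = {page: i for i, page in enumerate(pages)}
    matrix = []
    for page in pages:
        outgoing = links[page]
        if outgoing:
            row = [(1.0 - damping) / n] * n
            for target in outgoing:
                row[index[target]] += damping / len(outgoing)
        else:
            # A page without links: the surfer always jumps at random.
            row = [1.0 / n] * n
        matrix.append(row)
    return pages, matrix


def stationary_distribution(matrix, tol=1e-12, max_iter=10000):
    """Approximate the left eigenvector for eigenvalue 1 by power iteration."""
    n = len(matrix)
    pi = [1.0 / n] * n
    for _ in range(max_iter):
        new_pi = [sum(pi[i] * matrix[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new_pi, pi)) < tol:
            return new_pi
        pi = new_pi
    return pi


# Hypothetical three-page Web: A links to B and C, B links to C, C links to A.
pages, P = transition_matrix({"A": ["B", "C"], "B": ["C"], "C": ["A"]})
pi = stationary_distribution(P)
```

The resulting vector `pi` satisfies pi = pi P up to the tolerance, which is the eigenvector condition for eigenvalue 1; each entry is the long-run fraction of time the surfer spends on the corresponding page.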

Further Thoughts on Research Needed

Most research about Web structure focuses on static HTML pages and folksonomies (sometimes with time stamps of data). There is very little research on Web structure analysis that looks at dynamic content. The reason is that very few people understand both dynamic Web content and Web structure analysis and models. If you can come up with a good hypothesis, this could lead to intriguing research.

The structures described up to here started to arise in the very early Web; very many structures, however, came into existence in order to be found. Thus, understanding the search engine ecosystem is important for understanding the Web as it is now.

The following videos of the flipped classroom associated with this topic are available:

You can find more information on wiki commons and also directly download this file


$$I_u := [\ldots \sigma_i \ldots], \text{ where } \sigma_i = 1 \text{ if } u \text{ has retweeted that meme in the past and 0 otherwise}$$

$$T_n := [\ldots \sigma_{i,n} \ldots], \text{ where } \sigma_{i,n} = 1 \text{ if } T_n \text{ contains the meme and 0 otherwise}$$