Limit (mathematics)

| Subject classification: this is a mathematics resource. |

| Educational level: this is a secondary education resource. |

| Educational level: this is a tertiary (university) resource. |

The concept of the limit is the cornerstone of calculus, analysis, and topology. For starters, the limit of a function at a point is, intuitively, the value that the function "approaches" as its argument "approaches" that point. That idea needs to be refined carefully to get a satisfactory definition.

Limit of a Function

Here is an example. Suppose a function is

$$f(x) = \frac{x^2 - x - 6}{x^2 - 2x - 3}$$

What is the limit of f(x) as x approaches 3? This could be written

$$\lim_{x \to 3} f(x)$$

We can't just evaluate f(3), because the numerator and denominator are both zero. But there's a trick here: we can divide (x-3) into both numerator and denominator, getting

$$f(x) = \frac{(x-3)(x+2)}{(x-3)(x+1)} = \frac{x+2}{x+1} \quad (x \neq 3)$$

so the limit is 5/4. (That doesn't actually prove that the limit is 5/4. Once we have defined the limit correctly, we will need a few theorems about limits and continuous functions to establish this result. It is nevertheless true.)

Now try an example that isn't trivial. Let

$$f(x) = x^x$$

This function is well-defined for x>0, using the general definition that involves the exponential and natural logarithm functions, $x^x = e^{x \ln x}$. We can calculate f(x) for various values:

- f(5) = 3125
- f(2) = 4
- f(0.5) = 0.7071
- f(0.3) = 0.6968
- f(0.2) = 0.7248

It got smaller, but now it's getting bigger. What's happening?

- f(0.1) = 0.7943
- f(0.01) = 0.95499
- f(0.0001) = 0.999079
- f(0.000001) = 0.99998618

It looks as though it's approaching 1. Is it? And what does that mean?

f(1 trillionth) = 0.999999999972369
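The values listed here can be reproduced with a short script. This is a minimal sketch in Python, assuming f(x) = x^x as the listed values indicate; the helper name `f` and the list `samples` are choices made for this illustration only.

```python
import math

def f(x):
    """x**x for x > 0, via the general definition exp(x * ln x)."""
    if x <= 0:
        raise ValueError("f is only defined here for x > 0")
    return math.exp(x * math.log(x))

# Sample points marching toward 0 from the right.
samples = [5, 2, 0.5, 0.3, 0.2, 0.1, 0.01, 0.0001, 0.000001, 1e-12]

for x in samples:
    print(f"f({x:g}) = {f(x):.15g}")
```

Printing a table like this only suggests what the limit might be; it proves nothing.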

Remember that f(0) doesn't exist. So we really have to be careful.

We are going to say that the limit of f(x), as x approaches 0, is 1. What that means is this:

We can get f(x) arbitrarily close to 1 if we choose an x sufficiently close to zero. If we want f(x) within one quadrillionth of 1, $x = 10^{-17}$ will do. We never have to set x to zero exactly, and we never have to get f(x) = 1 exactly.

In the general case, for arbitrary functions, we might want to say something like

$$\lim_{x \to X} f(x) = Y$$

For Every Epsilon ...

Stated precisely, given any tolerance ε (by tradition, the letter ε is always used), x being sufficiently close to X will get f(x) within ε of Y. That condition is formally written:

$$|f(x) - Y| < \varepsilon$$

In this example ($f(x) = x^x$, X=0, Y=1), when ε is $10^{-15}$ (one quadrillionth), $x = 10^{-17}$ satisfies the condition.

... There Exists a Delta

The way we formalize the notion of x being very close to X is:

- "There is a number δ (by tradition it's always δ) such that, whenever x is within δ of X, f(x) is within ε of Y".

That is written:

- Whenever $0 < |x - X| < \delta$, $|f(x) - Y| < \varepsilon$

In our example, if ε is $10^{-15}$, δ = $10^{-17}$ works. That is, any $0 < x < 10^{-17}$ will guarantee $|x^x - 1| < 10^{-15}$.

The Full Definition

So here is the full definition:

- $\lim_{x \to X} f(x) = Y$ means

- For every ε > 0, there is a δ > 0 such that, whenever $0 < |x - X| < \delta$, $|f(x) - Y| < \varepsilon$

So the definition is sort of like a bet or a contract—"For any epsilon you can give me, I can come up with a delta."

A few things to note:

- We require ε > 0. Specifying a required tolerance of zero is not allowed. We only have to be able to get f(x) within an arbitrarily close but nonzero tolerance of Y. We don't ever have to get it exactly equal to Y.

- We have 0 < |x-X| < δ, not just |x-X| < δ. That is, we never have to calculate f(X) exactly. f(X) doesn't need to be defined. In the example we are considering, $f(0) = 0^0$ isn't defined.

This definition, and variations of it, are the central point of calculus, analysis, and topology. The phrase "For every ε there is a δ" is ingrained into the consciousness of every mathematics student. This notion of a "bet" could be considered to set the branches of mathematics that follow (calculus, topology, ...) apart from the earlier branches (arithmetic, algebra, geometry, ...). Students who have mastered the notion of "For every ε there is a δ" are ready for higher mathematics.

In our example of $f(x) = x^x$, we haven't actually satisfied the definition of the limit, because f(x) isn't defined for negative x. There are more restrictive notions of "limit from the left" and "limit from the right". We have the limit from the right, written $\lim_{x \to X^+} f(x) = Y$, which means

- For every ε > 0, there is a δ > 0 such that, whenever 0 < x-X < δ, |f(x)-Y| < ε
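The one-sided condition can be spot-checked numerically for the x^x example. The following is a minimal sketch, not a proof: it assumes f(x) = x^x, X = 0, Y = 1, and uses the illustrative values ε = 10^-15 and δ = 10^-17. The helper names `gap_from_one` and `delta_wins` are hypothetical. Because 1 - x^x is far smaller than ordinary floating-point rounding would resolve reliably, the sketch computes it through `math.expm1` rather than subtracting from 1.

```python
import math

def gap_from_one(x):
    """Return 1 - x**x for 0 < x < 1, computed with expm1 so the tiny
    difference is not lost to floating-point rounding."""
    return -math.expm1(x * math.log(x))

def delta_wins(eps, delta, trials=1000):
    """Spot-check the right-hand limit condition: every sampled x with
    0 < x < delta should satisfy |f(x) - 1| < eps. Sampling cannot
    prove the limit; it can only fail to find a counterexample."""
    for k in range(1, trials + 1):
        x = delta * k / (trials + 1)   # strictly between 0 and delta
        if abs(gap_from_one(x)) >= eps:
            return False
    return True

print(delta_wins(eps=1e-15, delta=1e-17))  # candidate delta
print(delta_wins(eps=1e-15, delta=1e-12))  # a delta that is too generous
```

The second call shows the other side of the bet: one trillionth is close to zero, but not close enough for a tolerance of one quadrillionth.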

We still haven't proved that the limit is actually 1. We just gave some accurate calculations strongly suggesting that it is. In fact it is, and the proof requires a few theorems about limits, continuous functions, and the exponential and natural logarithm functions.

The Limit of f(x) is Infinity

With this precise (or, as mathematicians say, rigorous) definition of a limit, we can examine variations that involve "infinity". Remember, infinity is not a number. It is only through the magic of the epsilon-delta formulation that we can make sense of it.

We might say something like "The limit of f(x), as x approaches X, is infinity." What that means is that we replace the

$$|f(x) - Y| < \varepsilon$$

with

$$f(x) > M$$

That is,

- For every M > 0, there is a δ > 0 such that, whenever $0 < |x - X| < \delta$, $f(x) > M$

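Consider the function

$$f(x) = \frac{1}{(x-3)^2}$$

If you graph this, there is an "infinitely high" peak at x=3. We have:

$$\lim_{x \to 3} f(x) = \infty$$

If we set $M = 1{,}000{,}000$, $\delta = 0.001$ will win the bet. In general, $\delta = 1/\sqrt{M}$ works, because $0 < |x-3| < 1/\sqrt{M}$ gives $(x-3)^2 < 1/M$.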
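A bet of this kind can be spot-checked in code. The sketch below assumes, for illustration, the function f(x) = 1/(x-3)^2, which has an infinitely high peak at x = 3, together with the candidate answer δ = 1/√M. The names `delta_for` and `bet_holds` are hypothetical helpers, and checking finitely many sample points is only a sanity check, not a proof.

```python
import math

def f(x):
    # Assumed example function with an infinitely high peak at x = 3.
    return 1.0 / (x - 3.0) ** 2

def delta_for(M):
    # If 0 < |x - 3| < 1/sqrt(M), then (x - 3)**2 < 1/M, so f(x) > M.
    return 1.0 / math.sqrt(M)

def bet_holds(M, trials=1000):
    """Sample x on both sides of 3 with 0 < |x - 3| < delta and
    check that f(x) > M at every sampled point."""
    delta = delta_for(M)
    for k in range(1, trials + 1):
        h = delta * k / (trials + 1)   # 0 < h < delta
        if not (f(3.0 + h) > M and f(3.0 - h) > M):
            return False
    return True

for M in (100.0, 1e6, 1e9):
    print(M, delta_for(M), bet_holds(M))
```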

For a limit of minus infinity, we set M to some huge negative number, so

$$\lim_{x \to X} f(x) = -\infty$$

means

- For every M < 0, there is a δ > 0 such that, whenever $0 < |x - X| < \delta$, $f(x) < M$

The limit of f(x) as x Goes to Infinity

Infinity can also make an appearance in the function's domain, as in "The limit of f(x), as x approaches infinity, is 3." What that means is that we replace the

$$0 < |x - X| < \delta$$

with

$$x > M$$

That is,

- For every ε > 0, there is an M such that, whenever $x > M$, $|f(x) - Y| < \varepsilon$

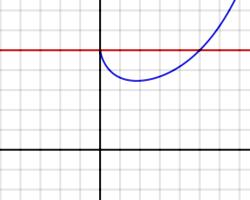

Consider the function

If you graph this, it goes off to a value of 3 toward the right. We have:

$$\lim_{x \to \infty} f(x) = 3$$

If we set , will win the bet.
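To make this kind of bet concrete, here is a minimal sketch that assumes, purely for illustration, the function f(x) = 3 + 1/x. This is a hypothetical stand-in that tends to 3 and is not necessarily the function graphed above. For this assumed function, |f(x) - 3| = 1/x, so M = 1/ε is a natural candidate. The helper name `m_wins` is also an assumption, and sampling finitely many points is a sanity check rather than a proof.

```python
def f(x):
    # Hypothetical example: any function tending to 3 would do.
    return 3.0 + 1.0 / x

def m_wins(eps, M, trials=1000):
    """Spot-check: every sampled x > M should satisfy |f(x) - 3| < eps.
    Assumes M >= 0 so that all sampled x are positive."""
    for k in range(1, trials + 1):
        x = M + k
        if abs(f(x) - 3.0) >= eps:
            return False
    return True

eps = 0.001
print(m_wins(eps, M=1.0 / eps))  # M = 1/eps is the candidate answer
print(m_wins(eps, M=10.0))       # too small an M loses the bet
```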

We can do a similar thing for the limit as x goes to minus infinity.

The limit of f(x) as x Goes to Infinity is Infinity

A function that grows without bound as x increases combines both infinities. We write

$$\lim_{x \to \infty} f(x) = \infty$$

Here we replace both δ and ε:

- For every N > 0, there is an M such that, whenever $x > M$, $f(x) > N$

Limit of a Sequence

An important type of limit is the limit of a sequence of numbers $a_1, a_2, a_3, \ldots$. When we say that this sequence has a limit A, we are essentially using the definition above for x going to infinity, but using n instead of x:

$$\lim_{n \to \infty} a_n = A$$

means that

- For every ε > 0, there is an M such that, whenever $n > M$, $|a_n - A| < \varepsilon$

When a sequence has a limit like that, we say that the sequence converges to that limit.
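The sequence version of the bet can be sketched the same way. The example sequence a_n = n/(n+1), with limit 1, is an assumption chosen for illustration, as is the helper name `tail_within`. Since |a_n - 1| = 1/(n+1), any integer M ≥ 1/ε should work for this sequence. Only finitely many terms are checked, so this is a spot check and not a proof of convergence.

```python
def a(n):
    # Hypothetical example sequence with limit 1.
    return n / (n + 1)

def tail_within(seq, A, eps, M, count=1000):
    """Check |seq(n) - A| < eps for the integers n = M+1, ..., M+count."""
    return all(abs(seq(n) - A) < eps for n in range(M + 1, M + count + 1))

eps = 1e-3
print(tail_within(a, 1.0, eps, M=1000))  # M = 1/eps
print(tail_within(a, 1.0, eps, M=10))    # M chosen too small
```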

Infinite Sums

It is important to distinguish between the limit of the sequence, as defined above, and the much more commonly used limit of the partial sums of the sequence. If we have a sequence $a_1, a_2, a_3, \ldots$, we also have a sequence of "partial sums" $s_1, s_2, s_3, \ldots$, the nth item of which is the sum of the first n items in the original sequence:

$$s_n = \sum_{k=1}^{n} a_k$$

If $s_n$ converges to A, we say that the infinite sum converges to A, written like this:

$$\sum_{k=1}^{\infty} a_k = A$$

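For example, the partial sums of the sequence

$$\frac{1}{0!}, \frac{1}{1!}, \frac{1}{2!}, \frac{1}{3!}, \ldots$$

(where the exclamation point is the factorial operation) converge to the number known as "e":

$$\sum_{n=0}^{\infty} \frac{1}{n!} = e$$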

This is the only sense in which a summation going to infinity is meaningful.
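The difference between a sequence and its partial sums can be seen numerically. The sketch below uses the terms 1/0!, 1/1!, 1/2!, ... and compares the running totals with `math.e`. The generator name `partial_sums` and the cutoff of 20 terms are choices made for this illustration.

```python
import math

def partial_sums(terms):
    """Yield the running totals s_1, s_2, ... of an iterable of terms."""
    total = 0.0
    for t in terms:
        total += t
        yield total

# Terms 1/0!, 1/1!, 1/2!, ...; 20 of them are plenty for double precision.
terms = [1.0 / math.factorial(k) for k in range(20)]
sums = list(partial_sums(terms))

for n in (1, 2, 5, 10, 20):
    # n, the n-th partial sum, and its distance from e
    print(n, sums[n - 1], abs(sums[n - 1] - math.e))

# The terms themselves shrink toward 0 while the partial sums approach e.
print(terms[-1])
```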