Models of Speech Production

Despite vast amounts of research, there is so far no single, all-inclusive model of speech production. This is due in large part to our limited access to the process of speech production, which occurs almost entirely without conscious awareness; you could not explain to someone the steps you took to turn a thought or a feeling into words. Study of the process is further complicated by its speed and accuracy: we are able to produce words at rates as high as 3 words per second (~180 words per minute) while making less than 1 speech error for every thousand words spoken. Though the speech errors that we do produce provide valuable information about the process, they are relatively rare and difficult to induce experimentally.
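To put these figures in perspective, the short calculation below converts the quoted rates into an expected interval between errors. It is only a back-of-the-envelope sketch: it assumes the upper-bound rate of 3 words per second is sustained without pauses and treats 1 error per 1,000 words as a ceiling. The variable names are illustrative.

```python
# Figures quoted in the text (upper bounds, not measurements of any one speaker).
WORDS_PER_SECOND = 3
ERRORS_PER_THOUSAND_WORDS = 1  # "less than 1 speech error for every thousand words"

words_per_minute = WORDS_PER_SECOND * 60

# Number of words spoken, on average, between two errors at the ceiling error rate.
words_between_errors = 1000 / ERRORS_PER_THOUSAND_WORDS

# Time needed to speak that many words at the maximum speaking rate.
minutes_between_errors = words_between_errors / WORDS_PER_SECOND / 60

print(f"{words_per_minute} words per minute")
print(f"more than {minutes_between_errors:.1f} minutes of continuous speech per error")
```

Even at the fastest rate, a researcher would on average have to record more than five minutes of uninterrupted speech to observe a single spontaneous error, which is why errors are hard to collect in quantity.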

A broad range of factors is known to affect speech production, and many of them are not incorporated into most models. Emotion, for example, can modulate word production [1]. During a letter-search task embedded in picture naming, participants had longer reaction times when presented with emotionally charged images than with neutral images. Situational anxiety has also been shown to affect the speech production process: subjects who were asked to speak about anxiety-provoking topics produced more speech errors, such as Freudian slips, and paused mid-sentence more often [2]. It is thought that in emotionally charged situations, or situations that cause anxiety, speakers have a harder time accessing the “right” words to accurately express how they are feeling. These two factors, emotion and anxiety, as well as non-verbal components of language, non-verbal languages (e.g. ASL), and second-language speech production, are not encompassed by traditional models of speech production.

There is no model or set of models that can definitively characterize the production of speech as being entirely holistic (processing a whole phrase at a time) or componential (processing components of a phrase separately). Despite their differences, however, all models seem to have some common features. Firstly, the main question behind all models concerns how linguistic components are retrieved and assembled during continuous speech. Secondly, the models all agree that linguistic information is represented by distinctive units on a hierarchy of levels (e.g. distinctive features such as voicing, phonemes, morphemes, syllables, words and phrases) and that these units are retrieved sequentially, as they build upon one another [2]. Thirdly, all models seem to agree that the semantics and syntax of an utterance must be accessed before its phonology, as the former dictate the latter. All models therefore share the following stages and substages, in this order:

1) Conceptualization: deciding upon the message to be conveyed

2) Sentence formation:

a. Lexicalization: selecting the appropriate words to convey the message

b. Syntactic structuring: selecting the appropriate order and grammatical rules that govern the selected words

3) Articulation: executing the motor movements necessary to properly produce the sound structure of the phrase and its constituent words

The most objective measures of the process of speech production have been obtained by studying the system in reverse, through its breakdown: researchers work backwards from the audible output and analyze the speech errors it contains to make inferences about the process. Looking at how the system breaks down elucidates the independence of the stages of the process. Speech errors occur within one level of organization and never across levels, and are thus excellent markers of the way that the system breaks down. For example, if unit ‘A’ can exchange with ‘B’, then both ‘A’ and ‘B’ are planned at the same stage. Additionally, whatever specific property they share is likely relevant to that level of representation of speech [3]. The fact that speech errors typically occur within and not across clauses is evidence that each clause is produced independently of other clauses. Stages defined by speech errors suggest two polar views: at one end, the view that speech is produced serially, and at the other, the view that the process consists of many interacting nodes that process levels of representation in parallel. Outlined below are the most influential traditional models of speech production, followed by more modern models.

Serial Processing Models

Serial models of speech production present the process as a series of sequential stages or modules, with earlier stages comprising the large units (e.g. sentences and phrases), and later stages comprising their smaller constituent units (e.g. distinctive features like voicing, phonemes, morphemes, syllables). Implicit in these models is that the stages are independent of one another and that there is a unidirectional flow of information. This means that in these models there is no possibility of feedback for the system. The following models are presented in the order that they evolved.

Fromkin's Five Stage Model

Victoria Fromkin was an American linguist who studied speech errors extensively. Based on a critical analysis of her own research on speech errors, she proposed a model of speech production with stages that produced semantics, followed by syntax, and finally by phonological representation as follows:

1) The intended meaning is generated

2) Syntactic structures are formulated

3) Intonation contour and placement of primary stress are determined

4) Word selection

a. Content words inserted into syntactic frame

b. Function words and affixes added

5) Phonemic representations added and Phonological rules applied

In the first stage of this model, the message to be conveyed is generated, and then the syntactic structure is created, including all the associated semantic features. If the structure were not established prior to word selection, this model would not account for the fact that word switches only occur within and not across clauses [4]. This order of processing, which implies that the syntactic structure is available early on, also accounts for the fact that word exchanges only occur between words of the same grammatical function (e.g. verbs will switch with verbs, but never with nouns). In the third stage of this model, the placement of the primary stress within the syntactic framework is determined, but not which syllable it belongs to. At the fourth stage of this model, words are selected, starting with content words. Since the intonation contour of a phrase is maintained despite word exchange errors, as seen in the following example, intonation contours must be selected before the words that fit into them.

1) “The ace of Spades”

2) “the spade of Aces”

- (italicized words indicate the location of the primary stress)

Above, even though the words ace and spade have been reversed from the first phrase to the second, the stress remains in the same position. The order of the sub-stages within the fourth stage of the model, content words selected prior to function words, is also supported by word exchange speech errors, as seen in the following example:

1) “A weekend for maniacs” (/s/)

2) “A maniac for weekends” (/z/)

[5]

The above is an example of phonological accommodation, the process by which errors accommodate themselves to their linguistic environment [4]. In this case the phonological form of the plural affix (/s/ vs. /z/, with the correct voicing) follows the phonological rules associated with the content word to which it ends up attached. Take this second example:

1) “An apple fell from the tree”

2) “A tree fell from the apple”

In this case, the content words were exchanged, and the function words “A” and “An” followed the phonological rules of the content words they ended up next to; that is, the function word does not move with its misplaced content word. Both of these examples can be taken as evidence that content words and function words are not only processed independently, but that content words are selected prior to function words, which explains why the function words can accommodate to the word exchange.
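The ordering argument above can be sketched in code. The following is a minimal illustration, not Fromkin's own formalism: the slot labels `N` and `INDEF`, the function names, and the use of spelling (the first letter) as a stand-in for phonology are all assumptions made for the example.

```python
VOWEL_LETTERS = set("aeiou")


def choose_article(next_word: str) -> str:
    """Pick 'a' or 'an' from the first letter of the following word.

    Spelling stands in for phonology here, which is a simplification.
    """
    return "an" if next_word[0].lower() in VOWEL_LETTERS else "a"


def produce(frame: list[str], content_words: list[str], exchange: bool = False) -> str:
    """Fill a syntactic frame in two passes: content words first, then articles."""
    words = list(content_words)
    if exchange:
        # Simulated word-exchange error: the first and last nouns swap slots.
        words[0], words[-1] = words[-1], words[0]

    # Pass 1 (stage 4a): insert content words into the N slots of the frame.
    remaining = iter(words)
    filled = [next(remaining) if slot == "N" else slot for slot in frame]

    # Pass 2 (stage 4b): spell out indefinite articles only now, so each one
    # agrees with whatever noun actually ended up after it.
    spelled = [
        choose_article(filled[i + 1]) if slot == "INDEF" else slot
        for i, slot in enumerate(filled)
    ]
    return " ".join(spelled)


FRAME = ["INDEF", "N", "fell", "from", "the", "N"]
print(produce(FRAME, ["apple", "tree"]))                 # intended utterance
print(produce(FRAME, ["apple", "tree"], exchange=True))  # utterance with exchange error
```

Because the article is chosen in the second pass, the exchanged output is "a tree fell from the apple" rather than "an tree fell from the apple". Reversing the two passes would predict the unaccommodated form, which is not what speakers produce.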

Fromkin’s model accounts for many speech errors, and claims that semantics are processed prior to syntax, which is processed prior to phonology. These claims have more recently been supported using event-related potentials (ERPs) associated with picture-naming tasks [6]. In this experiment, subjects had to make animal-object discriminations (accessing semantic information) and vowel-consonant discriminations (accessing phonological information), and it was found that conceptual processing precedes phonological processing by about 170 ms [6]. The Fromkin model is limited in that it is strictly top-down and does not involve any kind of feedback system, which means that it does not account for the lexical bias effect, the tendency for phonological speech errors to result in real words more often than in non-words [7].

Garrett's Model

Like Fromkin’s model, Garrett’s model also proposes serial processing of speech production, from semantic to phonological, while stressing that content words are selected prior to and independent of the function words. In his model, Garrett proposes three levels of representation: the Message Level, where the intended message is generated, the Sentence Level, where the sentence is formed, and the Articulatory Level, where motor commands instruct speech organs to produce the appropriate audible output. This model is often referred to as Garrett’s Two-stage model, because the Sentence level is further subdivided into two separate levels or stages: the Functional level, wherein the speaker selects the appropriate words to convey the intended message (Lexicalization) as well as the word order and grammatical rules that govern those words (Syntactic planning); and the Positional level, which is concerned with the sound of the output string and is very pronunciation-oriented.[8]

Fig. 1. Adapted from Garrett (1980).[9]

Garrett justified the two separate stages by, once again, consulting speech errors. More specifically, he notes that meaning-related errors (word switches of content words with the same grammatical function) occur during the functional stage, and form-related errors (morpheme switches and errors of grammatical sound) occur during the positional stage of processing. He also justified the positional stage as being independent of the functional stage because of phonological accommodation. This can be seen in the following example:

1) "A giraffe stepped on an alligator"

2) "An alligator stepped on a giraffe"

In this case, the message (1) was inappropriately articulated as (2): the words giraffe and alligator were exchanged at the functional stage of the model. The fact that the morpheme “a” was replaced with “an” to correctly follow the phonological rules associated with words beginning with vowels can be taken as evidence that morpheme selection occurs after the word and phrase structure have already been selected. This model also accounts for word exchanges that take place across large distances (first/last content-word switches), because the positioning is established after the words are retrieved, while sound exchanges can only occur over short distances because their positioning is established prior to the sounds being specified.

Serial models like the Fromkin and Garrett models fail to account for phrase blends, whereby at least two semantically related phrases are retrieved simultaneously (Example 1 below). Neither model accounts for speech errors in which the word selected in error is phonologically similar to the target word with regard to its initial phoneme (Example 2 below). Finally, both models fail to account for cognitive intrusions like those seen in Freudian slips (Example 3 below).

1) "I love you more than anything" / "I love you very much" becomes "I love you more much"

2) "Thank you for all of your kind remarks, your feedback is very valuable" becomes "Thank you for your kind regards..."

3) "What time is this class over?" becomes "What time is this lunch over?"

The third example is spoken by a student wanting to know when the class before lunch was over. In this case, the student's thoughts about lunch intruded on sentence production; the student really wanted to know what time the class, not the lunch, was over. To account for the types of errors in the above three examples, a model would need to show how two alternative messages can be processed in parallel, not serially.

The Bock and Levelt Model

This model consists of four levels of processing,[10] the first of which is the Message level, where the main idea to be conveyed is generated (Fig. 2). The second, the Functional level, is subdivided into two stages. The first, the Lexical Selection stage, is where the conceptual representation is turned into a lexical representation, as words are selected to express the intended meaning of the desired message. The lexical representation is often termed the lemma, which refers to the syntactic, but not phonological, properties of the word.[10] The second, the Function Assignment stage, is where the syntactic role of each word is assigned. At the third level of the model, the Positional level, the order and inflection of each morphological slot is determined. Finally, at the Phonological Encoding level, sound units and intonation contours are assembled to form lexemes, the embodiment of a word's morphological and phonological properties,[11] which are then sent to the articulatory or output system.[12]

Fig. 2

Each level of this model is functionally distinct from the others, and this distinction is illustrated by the types of speech errors that occur at each level. For example, substitution errors of words within the same semantic ballpark (e.g. substituting yell when the target was shout) occur at the Lexical Selection stage, whereas speech errors involving syntactic function (e.g. verb tense, number, aspect) occur only at the Function Assignment stage (e.g. saying "I likes candy" instead of "I like candy"). At the Positional level, errors of misallocated and stranded inflectional or derivational endings occur. For example, when the sentence “He poured some juice” is accidentally pronounced as “He juiced some pour”, the stem morpheme pour is re-allocated to the end of the sentence, stranding its inflectional ending -ed in the verb position, where it attaches to juice.[12]
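The stranding error can be made concrete with a small sketch. It assumes, purely for illustration, that the Positional level stores each word as a (stem, ending) slot and that an exchange error swaps stems while the endings stay with their slots. The function names and the one-line spelling rule are hypothetical conveniences, not part of the Bock and Levelt model.

```python
def attach(stem: str, ending: str) -> str:
    """Join a stem and its ending, dropping a doubled 'e' (juice + ed -> juiced)."""
    if ending and stem.endswith("e") and ending.startswith("e"):
        return stem + ending[1:]
    return stem + ending


def spell_out(slots):
    """Turn a sequence of (stem, ending) slots into a surface string."""
    return " ".join(attach(stem, ending) for stem, ending in slots)


def exchange_stems(slots, i, j):
    """Swap the stems in slots i and j; each ending stays in its original slot."""
    swapped = list(slots)
    (stem_i, ending_i), (stem_j, ending_j) = slots[i], slots[j]
    swapped[i] = (stem_j, ending_i)
    swapped[j] = (stem_i, ending_j)
    return swapped


intended = [("he", ""), ("pour", "ed"), ("some", ""), ("juice", "")]
print(spell_out(intended))
print(spell_out(exchange_stems(intended, 1, 3)))
```

The second line printed is "he juiced some pour": the ending remains in the verb slot even though its stem has moved, which is the pattern taken as evidence that stems and endings are positioned separately.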

The Bock and Levelt model can account for most speech errors. The addition of a self-monitoring component allows it to account for filtering effects and for accommodation beyond the level of phonemes, and provides a functional explanation for hesitations and pauses (the time it takes for the self-monitoring system to accurately filter and accommodate errors). Although this model incorporates a bidirectional flow of information, it still involves discrete serial processing, in contrast to parallel-processing models, which attempt to account for these errors by means of forward and backward spreading of activation through parallel paths. This brings us to the parallel models of speech production.

Parallel-Processing Models

In these non-modular models, information can flow in any direction and thus the conceptualization level can receive feedback from the sentence and the articulatory level and vice versa (Fig. 3). In these models, input to any level can therefore be convergent information from several different levels and in this way the levels of these models are considered to have interacting activity. Within a phrase, words that are retrieved initially constrain subsequent lexical selection. For example, if the first word in a phrase is a noun the subsequent words cannot be nouns and still produce a grammatically correct sentence. This property necessitates a model whose output can interact with the initial message and demands parallel processing of speech production.

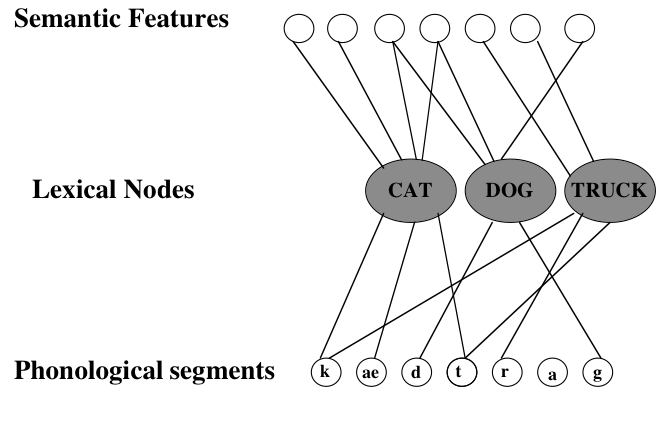

The Dell Model

Dell’s model of spreading activation of lexical access is also commonly referred to as the Connectionist Model of speech production. Dell’s model claims, unlike the serial models of speech production, that speech is produced by a number of connected nodes representing distinct units of speech (e.g. phonemes, morphemes, syllables, concepts) that interact with one another in any direction, from the concept level (Semantic level), to the word level (Lexical selection level) and finally to the sound level (Phonological level) of representation (Fig. 3).[13][14] For example, the node for the phoneme /t/ might be connected in one direction to all morphemes containing that phoneme (e.g. cat, truck, tick, tock, tap), and in another direction to the distinctive features of that phoneme (e.g. its voicing and manner of articulation). In this same fashion, words with similar meaning will be connected to a common semantic node. For example, the words cat and dog, which have several common semantic features (e.g. both are animals, have fur, and can be domesticated), would share a common node within the semantic network (Fig. 3).[15]

Figure 3. Adapted from Dell and O'Seaghdha (1994).[15]

When a word is selected, all of the nodes representing that word's constituent morphemes, phonemes, semantics and syntax are activated, and this activation spreads to adjacent nodes until the most highly activated node is selected for the output string. Since nodes within the same level of representation compete for activation, the wrong node is sometimes selected. For example, a speaker who says “Put your boots on!” means “Put your boots on your feet!”. As a result, the speaker may have activated concept nodes that are semantically related to the target word boots, such as the node representing feet. If the feet node becomes the most highly activated, the result is the incorrect sentence “Put your feet on”. Figure 4 illustrates how this activation could occur for the words boots and skates, which share some semantic properties. Both words would activate units with related semantic properties such as footwear, shoe, feet, snow or winter.

Fig. 4. Based on similar figures in Dell & O’Seaghdha (1994).[15]

Dell's model covers syntactic coding and morphological coding (specification of a word's constituent morphemes) but focuses primarily on phonological encoding (specification of a morpheme's constituent phonemes). In contrast to serial models of speech production, Dell’s model can account for word blends, phrase blends, phonemic slips, and cognitive intrusions. This model explains these errors as the simultaneous activation of nodes that are either semantically or phonetically similar to the target. With phoneme slips, for example, nodes of phonemes that are similar to the target, sharing distinctive features (e.g. voicing or manner of articulation), are the ones that will compete with the target node for activation, while non-similar phoneme nodes will not be activated at all. Dell’s model explains the results of a study by Oppenheim and Dell (2007),[16] who exposed subjects to lists of priming phrases, induced phoneme exchanges, and recorded the nature of the output phrases. They found that subjects produced phoneme exchanges resulting in output that was semantically similar to the priming phrases more frequently than phoneme exchanges resulting in semantically unrelated output.[16] The Dell model accounts for these findings on the basis of semantic priming. Because word selection precedes phonological encoding in the model, it also accounts for tip-of-the-tongue states, in which speakers can often identify the syntactic, and even morphological, properties of the intended word but are still unable to encode its phonology.[17][18]
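The boots/feet intrusion described earlier can be sketched as a single step of spreading activation. This is a toy network under stated assumptions, not Dell's published implementation: the feature nodes, the link weights, and the function names `spread` and `select` are invented for illustration, and only forward spreading from semantic features to words is shown.

```python
# Hypothetical links from semantic feature nodes to word nodes.
# All weights are illustrative and not fitted to any data.
LINKS = {
    "footwear": {"boots": 1.0, "skates": 1.0},
    "worn_on_feet": {"boots": 1.0, "skates": 1.0, "feet": 1.0},
    "walking": {"boots": 1.0, "feet": 1.0},
    "ice": {"skates": 1.0},
    "body_part": {"feet": 1.0},
}


def spread(feature_activation):
    """One forward step: each word sums the weighted input from active features."""
    word_activation = {}
    for feature, level in feature_activation.items():
        for word, weight in LINKS.get(feature, {}).items():
            word_activation[word] = word_activation.get(word, 0.0) + level * weight
    return word_activation


def select(word_activation):
    """The most highly activated word node is selected for the output string."""
    return max(word_activation, key=word_activation.get)


# Intended message: the concept BOOTS activates its semantic features.
message = {"footwear": 1.0, "worn_on_feet": 1.0, "walking": 1.0}
print(spread(message), "->", select(spread(message)))

# Same message while the speaker is also thinking about feet.
intrusion = {**message, "body_part": 1.5}
print(spread(intrusion), "->", select(spread(intrusion)))
```

In the first case boots wins, but skates and feet are partially active because they share features with the target. In the second case the extra activation from the intruding thought pushes feet above boots, yielding the slip "Put your feet on".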

What About The Lexical Bias Effect?

Dell’s model can account for the lexical bias effect, which is the tendency for phonological speech errors to create true words more often than non-words [19]. Dell’s model explains it as backward spreading of activation that will favor true words, for which there are morpheme nodes, over non-words, which do not have morpheme nodes. Dell discovered that the lexical bias effect is stronger at slower speech rates than at higher speech rates and accounts for this by saying that backward spreading takes time, which is not available in fast speech [19]. Garrett argues that this speech-rate effect is due to the time required by the self-monitoring stage of the Garrett model, and that the disparity in the frequency of the effect at low and high speech rates is better explained by the fact that at high speech rates, the speaker's self-monitoring system is unable to monitor output and filter out non-words.[9]
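The time-dependence of the feedback account can be illustrated with a deliberately simple sketch. It does not use Dell's actual activation equations: the toy lexicon, the `feedback` rate, and the idea of counting "steps" as a proxy for the time available at a given speech rate are all assumptions introduced here.

```python
# Toy vocabulary (an assumption): only these strings have a word-level node.
LEXICON = {"barn", "door", "darn", "bore", "dart", "board"}


def error_strength(candidate, steps, base=1.0, feedback=0.2):
    """Activation of a potential error outcome after `steps` rounds of spreading.

    Only real words have a word node that can send activation back down to
    their phonemes, so only they gain strength from feedback.
    """
    activation = base
    for _ in range(steps):
        if candidate in LEXICON:
            activation += feedback * activation
    return activation


def lexical_bias(word_error, nonword_error, steps):
    """Ratio of word-outcome to non-word-outcome activation (1.0 means no bias)."""
    return error_strength(word_error, steps) / error_strength(nonword_error, steps)


# "barn door" -> "darn bore" yields words; "dart board" -> "bart doard" does not.
for steps in (0, 1, 4):
    print(steps, "feedback steps -> bias", round(lexical_bias("darn", "bart", steps), 2))
```

With no time for feedback the two kinds of error are equally strong, and the advantage for word outcomes grows with each additional step. This mirrors the claim that lexical bias is larger in slow speech, although a monitoring account would predict the same direction of effect for a different reason.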

Conclusion

There are many computational models of speech production whose discussion exceeds the scope of this chapter, and many of them focus on the articulation of sounds, words and phrases rather than the “mind to mouth” mystery (Fromkin, 1973) that early psycholinguists attempted to model. There are also many models that contrast monolingual and bilingual speech production, gestural and verbal speech production, and natural and artificial speech production (described in separate chapters of this online text). Until a computer is created that can first think independently, and then learn to produce language with the same generative capacity seen in human languages to express those independent thoughts, there will continue to be no single, stand-alone model that satisfies all dimensions of the process of speech production.

Learning Exercise

Critical Thinking

It may be helpful to quickly review the "Speech Errors" chapter of this textbook.

1. What do the following examples of speech errors/phenomena tell us about the process of speech production? What stages and sub-stages of each model do these examples provide evidence for? Though some examples provide evidence of more than one stage, please identify at least one stage per example. It might be helpful to say them out loud to yourself to see how intonation or pronunciation are affected by the error/phenomena in each example.

2. Which of the above speech errors are not accounted for by the Fromkin model or the Garrett model? How are these errors better accounted for by the Bock and Levelt model and by parallel-processing models of speech production?

3. a) Using the Dell model as a reference, draw a semantic network that demonstrates how the following speech errors/phenomena might occur (assume error and target nodes are not separated by more than 3 nodes). Remember that in this model there are nodes for many aspects of the utterance, including phonemes, morphemes, syllables, concepts etc.

b) Using the semantic network below, come up with plausible examples of the following kinds of speech errors/phenomena following the principles of a connectionist model of speech production:

A. Freudian slip

B. Phoneme switch

C. Word switch

D. Morpheme switch

E. Perseveration/anticipation

F. The lexical bias effect

4. How do the parallel and serial models of speech production explain the lexical bias effect differently? How does each type of model justify there being more of an effect at slow speech rates than at high speech rates?

Home Lab

In order to get an idea of how language processing can be assessed, try the following pseudo-experiment. Please read these instructions carefully before opening the video hyperlink. The video contains 40 word-pairs. Your task is to decide how the two words of each pair are related: semantically (similar in meaning), phonetically (consisting of similar phonetic units) or not at all. Before pressing the link for the video, please take out a piece of paper and create a tally with the following headings: Semantically Related, Phonetically Related, and Not Related. Once you start the video, focus on the cross in the middle of the screen. Word pairs will be presented for a few seconds, followed by the focal point (cross), followed by the next pair; this will continue for 40 word-pairs. Look at the word-pairs quickly and decide how they are related. Put a tick in the appropriate column of your tally sheet. For each word pair, you must choose one answer only, placing one tick on your tally sheet per pair such that you have 40 ticks in total. Once the video is over (it will say "Congratulations You Are Finished. Tally Time!"), tally up each column. Make sure to let the video load completely before pressing play. Take this time (while it loads) to reread the instructions, which are repeated quickly in the video. Because the instructions appear quickly, pause the video and take the time to read and completely understand them before pressing play. Give it a try! Press the following hyperlink to access the video file [2]

Did you find any difference between the total number of times that you reported semantic compared to phonetic relatedness? What does this tell you about the order in which you process semantics and phonetics? In this case, the word list consisted of 10 semantically related word-pairs, 10 phonetically related word-pairs, 10 semantically and phonetically related word-pairs, and 10 non-related word-pairs to serve as controls. If we do indeed process the semantics prior to the phonetics of a word, as all of the above models suggest, the word-pairs that were both semantically and phonetically related would more often be reported as being semantically related than phonetically related. Do not be dismayed if you did not find this result; remember that this is simply a pseudo-experiment. In complete studies, the stimuli would be much more extensive (~200 word pairs, not 40). Try it with a friend and see what their results indicate. This exercise is also intended to encourage you to think critically about experimental designs. Can you think of a few limitations to the design of this pseudo-experiment? How could you change the experiment to address these issues?
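If you would like to check your tally sheet mechanically, the sketch below shows one way to read it. It rests on a strong assumption that you should question as part of the exercise: that every singly related pair and every control pair was placed in its own column, so that any surplus in a column comes from the 10 doubly related pairs. The function name and the example numbers are hypothetical.

```python
def interpret_tally(semantic, phonetic, unrelated, pairs_per_type=10):
    """Estimate how the doubly related pairs were classified from column totals."""
    total = semantic + phonetic + unrelated
    expected_total = 4 * pairs_per_type  # four pair types, one tick per pair
    if total != expected_total:
        raise ValueError(f"expected {expected_total} ticks, got {total}")

    # Assumption: pure semantic, pure phonetic and control pairs each filled
    # their own column, so the surplus reflects the doubly related pairs.
    return {
        "both_as_semantic": semantic - pairs_per_type,
        "both_as_phonetic": phonetic - pairs_per_type,
        "both_as_unrelated": unrelated - pairs_per_type,
    }


# Made-up tally: 17 semantic ticks, 13 phonetic ticks, 10 unrelated ticks.
print(interpret_tally(semantic=17, phonetic=13, unrelated=10))
```

For the made-up tally above, 7 of the doubly related pairs would have been judged semantic and 3 phonetic, which is the direction the models predict. A negative number in any field signals that the assumption failed for your data, which is itself one of the design limitations worth discussing.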

References

1. Hinojosa, J.A., Méndez-Bértolo, C., Carretié, L., and Pozo, M.A. (2010). Emotion modulates language production during covert picture naming. Neuropsychologia. 48:1725-1734.

2. Clark, H.H., and Clark, E.V. (1977). Psychology and language: An introduction to psycholinguistics. New York: Harcourt Brace Jovanovich.

3. Fromkin, V.A. (1971). The non-anomalous nature of anomalous utterances. Language. 47:27-52.

4. Fromkin, V.A. (1973). Speech Errors as Linguistic Evidence. The Hague, Netherlands: Mouton.

5. Clark, H.H., and Clark, E.V. (1977). Psychology and language: An introduction to psycholinguistics. New York: Harcourt Brace Jovanovich.

6. Rodriguez-Fornells, A., Schmitt, B.M., Kutas, M., and Münte, T.F. (2002). Electrophysiological estimates of the time course of semantic and phonological encoding during listening and naming. Neuropsychologia. 40:778-787.

7. Hartsuiker, R.J., Corley, M., and Martensen, H. (2005). The lexical bias effect is modulated by context, but the standard monitoring account doesn’t fly: Related reply to Baars et al. (1975). Journal of Memory and Language. 52:58-70.

8. Garrett, M.F. (1975). Syntactic process in sentence production. In G. Bower (Ed.), Psychology of learning and motivation: Advances in research and theory. 9:133-177.

9. Garrett, M.F. (1980). The limits of accommodation. In V. Fromkin (Ed.), Errors in linguistic performance (pp. 263-271). New York: Academic.

10. Bock, K., and Levelt, W.J.M. (1994). Language production: Grammatical encoding. In M.A. Gernsbacher (Ed.), Handbook of psycholinguistics (pp. 741-779). New York: Academic Press.

11. Jay, T.B. The psychology of language. New Jersey: Pearson Education.

12. Indefrey, P., and Levelt, W.J.M. (2000). The neural correlates of language production. In M.S. Gazzaniga (Ed.), The New Cognitive Neurosciences (2nd Ed., pp. 845-866). Cambridge: MIT Press.

13. Dell, G.S. (1986). A spreading-activation theory of retrieval in sentence production. Psychological Review. 93:283-321.

14. Dell, G.S., Chang, F., and Griffin, Z.M. (1999). Connectionist models of language production: Lexical access and grammatical encoding. Cognitive Science. 23:517-542.

15. Dell, G.S., and O'Seaghdha, P.G. (1994). Inhibition in interactive activation models of linguistic selection and sequencing. In D. Dagenbach & T.H. Carr (Eds.), Inhibitory processes in attention, memory, and language (pp. 409-451). San Diego: Academic Press.

16. Oppenheim, G.M., and Dell, G.S. (2007). Inner speech slips exhibit lexical bias, but not the phonemic similarity effect. Cognition. 106:528-537.

17. Caramazza, A., and Miozzo, M. (1997). The relation between syntactic and phonological knowledge in lexical access: Evidence from the 'tip-of-the-tongue' phenomenon. Cognition. 64:309-343.

18. Vigliocco, G., Antonini, T., and Garrett, M.F. (1997). Grammatical gender is on the tip of Italian tongues. Psychological Science. 8:314-317.

19. Dell, G.S. (1985). Positive feedback in hierarchical connectionist models: Applications to language production. Cognitive Science. 9:3-23.