Gradient descent

Introduction

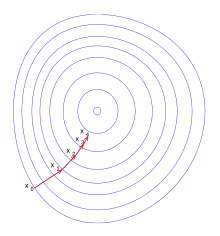

[edit | edit source]The gradient method, also called steepest descent method, is a method used in Numerics to solve general Optimization problems. Here one starts (at the example of a minimization problem) from an approximate value. From this one proceeds in the direction of the negative gradient (which indicates the direction of steepest descent from this approximate value) until one no longer achieves numerical improvement.[1]

Wiki2Reveal

[edit | edit source]This page can be displayed as Wiki2Reveal slides. Single sections are regarded as slides and modifications on the slides will immediately affect the content of the slides.

Remark Convergence

[edit | edit source]The method often converges very slowly, since it approaches the optimuÏm with a strong zigzag course. For the solution of symmetric positive definite linear system of equations, the method of conjugate gradients offers an immense improvement here. Gradient descent is possible with the hill climbing algorithm (hill climbing).

The optimization problem

[edit | edit source]The gradient method is applicable when the minimization of a real-valued differentiable function is involved; i.e., the optimization problem

This is a problem of Optimization without constraints, also called unconstrained optimization problem.

Essential steps

[edit | edit source]- Gradient points in the direction of the steepest increase.

- The negative gradient therefore points in the direction in which the function values of decrease.

- It can happen that one jumps over the local minimum of the function during an iteration step. Then one would decrease the step size accordingly to further minimize and more accurately approximate the function value of .

Termination condition

[edit | edit source]- Termination condition for the gradient descent method would be when we have found a location with the iteration at which the gradient of is 0

- .

In general, the gradient of a place for the -th iteration step is defined as follows via the partial derivatives:

- .

Example of a gradient

[edit | edit source]Sei :

- .

This allows us to calculate the gradient at a given point in the domain of definition:

Example - normalized gradient

[edit | edit source]Using a gradient different from the zero vector , one can create a normalized "negative" gradient:

The procedure

[edit | edit source]A simplified step size calculation is used as an introduction to the gradient descent procedure.

- The procedure terminates if the gradient is the zero vector.

- If the gradient is not the zero vector, the negative gradient is first normalized to length 1 and multiplied by the step size .

- The step size is halved if after the iteration step the function value (e.g. cost) does not decrease.

- Another termination condition for the iteration is if the step size falls below an accuracy limit (i.e. ).

Start of the optimization

[edit | edit source]As starting point a point is chosen from the definition domain of the function , for which one wants to approximate local minima with the gradient descent method.

Direction of the steepest descent

[edit | edit source]Starting from a starting point resp. from the current point for the next iteration step, the direction of steepest descent is determined by , where is the Gradient of at the location . This points in the direction of the "steepest increase". The negative sign in front of the gradient ensures that one moves with the iteration steps in the direction of the strongest decrease (e.g. minimization of the cost function/error function ).

Normalization of the direction vector

[edit | edit source]The simplified interation procedure terminates at the condition if . Otherwise, the direction vector is normalized for the following iteration step (this is optional to normalize the step size in the learning algorithm) :

</math> with Euclidean norm

Iteration step

[edit | edit source]

Formally, one notates this iteration step as follows:

If there is no improvement, the step size is decreased (e.g., halved).

Setting the step size

[edit | edit source]The step size is used for the next iteration step until the cost function increases with the subsequent step. In this introductory example, the step size is halved. Formal

Alternative step size reduction

[edit | edit source]In general, the step size reduction can also be replaced by a factor with over

can be replaced.

Step size definition per iteration step

[edit | edit source]Here is the step size in the jth iteration step. This step size must be determined in each step of the iteration procedure. In general, there are different ways to do this, such as regressing the step size determination on a one-dimensional optimization problem. The here chosen step size optimization is chosen as an introduction to the topic.

Gradient descent in spreadsheet

[edit | edit source]In the following ZIP file from GitHub is a LibreOffice file with an example gradient descent for the cost function:

- .

The cost function has infinitely many local minima on its domain of definition . By definition, the minimum of the cost function is -1. In each table row, we perform an iteration step and check that the cost function is actually decreasing after the iteration step.

Regression to a one-dimensional optimization problem

[edit | edit source]One method is to determine by minimizing the function on the (one-dimensional) "ray" that points in the direction of the negative gradient starting from . The one-dimensional function to be minimized is defined as follows.

- mit

One computes in this case the with such that becomes minimal. i.e.:

This is a simple, one-dimensional optimization problem for which there are special methods of Step size determination.

Step sizes and iterated step size reduction

[edit | edit source]Another method is to make dependent on the minimization of the function , i.e., on the condition . If with the iteration step the function value with a starting step size is not decreased, one decreases the step size e. B. with with further until the step size starting from in the direction of the negative gradient actually yields a function value and sets .

If one has reached a place with in the iteration procedure, possibly a local extreme place of is present (check or abort of the iteration procedure).

Termination condition

[edit | edit source]A key criterion for a termination condition is that . As in real one-dimensional analysis, there must be no local minimum at this point (one-dimensional saddle point, multidimensional, e.g., saddle surface). If for is twice continuously differentiable and the Hessian matrix is positive definite at this point, there is sufficient criterion for a local minimum in . This is output and the iteration is terminated.

If the algorithm is executed on a computer, a possible further termination criterion is the step length, if this becomes smaller than a lower limit with the condition .

Furthermore, the gradient descent procedure can be terminated if the improvement of the optimization of in the interation steps becomes smaller than a lower bound.

Such termination criteria algorithmically ensure that the gradient descent procedure does not end up in an infinite loop of iterations.

- CAS4Wiki Start-Link - Derivatives and Gradient: Derivatives and Gradient

Videos

[edit | edit source]- contour lines, gradient, vector field, vector analysis, ... Youtube video (07/21/2015) by Daniel Jung.

- gradient and total differential Youtube video (21.07.2015) by Daniel Jung

Literature

[edit | edit source]- ↑ „Gradientenverfahren“. In: Wikipedia, Die freie Enzyklopädie. Bearbeitungsstand: 24. September 2016, 13:24 UTC. URL: https://de.wikipedia.org/w/index.php?title=Gradientenverfahren&oldid=158180650 (Abgerufen: 21. November 2017, 11:49 UTC)

- ↑ 2.0 2.1 Gradient Descent with Spreadsheet, Jörg Rapp, Engelbert Niehaus (2018) GitHub Repository https://github.com/niebert/GradientDescent - ZIP: https://github.com/niebert/GradientDescent/archive/master.zip (last accessed 2019/04/28)

See also

[edit | edit source]Page Information

[edit | edit source]You can display this page as Wiki2Reveal slides

Wiki2Reveal

[edit | edit source]The Wiki2Reveal slides were created for the Numerical Analysis' and the Link for the Wiki2Reveal Slides was created with the link generator.

- This page is designed as a PanDocElectron-SLIDE document type.

- Source: Wikiversity https://en.wikiversity.org/wiki/Gradient%20descent

- see Wiki2Reveal for the functionality of Wiki2Reveal.