Psycholinguistics/Reading

Reading is the process of decoding a set of written symbols that have been assigned linguistic meaning, for the purpose of communicating ideas. This chapter explores reading from a psychological perspective: the components of written language that allow widespread understanding and communication, how people initially learn to read, and the roles of phonological skill and other predictors of reading success. It also explores major cognitive theories of reading and the physical correlates of online reading processes. Note that this discussion of reading is heavily biased toward alphabetic languages in general, and English in particular.

Literacy and Thought

Literacy, the ability to understand, generate, and manipulate written text, is highly important for day-to-day functioning in most modern societies. While language itself, particularly expressive ability (through speech or signing), may be an innate human trait,[1][2] the ability to read is not. Written communication is still very new from an evolutionary standpoint, having developed only within the past 10,000 years,[3] and all new readers require extensive training to gain this skill.

This separation from spoken communication has led to suggestions that reading is not simply the process of converting written representations back into speech, but an entirely separate way for a speaker to perceive and understand language.[4] According to David Olson (1996), learning to read is simultaneous with the discovery of organized linguistic structures, and he points to the effects of literacy on many different aspects of cognition and linguistic performance to demonstrate this. For example, readers of alphabetic languages (which employ a set of graphemes linked to phonemes) begin to perceive words not just as discrete objects but as made up of constituent letters. Literacy enables readers to complete phonemic segmentation tasks, such as adding or deleting phonemes from a word (e.g. deleting /f/ from fish to yield ish), which are largely beyond nonreaders.[5][6] Children demonstrate this phonemic awareness in attempts to spell unknown words: they may use letters whose names sound like the phonemes they are attempting to represent (e.g. spelling lady as lade), implying that they are beginning to process speech in terms of new categories given by letter names.[7] Learning to read also gives readers the tools to judge grammaticality based on written cues rather than on meaning gleaned from aural comprehension, improving performance on grammatical judgment tasks.[8][9] This goes hand in hand with the development of proper punctuation use, an ability that also improves with greater literacy because it is tied to understanding the grammatical structure of clauses and sentences. Of course, acquisition of these skills is gradual, developing along with mastery and fluency in beginning readers. Nevertheless, the evidence suggests that literacy has substantial effects not only on perception and performance in linguistic tasks, but also on a speaker's relationship to the structure and function of their native language.

Learning to Read

A well-regarded model of reading development, originated by Dr. Linnea Ehri, a professor at the CUNY Graduate Center, draws on data from studies of children (ranging from preliterate to fluent readers) that reveal broad developmental phases through which readers progress. While by no means the only possible model of reading development, and perhaps not fully applicable to bilingual learners,[10] the major phases she describes provide a useful and influential framework for thinking about reading development[11] and continue to serve as a basis for further research.[12] Using a conceptually modified version of an earlier stage model,[13] Ehri posits that when learning an alphabetic language (such as English or German, which use graphemes to represent phonemes), children begin as logographic readers, move on to novice and then mature alphabetic reading, and eventually progress to fluent orthographic reading.[14] Although each successive phase tends to have more influence on the strategies used to decipher text, the phases should not be seen as entirely discrete, sequential stages. They are better understood as bidirectionally related groups of decoding strategies and linguistic knowledge, gained over time, that expand the reader's repertoire of reading skills.

Logographic Reading


Logographs are visual symbols that stand for entire morphemes or words rather than individual phonemes. While mature reading in languages such as Chinese is based upon a logographic system, readers of alphabetic languages use logographic techniques to begin learning to recognize written words. Logographic reading involves recognizing text and letters as meaningful units and mapping particular sounds to visual symbols. This is accomplished by recognizing salient features of words, such as letter shape, logo colours, and other arbitrary visual markers, in order to label the word in semantic memory. Because word identification at this stage relies entirely on these markers, this phase is referred to as visual-cue reading.[16] Direct connections between letters and sounds are not yet involved, so recognition is limited to whole words, which may be reported as semantic equivalents rather than as specific pronunciations of the presented words. Logographic readers tend to show knowledge of words that are inextricably linked to highly familiar visual features, such as their own names or common corporate logos. However, they have no strategies for recognizing unfamiliar words.

Alphabetic

Alphabetic readers have begun to use grapheme-phoneme relationships to decode words. For the first time, readers recognize that words are not just holistic visual symbols but are made up of constituent letters that correspond to particular sounds. There is a shift away from linking whole words with general semantic information in memory, and toward the more systematic method of connecting spellings with unique pronunciations. This phase can be divided into two substages of development: novice and mature alphabetic reading. Novice alphabetic readers rely on phonetic-cue reading,[16][17] in which certain salient phonetic features of words are used as memory cues. The first and/or last letters of the word and their corresponding phonemes are often used, such as /m/ and /k/ in milk. In this way, letters begin to be directly connected to pronunciation in the reader's mental lexicon.
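To make the limitation of phonetic cues concrete, here is a minimal Python sketch, assuming (hypothetically) that a novice reader stores each word only under the sounds of its first and last letters. The letter-sound table and the word list are illustrative inventions, not materials from Ehri's studies.

```python
# Toy model of phonetic-cue reading (hypothetical simplification): a novice
# reader remembers each word only by the sounds of its first and last letters.
LETTER_SOUNDS = {"m": "/m/", "k": "/k/", "s": "/s/", "t": "/t/"}


def phonetic_cue(word):
    """Return the (first, last) letter sounds used as the memory cue."""
    return (LETTER_SOUNDS.get(word[0], "?"), LETTER_SOUNDS.get(word[-1], "?"))


def build_cue_memory(words):
    """Group known words under their phonetic cue."""
    memory = {}
    for word in words:
        memory.setdefault(phonetic_cue(word), []).append(word)
    return memory


memory = build_cue_memory(["milk", "mask", "mink", "sit"])
# All three m...k words share one cue, so the cue alone cannot tell them apart.
print(memory[phonetic_cue("milk")])  # ['milk', 'mask', 'mink']
```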

However, phonetic cues are not sufficient to differentiate words that share some letters and sounds. Mature alphabetic readers begin to use phonological recoding to identify words: they use implicit rules to draw consistent connections between written letters and spoken phonemes, and blend those constituents into whole words, a process known as cipher reading.[18] This ability becomes progressively more fluent with practice, shifting from slow, spoken "sounding-out" to quick, internal blending of phonemes.[19] Decoding strategies also progress with more exposure to print: readers begin with sequential decoding, in which single graphemes correspond to single phonemes, and are later able to implement hierarchical decoding, in which a grapheme signals how a neighbouring grapheme is pronounced (e.g. the i in city and the e in cell both mark the preceding c as /s/).[20] Eventually, phonological recoding is used to establish words in memory and allow identification of words by sight. Words are processed using recoding, and eventually the pattern of letters is "bonded" in memory to phonological knowledge of the word. Recognition of the word's spelling then maps automatically to its pronunciation and meaning, without the use of phonological rules.
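The difference between sequential and hierarchical decoding can be sketched with a tiny, hypothetical rule set in which one context rule (c before e, i, or y is /s/) is layered on top of one-letter-one-phoneme mappings. This is an illustration under stated assumptions, not a model of how children actually represent such rules.

```python
# Hypothetical fragment of English grapheme-phoneme mappings (IPA symbols).
SIMPLE_GPC = {"c": "k", "i": "ɪ", "e": "ɛ", "u": "ʌ", "t": "t", "y": "i", "l": "l", "p": "p"}
SOFTENING_LETTERS = {"e", "i", "y"}


def decode_sequential(word):
    """Map each letter to one phoneme, ignoring its neighbours."""
    return [SIMPLE_GPC[letter] for letter in word]


def decode_hierarchical(word):
    """As above, except that a following e, i, or y marks c as /s/."""
    phonemes = []
    for index, letter in enumerate(word):
        following = word[index + 1] if index + 1 < len(word) else ""
        if letter == "c" and following in SOFTENING_LETTERS:
            phonemes.append("s")
        else:
            phonemes.append(SIMPLE_GPC[letter])
    return phonemes


print("".join(decode_sequential("city")))    # kɪti
print("".join(decode_hierarchical("city")))  # sɪti
print("".join(decode_hierarchical("cup")))   # kʌp
```

The toy rules do not merge doubled letters, so cell comes out with two /l/ symbols; only the treatment of c is the point here.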

Orthographic

Orthographic readers continue to use sight-word strategies, but their recoding skills undergo "progressive lexicalization".[21] Readers learn to consolidate[14] grapheme-phoneme patterns that recur across many words, enabling them to decode words using larger units than before, such as morphemes and letter clusters. Experience with reading, and the progressive automatization of phonological recoding for these common letter patterns, increases recoding efficiency. Using larger units within words also eases the establishment of sight words in memory and speeds up later retrieval. Larger units give readers more strategies for identifying unfamiliar words as well, such as drawing analogies to known words with similar morphemic relationships or spelling (e.g. pronouncing the nonword pednesday as penns-day rather than ped-ness-day, indicating an analogy to Wednesday[20]).
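One way to picture decoding with larger units is greedy left-to-right matching that prefers the longest unit the reader knows. The sketch below is an assumption-laden simplification, not the analogy mechanism proposed in the literature: the unit table is hypothetical, with the chunk dnes standing in for what a reader might have consolidated from Wednesday.

```python
# Hypothetical unit tables: single letters only, versus letters plus two
# larger chunks ("dnes" from Wednesday, and "day").
LETTER_UNITS = {"p": "p", "e": "ɛ", "d": "d", "n": "n", "s": "s", "a": "æ", "y": "i"}
CHUNK_UNITS = {**LETTER_UNITS, "dnes": "nz", "day": "deɪ"}


def decode_by_units(word, units):
    """Greedy left-to-right decoding that always tries the longest known unit first."""
    longest = max(len(unit) for unit in units)
    phonemes, i = [], 0
    while i < len(word):
        for size in range(min(longest, len(word) - i), 0, -1):
            chunk = word[i:i + size]
            if chunk in units:
                phonemes.append(units[chunk])
                i += size
                break
        else:
            raise ValueError(f"no known unit starts at {word[i:]!r}")
    return "".join(phonemes)


print(decode_by_units("pednesday", LETTER_UNITS))  # pɛdnɛsdæi
print(decode_by_units("pednesday", CHUNK_UNITS))   # pɛnzdeɪ
```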

Major Predictors of Reading Success

Phonological Awareness

Phonological awareness (PA) is the awareness of, and ability to manipulate, sounds within words.[22] PA consists of both phonological synthesis (the ability to blend distinct phonemes into cohesive words) and phonological analysis (the ability to break words into constituent parts), but the two abilities are inextricable from one another.[23] Early PA is extremely important for later mastery of reading in alphabetic languages: PA development predicts reading progress, studies indicate that preliterate phonological training improves reading performance, and reading disabilities are retrospectively associated with phonological problems.[22] One reliable indicator of PA and its link to reading ability is invented spelling: one study found that performance on PA tasks accounted for 93% of the variance in guessed spellings of unknown words.[24] More phonologically accurate guesses on these tasks predict better early reading skills. Conversely, limited PA interferes with later acquisition of grapheme-phoneme correspondence rules and word recognition skills.[3]

Linguistic Consistency

Phonological abilities are generally associated with reading performance in the early years of learning, but this association declines in the later grades of elementary school. de Jong and van der Leij found that, among Dutch students, differences in reading acquisition after the end of grade 1 could be explained by earlier individual differences in reading ability rather than by phonological ability.[22] In American children, however, a phonological influence on reading acquisition has been observed for much longer, until grade 4. This is likely due to the difference in grapheme-phoneme consistency between Dutch and English: Dutch has a much more consistent, or transparent, orthography than English, which is highly irregular.[25] Accuracy in word decoding takes much longer to develop in learners of non-transparent languages, since fluent phonological recoding depends on the ability to reliably map written letters to pronounced sounds, a process made much more difficult when graphemes do not always represent the same phoneme. Indeed, in a 2003 analysis of major European languages, English was ranked as the most orthographically inconsistent.[26] Accordingly, a 2003 study found that by the end of grade 1, German and Dutch children showed 85% accuracy in reading nonwords (and Swedish children 90%), while English readers lagged significantly behind at 50% accuracy and did not catch up until grade 4.[27]
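Orthographic consistency can be quantified in several ways. A minimal sketch of one simple measure, assumed here for illustration rather than taken from the cited studies, is the proportion of a grapheme's occurrences that map to its most common phoneme. The hand-made alignments below cover four English words containing ea.

```python
from collections import Counter, defaultdict


def grapheme_consistency(aligned_words):
    """For each grapheme, return the share of its occurrences that use its most common phoneme.

    aligned_words is a list of words, each a list of (grapheme, phoneme) pairs.
    """
    counts = defaultdict(Counter)
    for word in aligned_words:
        for grapheme, phoneme in word:
            counts[grapheme][phoneme] += 1
    return {
        grapheme: phonemes.most_common(1)[0][1] / sum(phonemes.values())
        for grapheme, phonemes in counts.items()
    }


# Hand-made (hypothetical) alignments for four English words containing "ea".
ENGLISH_SAMPLE = [
    [("b", "b"), ("ea", "iː"), ("d", "d")],              # bead
    [("h", "h"), ("ea", "ɛ"), ("d", "d")],               # head
    [("g", "g"), ("r", "r"), ("ea", "eɪ"), ("t", "t")],  # great
    [("m", "m"), ("ea", "iː"), ("t", "t")],              # meat
]

scores = grapheme_consistency(ENGLISH_SAMPLE)
print(scores["ea"], scores["d"])  # 0.5 1.0
```

In a perfectly transparent orthography every grapheme would score 1.0; a grapheme like ea, with three pronunciations in only four words, is what drags a language's consistency down.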

Models of Reading

Multiple theories have been proposed to explain the cognitive processes underlying reading ability and comprehension. Although models abound in the literature, each giving slightly different precedence to visual input (bottom-up) and conceptual context (top-down), space limits this chapter to the connectionist and dual-route cascaded (DRC) models. Both theories have been continuously investigated and developed over the past two to three decades, and both are computational implementations of cognitive theories, focusing on word recognition and processing. This approach, implementing a theory of cognition as a computer program, allows a direct test of how closely the model's output matches the human behaviour it is meant to explain.

Connectionism


Many variations on connectionist models have since been devised, but the original connectionist model of visual word recognition and pronunciation was put forward by Seidenberg and McClelland in 1989.[28] This model is based on the principle of parallel distributed processing (PDP): processing is distributed across a network of simple units and is conducted in parallel rather than sequentially. Seidenberg and McClelland posit a three-layer model, composed of sets of orthographic and phonological units and an intermediate layer of "hidden" units that function only as mediators and neither receive external input nor produce output. The aim is a "minimal model" of reading aloud and making lexical decisions, in which little is hard-wired and most of the processing structure develops through learning. Its key feature is that it uses a single procedure to map orthographic representations to phonology for regular words, irregular words, and nonwords alike, rather than dividing processing into multiple routes depending on the category of input.

There is no discrete lexicon of known words and no underlying set of pronunciation rules to consult: computation instead relies on learned experience with grapheme-phoneme correspondences. Connections between units are weighted to increase or decrease the propagation of activation, and these weights are modified by learning. Initially random weights are adjusted by backpropagation: the model's output is compared to the correct output, and the weights are shifted to reduce the difference. Orthographic units are activated by particular clusters of letters, phonological units are activated by clusters of phonemes, and the hidden units mediate between the two sets of representational units. In this model, lexical decisions (classifying stimuli as words or nonwords) are made by analyzing patterns of activation: real words produce more familiar orthographic and phonological patterns than nonwords do.
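The learning principle can be sketched with a deliberately tiny three-layer network trained by backpropagation. This is not a reimplementation of the 1989 model, whose input and output coding and training corpus were far richer; the three-slot toy orthography, the layer sizes, the learning rate, and the word list are all hypothetical. What it shares with the model is the absence of a lexicon or rule table: the spelling-to-sound mapping lives entirely in learned weights.

```python
import math
import random

# Hypothetical toy orthography: three-letter words with one slot each for
# onset, vowel, and coda. Each slot is coded one-hot.
SLOTS = ("cm", "ai", "tp")                       # orthographic units
PHONEMES = (("k", "m"), ("æ", "ɪ"), ("t", "p"))  # phonological units


def one_hot(symbols, layout):
    """One unit per possible symbol in each slot; 1.0 where the symbol is present."""
    return [1.0 if symbols[i] == option else 0.0
            for i, options in enumerate(layout) for option in options]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class ThreeLayerNet:
    """Orthographic units -> hidden units -> phonological units, no hand-coded rules."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # Small random initial weights; the last weight in each row is a bias.
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                      for _ in range(n_out)]

    def forward(self, x):
        xb = list(x) + [1.0]
        hb = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w_hidden] + [1.0]
        y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w_out]
        return xb, hb, y

    def train_step(self, x, target, lr=0.5):
        """One backpropagation update on squared error."""
        xb, hb, y = self.forward(x)
        # Error signals at the output units, then propagated back to hidden units.
        d_out = [(t - o) * o * (1 - o) for t, o in zip(target, y)]
        d_hidden = [hb[j] * (1 - hb[j]) * sum(d * self.w_out[k][j] for k, d in enumerate(d_out))
                    for j in range(len(self.w_hidden))]
        for k, row in enumerate(self.w_out):
            for j in range(len(row)):
                row[j] += lr * d_out[k] * hb[j]
        for j, row in enumerate(self.w_hidden):
            for i in range(len(row)):
                row[i] += lr * d_hidden[j] * xb[i]

    def read_aloud(self, word):
        """Choose the most active phonological unit in each slot."""
        y = self.forward(one_hot(word, SLOTS))[2]
        result, start = [], 0
        for options in PHONEMES:
            scores = y[start:start + len(options)]
            result.append(options[scores.index(max(scores))])
            start += len(options)
        return "".join(result)


def total_error(net, pairs):
    """Summed squared error over (spelling, pronunciation) pairs."""
    return sum(
        sum((t - o) ** 2 for t, o in zip(one_hot(p, PHONEMES), net.forward(one_hot(w, SLOTS))[2]))
        for w, p in pairs
    )


TRAINING = [("cat", "kæt"), ("map", "mæp"), ("mit", "mɪt"), ("cap", "kæp")]
net = ThreeLayerNet(n_in=6, n_hidden=4, n_out=6, seed=1)
before = total_error(net, TRAINING)
for _ in range(2000):
    for spelling, pronunciation in TRAINING:
        net.train_step(one_hot(spelling, SLOTS), one_hot(pronunciation, PHONEMES))
after = total_error(net, TRAINING)
print(round(before, 3), round(after, 3), net.read_aloud("mip"))  # "mip" was never trained
```

Because every spelling passes through the same weights, the untrained string mip receives a pronunciation by the same procedure as the trained words; whether it comes out as the expected /mɪp/ depends on what the small network happens to learn.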

This system resembles the way in which people associate orthographic and semantic information with the phonological representations of words: nodes associated with a word's appearance and meaning, as well as its sound, may all be activated to some extent when it is presented. The degree of grapheme-phoneme consistency in different languages can also be represented through varied weights on the connections between orthographic and phonological units. However, the model is limited to monosyllabic words (although related models have since been extended to polysyllabic words[29]), cannot compute syllabic stress, and cannot address the effects of semantic priming or context.

DRC

The dual-route cascaded model is a computational model of word recognition and reading aloud.[30][31] In contrast to connectionist models, DRC proposes a hard-wired cognitive structure, consisting of two discrete cognitive paths for reading: the lexical nonsemantic route and the grapheme-phoneme correspondence route. Both routes proceed through multiple interacting levels, each made up of meaningful units (e.g. letter features, whole letters, phonemes, or words).

(Chart created by Bastian Schledde)

The lexical nonsemantic route is used to identify known words and read them aloud. The basic letter features present in a word activate all relevant letter units at once. A letter activated in a given position increases the activation of every orthographic (whole-word) unit that contains that letter in that position, and inhibits units that do not. The combination of letter activations eventually activates one particular orthographic unit, which excites the corresponding unit in the phonological lexicon (linking a known word's spelling with its pronunciation). This phonological unit activates the relevant phoneme units, which together specify the spoken output.
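The position-specific letter-to-word activation described above can be sketched as a simple cycle-by-cycle accumulator. This is a minimal sketch, not DRC's actual equations or parameters: the excitation and inhibition rates, the threshold, and the lexicon are hypothetical, and it assumes (as in the interactive-activation framework that DRC builds on) that a mismatching letter inhibits a word unit. The number of cycles to threshold plays the role of a naming latency.

```python
# Hypothetical toy lexicon: orthographic units mapped to phonological lexicon entries.
PHONOLOGICAL_LEXICON = {"cat": "kæt", "cot": "kɒt", "cap": "kæp", "dog": "dɒg"}


def lexical_route(stimulus, lexicon=PHONOLOGICAL_LEXICON, excite=0.1, inhibit=0.05,
                  threshold=1.0, max_cycles=50):
    """Cycle letter-to-word activation until one orthographic unit reaches threshold.

    Each cycle, every letter in position excites word units with that letter in
    that position and inhibits word units with a different letter there.
    Returns (word, pronunciation, cycles), or (None, None, max_cycles).
    """
    activation = {word: 0.0 for word in lexicon}
    for cycle in range(1, max_cycles + 1):
        for word in lexicon:
            if len(word) != len(stimulus):
                continue
            matches = sum(a == b for a, b in zip(word, stimulus))
            mismatches = len(word) - matches
            # Activation is floored at zero.
            activation[word] = max(0.0, activation[word] + excite * matches - inhibit * mismatches)
        winner = max(activation, key=activation.get)
        if activation[winner] >= threshold:
            return winner, lexicon[winner], cycle
    return None, None, max_cycles


print(lexical_route("cat"))  # ('cat', 'kæt', 4)
print(lexical_route("zzz"))  # (None, None, 50)
```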

Unfamiliar words or nonwords are processed via the grapheme-phoneme correspondence (GPC) route, the relatively slower pathway of word identification. This route converts individual written letters into phonemes using GPC rules, by mapping letters or sets of letters onto their single corresponding phonemes. The visual features of an unknown word activate corresponding feature and letter units, as in the lexical nonsemantic route, and the GPC route sequentially matches each letter with a phoneme. Once all the phonemes meet a certain threshold of activation, they will be combined into a pronunciation. This route bypasses orthographic and lexical units, relying entirely on "sounding out" words for identification.
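A minimal sketch of the serial GPC route follows, assuming a hypothetical rule table in which two-letter graphemes such as sh and ee are tried before single letters. Real DRC rule sets are much larger and include context-sensitive rules.

```python
# Hypothetical fragment of GPC rules, including two multi-letter graphemes.
GPC_RULES = {
    "sh": "ʃ", "ee": "iː",
    "b": "b", "e": "ɛ", "i": "ɪ", "l": "l", "n": "n", "p": "p", "s": "s", "t": "t",
}


def gpc_route(letters):
    """Convert letters to phonemes left to right, trying two-letter graphemes first."""
    phonemes, i = [], 0
    while i < len(letters):
        pair = letters[i:i + 2]
        if len(pair) == 2 and pair in GPC_RULES:
            phonemes.append(GPC_RULES[pair])
            i += 2
        else:
            phonemes.append(GPC_RULES[letters[i]])
            i += 1
    return phonemes


print(gpc_route("sheb"))  # ['ʃ', 'ɛ', 'b']
print(gpc_route("pint"))  # ['p', 'ɪ', 'n', 't']
```

Because the rules know nothing about individual words, the irregular word pint (pronounced /paɪnt/) comes out regularized as /pɪnt/, which is why the model needs the lexical route for exception words.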

The DRC model performs very well in tests of reading: it correctly read aloud 7,980 of the 7,981 words in its lexicon. In addition to modeling reading aloud, it can model both surface and phonological dyslexia by modifying the activation of each route, and can even recreate the reading delay introduced by naming the colour in which a colour name is written rather than reading the word itself (the Stroop effect). However, the model is limited to monosyllabic words, has no method for computing lexical stress, and its performance on the lexical decision task is crude at best.[31]
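The lesioning logic can be illustrated with a deliberately simplified reader. In DRC both routes run in parallel and jointly activate phoneme units, and damage is graded; the sketch below instead assumes an all-or-none, lexical-first shortcut, and its mini-lexicon and letter rules are hypothetical.

```python
# Hypothetical mini-lexicon (word -> stored pronunciation) and single-letter GPC rules.
LEXICON = {"pint": "paɪnt", "mint": "mɪnt"}
GPC = {"b": "b", "i": "ɪ", "m": "m", "n": "n", "p": "p", "t": "t"}


def read_aloud(letters, lexical_intact=True, gpc_intact=True):
    """Return a pronunciation, or None if no intact route can produce one.

    A known word is read by the lexical route when it is intact; otherwise the
    GPC route assembles a pronunciation letter by letter.
    """
    if lexical_intact and letters in LEXICON:
        return LEXICON[letters]
    if gpc_intact:
        return "".join(GPC[letter] for letter in letters)
    return None


# Intact reader: the irregular word and the nonword are both read correctly.
print(read_aloud("pint"), read_aloud("bint"))  # paɪnt bɪnt
# Surface-dyslexia pattern: lexical route lesioned, so pint is regularized.
print(read_aloud("pint", lexical_intact=False))  # pɪnt
# Phonological-dyslexia pattern: GPC route lesioned, so known words survive but nonwords fail.
print(read_aloud("mint", gpc_intact=False), read_aloud("bint", gpc_intact=False))  # mɪnt None
```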

Physical Processes

Visual Perception

Eye movements can be tracked as indicators of cognitive processing during reading. While looking at objects, scanning a field of view, or reading text, the eyes flit back and forth in very fast movements called saccades. Saccades have a latency of 150-175 milliseconds, a planning delay implying that saccade control operates in concert with comprehension of the visual input. Cognitive processing focuses on the visual information taken in during the 200-300 millisecond fixations between saccades, since the eyes move too quickly for any visual information to be taken in during a saccade itself.[32] The purpose of these movements and fixations is to bring relevant visual information into the area of vision with the highest acuity. This region is the fovea, comprising the central 2° of the visual field, and it is surrounded by the parafoveal region (extending approximately 5° on either side of the point of fixation). Objects outside the parafoveal region fall into peripheral vision.
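The geometry above can be turned into a quick calculation of which letters fall in each region. The sketch assumes roughly 3 letters per degree of visual angle, a typical figure for normal reading distance; it changes with font size and viewing distance, so it is a parameter here. It treats the fovea as extending 1° either side of fixation and the parafovea to 5°.

```python
def visual_region(offset_letters, letters_per_degree=3.0):
    """Classify a letter by its distance (in letter spaces) from the fixation point.

    letters_per_degree is an assumed viewing condition, not a fixed constant.
    Fovea: central 2 degrees (up to 1 degree either side of fixation).
    Parafovea: up to about 5 degrees either side. Beyond that: periphery.
    """
    eccentricity = abs(offset_letters) / letters_per_degree
    if eccentricity <= 1.0:
        return "foveal"
    if eccentricity <= 5.0:
        return "parafoveal"
    return "peripheral"


print([visual_region(d) for d in (0, 3, 4, 15, 16)])
# ['foveal', 'foveal', 'parafoveal', 'parafoveal', 'peripheral']
```

Under this assumption only about three letters on each side of fixation are foveal, which is one reason readers must keep moving their eyes.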

Fixation durations in reading vary greatly depending on a variety of factors. Average fixations tend to be longer when reading aloud or following along with an audio track than when reading silently, since the eyes move more quickly than the voice, necessitating frequent pauses to let the voice catch up.[34] In normal reading of English text, fixations last 200-250 milliseconds on average, and the mean saccade size is 7-9 letter spaces. The reader acquires the necessary visual input within the first 50-70 milliseconds of fixating on a word, without sequential letter-by-letter scanning. The type of word being read affects the likelihood of fixation: content words are fixated 85% of the time, while function words (e.g. "the" and "and") are fixated only 35% of the time.[35][36] Probability of fixation also increases with word length: 2-3 letter words are fixated 25% of the time, while words with 8 or more letters are nearly always fixated. In addition, more frequent words are more likely to be skipped,[37] as are words whose possible meanings are constrained by the context of the sentence.[38] Between fixations, saccades generally move forward in the text. However, 10-15% are regressions: saccades may move backward a few letters to continue processing, or skip back by multiple words or sentences if the reader is re-evaluating the text. Visual processing may thus consist of both first-pass (initial) and second-pass processing. In general, increased text complexity results in longer fixations, shorter forward saccades, and more frequent regressions.[32]
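The measures mentioned here (fixation duration, forward saccade length, and the proportion of regressions) can be computed from a fixation record. A minimal sketch, assuming fixations are logged as character positions with durations in milliseconds; the sample record is invented for illustration.

```python
def scanpath_summary(positions, durations):
    """Summarize a fixation sequence.

    positions: character index of each successive fixation in the text.
    durations: fixation durations in milliseconds, in the same order.
    Zero-length moves (refixations on the same character) count as saccades
    but as neither forward moves nor regressions.
    """
    saccades = [b - a for a, b in zip(positions, positions[1:])]
    forward = [s for s in saccades if s > 0]
    regressions = [s for s in saccades if s < 0]
    return {
        "mean_fixation_ms": sum(durations) / len(durations),
        "mean_forward_saccade": sum(forward) / len(forward) if forward else 0.0,
        "regression_rate": len(regressions) / len(saccades) if saccades else 0.0,
    }


# Hypothetical five-fixation record with one regression (17 -> 14).
summary = scanpath_summary([2, 10, 17, 14, 25], [220, 240, 210, 260, 230])
print(summary["mean_fixation_ms"], summary["regression_rate"])  # 232.0 0.25
```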

The perceptual span refers to the size of the region of the visual field from which useful information is acquired. Readers of alphabetic languages that are read left to right show a perceptual span extending 3-4 letters to the left of fixation and 14-15 letters to the right.[39] The left boundary is defined by the beginning of the currently fixated word,[39] while the right boundary is defined more by the total number of letters.[40] This asymmetry is reversed in languages read right to left, such as Hebrew. The perceptual span is not identical to the area in which words can be positively identified, however: in alphabetic languages, the word identification span extends only 7-8 letters from the fixation point in the direction of reading, not the full 15-letter span of overall perception.[40][41] In fact, the most advantageous place for fixation within a word has been identified: it lies close to the centre of the word. This optimal viewing position is the location of minimal recognition delay: the further from it fixation occurs, the more likely a refixation becomes.[42]
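Perceptual-span estimates of this kind come from gaze-contingent moving-window experiments, in which text outside a window around the current fixation is masked. The sketch below renders such a display, assuming a window defined purely by letter counts (4 behind, 15 ahead, matching the figures above) and ignoring the word-boundary rule for the left edge; the masking character and the choice to keep spaces visible are illustrative assumptions.

```python
def moving_window(text, fixation, behind=4, ahead=15, mask="x"):
    """Mask every letter outside the window around the fixated character.

    text is in reading order, so for a right-to-left script stored in logical
    order the same call places the larger extent on the reader's left.
    Spaces are kept so that word boundaries stay visible.
    """
    first, last = fixation - behind, fixation + ahead
    return "".join(
        ch if first <= i <= last or ch == " " else mask
        for i, ch in enumerate(text)
    )


print(moving_window("the quick brown fox jumps", fixation=6))
# xxe quick brown fox juxxx
```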

The study of eye movements involved in reading can further understanding of the cognitive processing involved in deciphering text. For example, a study of Finnish readers in 2002 used eye tracking to describe different processing strategies for reading complex, multi-topic texts. Adults were given reading tasks and grouped according to the strategies used, defined by patterns of eye movement within the text. Fast linear readers generally read forward with very infrequent regressions, mostly focused on topic headings. Slow linear readers functioned in much the same way, but with relatively lower first-pass processing speed. Nonselective reviewers, in contrast, made many regressions to previous sentences, and their regressions were unrelated to the place in topic structure of the sentences being reread. Finally, topic structure processors focused most of their fixations on topic headings and topic-end sentences, and were shown to have the greatest working-memory capacity.[43] The differences between the groups illustrated a variety of functional reading strategies in use by adult readers.

Neural Basis


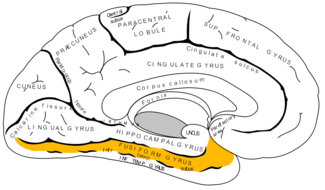

Consistent with the tendency toward left-hemisphere specialization in language processing, most of the brain areas showing activation during reading are located in the left hemisphere. Consistently activated areas include the frontal operculum and the posterior superior, middle, and inferior temporal gyri.[46] Many cortical areas are involved in the various sub-processes of reading, ranging from phonological processing to linguistic motor output, but two of the best known in terms of function and localization are the frontal operculum and the visual word form area.

The frontal operculum, located within Broca's area, on the ventral surface of Brodmann’s areas 44 and 45 in the left inferior frontal gyrus, has been implicated in specific reading processes. It shows consistently greater activation when reading pronounceable nonwords aloud in comparison to real words, implying a role in phonological processing, and is also more active when processing low-frequency words with little grapheme-phoneme consistency.[46] Indeed, patients with lesions of this particular area show acquired phonological dyslexia (preserved ability to read known words aloud, but severe deficits in pronouncing nonwords). As well, the lesioned patients show greater difficulty in response to low-frequency words with low spelling-sound consistency than to low-frequency, high-consistency words, consistent with previous results.[46]

The visual word form area (VWFA) is a left-lateralized inferotemporal area of the fusiform cortex that shows activation in response to visual word stimuli, and can be identified in nearly any literate person. Its activation is specific to linguistic input, and is not contingent upon spatial location, size, font, or case of presented words.[47] Activation also does not vary depending on stimulus presentation to the right or left visual field: visual word form information is directed in both cases to the VWFA.[48] Interestingly, the VWFA is equally activated by real words and by readable pseudowords, but shows less activation with visually similar consonant strings containing illegal letter combinations. Its response pattern is thus constrained by the orthographic rules of the reader's native language.[47] The VWFA is also the likely source of a known event-related potential (ERP) component, a sharp negative electrical spike over left inferior temporal electrodes at 180-200 milliseconds after word stimulus presentation.[48]

Chapter Summary


The ability to comprehend meaning in text depends on many different cognitive and physical abilities that develop in complexity over time. Young readers develop the association between words and meanings through phases that successively emphasize visual word features, phonological cues, and direct links between spelling and meaning. The skill of a mature reader depends not only on this learning process but also on the reader's early phonological awareness and the orthographic consistency of their native language.

Reading can be modeled in many ways, but the dual-route cascaded model and the connectionist model remain two of the most intriguing theories. Each has unique advantages, and they use contrasting methods to model word identification, reading aloud, and various forms of dyslexia. Such theories model cognitive processes, but physical correlates of online processing have also been studied, including eye movements, fixation patterns, and the span of perception during reading. Specific neural correlates have also been found for reading processes: the frontal operculum and the visual word form area are consistently activated in response to written linguistic stimuli.

Literacy is a relatively new skill in evolutionary time, but it is of paramount importance in most modern societies, built as they are around written communication. Increased reading skill is also correlated with a changed perception of how words are built, and with marked improvement on tests of grammatical ability and phonemic segmentation. Thus, the ability to read appears to shape the reader's relationship not only with the world around them, but also with their own native language.


References

- ↑ Chomsky, N. (1988). Language and problems of knowledge: the Managua lectures. Cambridge, Mass: MIT Press.

- ↑ Petitto, L. A., & Marentette, P. F. (1991). Babbling in the Manual Mode: Evidence for the Ontogeny of Language. Science, 251(5000), 1493-1496.

- ↑ 3.0 3.1 Jay, T. (2003). The Psychology of Language, Pearson Education, Upper Saddle River, New Jersey, USA.

- ↑ Olson, D. R. (1996). Towards a psychology of literacy: on the relations between speech and writing. Cognition, 60(1), 83-104.

- ↑ Morais, J., Alegria, J., & Content, A. (1987). The relationships between segmental analysis and alphabetic literacy: An interactive view. Cahiers de Psychologie Cognitive, 7, 415-438. Cited in Olson, 1996.

- ↑ Bertelson, P., de Gelder, B., Tfouni, L.V., & Morais, J. (1989). The metaphonological abilities of adult illiterates: New evidence of heterogeneity. European Journal of Cognitive Psychology, 1, 239-250. Cited in Olson, 1996.

- ↑ Read, C. (1971). Pre-school children's knowledge of English phonology. Harvard Educational Review, 41(1), 1-34. Cited in Olson, 1996.

- ↑ Karanth, P., & Suchitra, M.G. (1993). Literacy acquisition and grammaticality judgments in children. In R.J. Scholes (Ed.), Literacy and language analysis. Hillsdale, NJ: Erlbaum.

- ↑ Scholes, R.J. (1993). Utterance acceptability criteria: A follow-up to Karanth and Suchitra. In R.J. Scholes (Ed.), Literacy and language analysis. Hillsdale, NJ: Erlbaum.

- ↑ Mumtaz, S., & Humphreys, G. W. (2001). The effects of bilingualism on learning to read English: evidence from the contrast between Urdu-English bilingual and English monolingual children. Journal of Research in Reading, 24(2).

- ↑ Beech, J. R. (2005). Ehri's model of phases of learning to read: a brief critique. Journal of Research in Reading, 28(1), 50-58.

- ↑ Acha, J., Laka, I., & Perea, M. (2010). Reading development in agglutinative languages: Evidence from beginning, intermediate and adult Basque readers. Journal of Experimental Child Psychology, 105(4), 359-375.

- ↑ Frith, U. (1985). Beneath the surface of developmental dyslexia. In K.E. Patterson, J.C. Marshall, & M. Coltheart (Eds.), Surface Dyslexia (pp. 301–330). London: Erlbaum.

- ↑ 14.0 14.1 Ehri, L. C. (1994). Development of the Ability to Read Words: Update. Reading, 1-35.

- ↑ Masonheimer, P.E., Drum, P.A., & Ehri, L.C. (1984). Does environmental print identification lead children into word reading? Journal of Reading Behavior, 16, 257–271. Cited in Ehri, 1994.

- ↑ 16.0 16.1 Ehri, L.C., & Wilce, L.S. (1987a). Does learning to spell help beginners learn to read words? Reading Research Quarterly, 18, 47–65. Cited in Ehri, 1994.

- ↑ Ehri, L.C. (1992). Reconceptualizing the development of sight word reading and its relationship to recoding. In P.B. Gough, L.C. Ehri, & R. Treiman (Eds.), Reading acquisition (pp. 107–143). Hillsdale, NJ: Erlbaum. Cited in Ehri, 1994.

- ↑ Gough, P.B., & Hillinger, M.L. (1980). Learning to read: An unnatural act. Bulletin of the Orton Society, 30, 180–196. Cited in Ehri, 1994.

- ↑ Monaghan, E.J. (1983, April). A four-year study of the acquisition of letter-sound correspondences. Paper presented at the meeting of the American Educational Research Association, Montreal, Quebec, Canada. Cited in Ehri, 1994.

- ↑ 20.0 20.1 Marsh, G., Friedman, M., Welch, V., & Desberg, P. (1981b). A cognitive-developmental theory of reading acquisition. In G.E. Mackinnon & T.G. Waller (Eds.), Reading research: Advances in theory and practice (Vol. 3, pp. 199–221). New York: Academic. Cited in Ehri, 1994.

- ↑ Share, D.L. (1992). Phonological recoding and self-teaching: Sine qua non of reading acquisition. Unpublished manuscript, University of Haifa, School of Education, Haifa, Israel. Cited in Ehri, 1994.

- ↑ 22.0 22.1 de Jong, P. F., & van der Leij, A. (1999). Specific contributions of phonological abilities to early reading acquisition: Results from a Dutch latent variable longitudinal study. Journal of Educational Psychology, 91(3), 450-476. doi: 10.1037//0022-0663.91.3.450.

- ↑ Wagner, R. K., & Torgesen, J. K. (1987). The nature of phonological processing and its causal role in the acquisition of reading skills. Psychological Bulletin, 101(2), 192-212.

- ↑ Liberman, I.Y., Rubin, H., Duques, S., and Carlisle, J. 1985. Linguistic abilities and spelling proficiency in kindergarteners and adult poor spellers. In Biobehavioral Measures of Dyslexia, eds. D.B. Gray and J.F. Kavanagh. Parkton, MD: York Press. Cited in Badian, N.A. (1995). Predicting reading ability over the long term: The changing roles of letter naming, phonological awareness and orthographic processing. Annals of Dyslexia, 45(1), 79-96.

- ↑ Reitsma, P., & Verhoeven, L. (1990). Acquisition of written Dutch: An introduction. In P. Reitsma & L. Verhoeven (Eds.), Acquisition of reading in Dutch (pp. 1-13). Dordrecht, the Netherlands: Foris Publications. Cited in de Jong, 1999

- ↑ Seymour, P. H. K., Aro, M., & Erskine, J. M. (2003). Foundation literacy acquisition in European orthographies. British Journal of Psychology, 94, 143 – 174. Cited in Aro, 2003.

- ↑ Aro M and Wimmer H. Learning to read: English in comparison to six more regular orthographies. Applied Psycholinguistics. 2003, 24(04):621-635.

- ↑ Seidenberg MS and McClelland JL. A distributed, developmental model of word recognition and naming. Psychological Review. 1989, 96:523–568.

- ↑ Perry C, Ziegler JC, and Zorzi M. Beyond single syllables: Large-scale modeling of reading aloud with the Connectionist Dual Process (CDP++) model. Cognitive Psychology. 2010, 61(2):106-151.

- ↑ Coltheart, M. (1978). Lexical access in simple reading tasks. In G. Underwood (Ed.), Strategies of information processing (pp. 151-216). New York: Academic Press. Cited in Jay, 2003.

- ↑ 31.0 31.1 Coltheart M, Rastle K, Perry C, Langdon R, and Ziegler J. DRC: a dual route cascaded model of visual word recognition and reading aloud. Psychological Review. 2001, 108(1):204-56.

- ↑ 32.0 32.1 Rayner, K. (1998). Eye movements in reading and information processing: 20 years of research. Psychological Bulletin, 124(3), 372-422.

- ↑ Graphic attributed to Hans-Werner34 at en.wikipedia

- ↑ Lévy-Schoen, A. (1981). Flexible and/or rigid control of oculomotor scanning behavior. In D. F. Fisher, R. A. Monty, & J. W. Senders (Eds.), Eye movements: Cognition and visual perception (pp. 299- 316). Hillsdale, NJ: Erlbaum. Cited in Rayner, 1998.

- ↑ Carpenter, P. A., & Just, M. A. (1983). What your eyes do while your mind is reading. In K. Rayner (Ed.), Eye movements in reading: Perceptual and language processes (pp. 275-307). New York: Academic Press. Cited in Rayner, 1998

- ↑ Rayner, K., & Duffy, S. A. (1988). On-line comprehension processes and eye movements in reading. In M. Daneman, G. E. MacKinnon, & T. G. Waller (Eds.), Reading research: Advances in theory and practice (pp. 13-66). New York: Academic Press. Cited in Rayner, 1998.

- ↑ Rayner, K., Sereno, S. C., & Raney, G. E. (1996). Eye movement control in reading: A comparison of two types of models. Journal of Experimental Psychology: Human Perception and Performance, 22, 1188- 1200. Cited in Rayner, 1998.

- ↑ Rayner, K., & Well, A. D. (1996). Effects of contextual constraint on eye movements in reading: A further examination. Psychonomic Bulletin & Review, 3, 504-509. Cited in Rayner, 1998.

- ↑ 39.0 39.1 Rayner, K., Well, A. D., & Pollatsek, A. (1980). Asymmetry of the effective visual field in reading. Perception & Psychophysics, 27, 537-544. Cited in Rayner, 1998.

- ↑ 40.0 40.1 Rayner, K., Well, A. D., Pollatsek, A., & Bertera, J.H. (1982). The availability of useful information to the right of fixation in reading. Perception & Psychophysics, 31, 537-550. Cited in Rayner, 1998.

- ↑ McConkie, G. W., & Zola, D., (1987). Visual attention during eye fixations while reading. In M. Coltheart (Ed.), Attention and performance (Vol. 12, pp. 385-401). London: Erlbaum. Cited in Rayner, 1998.

- ↑ O'Regan, J. K., & Levy-Schoen, A. (1987). Eye movement strategy and tactics in word recognition and reading. In M. Coltheart (Ed.), Attention and performance: Vol. 12. The psychology of reading (pp. 363- 383). Hillsdale, NJ: Erlbaum. Cited in Rayner, 1998.

- ↑ Hyönä, J., Lorch, R. F., & Kaakinen, J. K. (2002). Individual Differences in Reading to Summarize Expository Text : Evidence From Eye Fixation Patterns. Journal of Educational Psychology, 94(1), 44 -55.

- ↑ http://commons.wikimedia.org/wiki/File:Ba44.png

- ↑ http://commons.wikimedia.org/wiki/File:Brodmann_area_45.png

- ↑ 46.0 46.1 46.2 Fiez JA, Tranel D, Seager-Frerichs D, and Damasio H. Specific reading and phonological processing deficits are associated with damage to the left frontal operculum. Cortex, 2006. 42:624-643.

- ↑ 47.0 47.1 Cohen L, Lehéricy S, Chochon F, Lemer C, Rivaud S, and Dehaene S. Language-specific tuning of visual cortex? Functional properties of the Visual Word Form Area. Brain. 2002, 125(5):1054-69.

- ↑ 48.0 48.1 Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff M, and Michel F. The visual word form area: Spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000, 123(2):291-307.