Statistics/Introduction

Examples

- 46% of people polled enjoy vanilla, while 54% prefer chocolate (+/-4% margin of error).

- A school's graduation rate has increased by 2%.

- A couple has 4 boys, and they are pregnant again: what is their chance of having another boy?

- 88% of people questioned feel that it is humane to put stray animals to sleep.

These are basic examples of statistics we see every day, but do we really understand what they mean? With the study of statistics, these 'facts' that we hear every day can hopefully become a little more clear.

Introduction

Statistics is permeated by probability. An understanding of basic probability is critical for understanding the mathematical underpinnings of statistics. Strictly speaking, the word 'statistics' means one or more measures describing the characteristics of a population. We use the term here in a more idiomatic sense to mean everything to do with sampling and the establishment of population measures.

Most statistical procedures use probability to make a statement about the relationship between the independent variables and the dependent variables. Typically, the question one attempts to answer using statistics is whether there is a relationship between two variables. To demonstrate that there is a relationship, the experimenter must show that when one variable changes the second variable changes, and that the amount of change is more than would be likely from chance alone.

There are two ways to determine the probability of an event. The first is to do a mathematical calculation to determine how often the event can happen. The second is to observe how often the event happens, by counting the number of times the event could happen and also counting the number of times the event actually does happen.

The first approach is what lets a person say that the chance of rolling a one on a six-sided die is one in six. The probability is found by counting the number of ways the event can happen and dividing that number by the total number of equally likely possible outcomes. Another example: in a well-shuffled deck of cards, what is the probability of drawing a three? The answer is four in fifty-two, since there are four cards numbered three and a total of fifty-two cards in a deck. The chance of drawing a card in the suit of diamonds is thirteen in fifty-two (there are thirteen cards in each of the four suits). The chance of drawing the three of diamonds is one in fifty-two.
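The counting argument above can be checked by enumerating the outcomes directly. The following is a minimal sketch, assuming a standard 52-card deck and a fair die; the helper name `classical_probability` is introduced here for illustration only.

```python
from fractions import Fraction
from itertools import product

# A standard deck: 13 ranks in each of 4 suits (assumed, as in the text).
RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["clubs", "diamonds", "hearts", "spades"]
deck = list(product(RANKS, SUITS))  # 52 (rank, suit) pairs


def classical_probability(event, outcomes):
    """Ways the event can happen divided by the number of equally likely outcomes."""
    outcomes = list(outcomes)
    favourable = sum(1 for outcome in outcomes if event(outcome))
    return Fraction(favourable, len(outcomes))


p_die_one = classical_probability(lambda face: face == 1, range(1, 7))
p_three = classical_probability(lambda card: card[0] == "3", deck)
p_diamond = classical_probability(lambda card: card[1] == "diamonds", deck)
p_three_of_diamonds = classical_probability(
    lambda card: card == ("3", "diamonds"), deck
)

# Fraction reduces automatically, so 4/52 is stored as 1/13.
print(p_die_one, p_three, p_diamond, p_three_of_diamonds)
```

Exact fractions are used instead of floating-point numbers so that the results can be compared directly with the hand counts in the text.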

Sometimes, the size of the total event space, the number of different possible events, is not known. In that case, you will need to observe the event system and count the number of times the event actually happens versus the number of times it could happen but doesn't.

For instance, a warranty for a coffee maker is a probability statement. The manufacturer calculates that the probability the coffee maker will stop working before the warranty period ends is low. The way such a warranty is calculated involves testing the coffee maker to calculate how long the typical coffee maker continues to function. Then the manufacturer uses this calculation to specify a warranty period for the device. The actual calculation of the coffee maker's life span is made by testing coffee makers and the parts that make up a coffee maker and then using probability to calculate the warranty period.

Experiments, Outcomes and Events

The easiest way to think of probability is in terms of experiments and their potential outcomes. Many examples can be drawn from everyday experience: On the drive home from work, you can encounter a flat tire, or have an uneventful drive; the outcome of an election can include either a win by candidate A, B, or C, or a runoff.

Definition: The entire collection of possible outcomes from an experiment is termed the sample space, denoted $\Omega$ (Omega).

The simplest (albeit uninteresting) example would be an experiment with only one possible outcome, say $\omega_0$. From elementary set theory, we can express the sample space as follows:

$$\Omega = \{\omega_0\}$$

A more interesting example is the result of rolling a six-sided die. The sample space for this experiment is:

$$\Omega = \{1, 2, 3, 4, 5, 6\}$$

We may be interested in events in an experiment.

Definition: An event is some subset of outcomes from the sample space

In the dice example, events of interest might include

a) the outcome is an even number

b) the outcome is less than three

These events can be expressed in terms of the possible outcomes from the experiment:

a) $A = \{2, 4, 6\}$

b) $B = \{1, 2\}$

We can borrow definitions from set theory to express events in terms of outcomes. Here is a refresher of some terminology, and some new terms that will be important later:

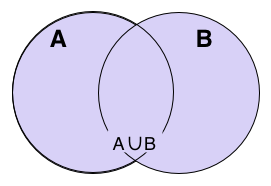

$A \cup B$ represents the union of two events

$A \cap B$ represents the intersection of two events

$A^c$ represents the complement of an event. For instance, "the outcome is an even number" is the complement of "the outcome is an odd number" in the dice example.

$A \setminus B$ represents difference, that is, $A$ but not $B$. For example, we may be interested in the event of drawing the queen of spades from a deck of cards. This can be expressed as the event of drawing a queen, but not drawing a queen of hearts, diamonds or clubs.

$\emptyset$ or $\{\}$ represent an impossible event

$\Omega$ represents a certain event

$A$ and $B$ are called disjoint events if $A \cap B = \emptyset$
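These set operations map directly onto Python's built-in set type. The sketch below uses the die example, with `A` standing for "the outcome is an even number" and `B` for "the outcome is less than three"; the variable names are chosen here for illustration.

```python
# Sample space for one roll of a six-sided die.
omega = frozenset(range(1, 7))

A = frozenset({2, 4, 6})  # the outcome is an even number
B = frozenset({1, 2})     # the outcome is less than three

union = A | B             # outcomes in A or B (or both)
intersection = A & B      # outcomes in both A and B
complement_A = omega - A  # outcomes not in A: the odd numbers
difference = A - B        # outcomes in A but not in B
impossible = frozenset()  # the empty set: an impossible event


def are_disjoint(x, y):
    """Two events are disjoint when they share no outcomes."""
    return x.isdisjoint(y)


print(sorted(union), sorted(intersection), sorted(complement_A), sorted(difference))
print(are_disjoint(A, B), are_disjoint(A, complement_A))
```

Here `A` and `B` are not disjoint, because the outcome 2 belongs to both, while an event and its complement are always disjoint.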

Probability

Now that we know what events are, we should think a bit about a way to express the likelihood of an event occurring. The relative-frequency definition of probability comes from the following. If we can perform our experiment over and over in a way that is repeatable, we can count the number of times that the experiment gives rise to event $A$. We also keep track of the number of times that we perform the same experiment. If we repeat the experiment a large enough number of times, we can express the probability of event $A$ as follows:

$$P(A) = \frac{n_A}{N}$$

where $n_A$ is the number of times event $A$ occurred, and $N$ is the number of times the experiment was repeated. Therefore the equation can be read as "the probability of event $A$ equals the number of times event $A$ occurs divided by the number of times the experiment was repeated (or the number of times event $A$ could have occurred)." As $N$ approaches infinity, the fraction above approaches the true probability of the event $A$. Since $0 \le n_A \le N$, the value of $P(A)$ is clearly between 0 and 1. If our event is the certain event $\Omega$, then each time we perform the experiment, the event is observed; $n_\Omega = N$ and $P(\Omega) = 1$. If our event is the impossible event $\emptyset$, we know $n_\emptyset = 0$ and $P(\emptyset) = 0$.
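The ratio of occurrences to trials can be watched settling down in a simulation. The following is a rough sketch, assuming a fair six-sided die simulated with Python's pseudo-random generator; the function names and the fixed seed are choices made here for illustration and reproducibility.

```python
import random


def roll_die(rng):
    """One experiment: roll a fair six-sided die."""
    return rng.randint(1, 6)


def is_even(outcome):
    """The event of interest: the outcome is an even number."""
    return outcome % 2 == 0


def relative_frequency(event, experiment, n_trials, seed=0):
    """Times the event occurred divided by the number of trials."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    n_event = sum(1 for _ in range(n_trials) if event(experiment(rng)))
    return n_event / n_trials


for n_trials in (10, 100, 1_000, 100_000):
    print(n_trials, relative_frequency(is_even, roll_die, n_trials))
```

With few trials the ratio can be far from one half; as the number of trials grows it tends to settle near 0.5, the value obtained by counting outcomes.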

If $A$ and $B$ are disjoint events, then whenever event $A$ is observed, it is impossible for event $B$ to be observed simultaneously. Therefore the number of times the event $A \cup B$ occurs, $n_{A \cup B}$, is equal to the number of times $A$ occurs, $n_A$, plus the number of times $B$ occurs, $n_B$. This can be expressed as:

$$n_{A \cup B} = n_A + n_B$$

Given our definition of probability, we can arrive at the following:

$$P(A \cup B) = P(A) + P(B)$$

At this point it's worth remembering that not all events are disjoint events. For events that are not disjoint, we end up with the following probability definition.

$$P(A \cup B) = P(A) + P(B) - P(A \cap B)$$

How can we see this from example? Well, let's consider drawing from a deck of cards. I'll define two events: $A$, "drawing a queen", and $B$, "drawing a spade". It is immediately clear that these are not disjoint events, because you can draw a queen that is also a spade. There are four queens in the deck, so if we perform the experiment of drawing a card, putting it back in the deck and shuffling (what statisticians refer to as sampling with replacement), we will end up with a probability of $\frac{4}{52}$ for a queen draw. By the same argument, we obtain a probability for drawing a spade as $\frac{13}{52}$. The expression $P(A \cup B)$ here can be translated as "the chance of drawing a queen or a spade". If we incorrectly assume that for this case $P(A \cup B) = P(A) + P(B)$, we can simply add our probabilities together for "the chance of drawing a queen or a spade" as $\frac{4}{52} + \frac{13}{52} = \frac{17}{52}$. If we were to gather some data experimentally, we would find that our results would differ from the prediction: the probability observed would be slightly less than $\frac{17}{52}$. Why? Because we're counting the queen of spades twice in our expression, once as a spade, and again as a queen. We need to count it only once, as it can only be drawn with probability of $\frac{1}{52}$. Still confused?

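Proof: If $A$ and $B$ are not disjoint, we have to avoid the double counting problem by exactly specifying their union.

$$A \cup B = (A \setminus B) \cup (A \cap B) \cup (B \setminus A)$$

so

$$A \cup B = A \cup (B \setminus A)$$

$A$ and $B \setminus A$ are disjoint sets. We can then use the definition of disjoint events from above to express our desired result:

$$P(A \cup B) = P(A) + P(B \setminus A)$$

We also know that

$$B = (B \setminus A) \cup (A \cap B), \qquad P(B) = P(B \setminus A) + P(A \cap B)$$

so

$$P(A \cup B) = P(A) + P(B) - P(A \cap B)$$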

Whew! Our first proof. I hope that wasn't too dry.
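The double-counting argument can also be verified by brute force on the card example. The sketch below assumes a standard 52-card deck and compares the naive sum, the corrected formula, and a direct count of the union; the names `queens`, `spades` and `prob` are introduced here for illustration.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["clubs", "diamonds", "hearts", "spades"]
deck = set(product(RANKS, SUITS))

queens = {card for card in deck if card[0] == "Q"}       # 4 cards
spades = {card for card in deck if card[1] == "spades"}  # 13 cards


def prob(event):
    """Probability of an event when every card is equally likely."""
    return Fraction(len(event), len(deck))


naive = prob(queens) + prob(spades)                              # counts the queen of spades twice
corrected = prob(queens) + prob(spades) - prob(queens & spades)  # subtracts the overlap once
direct = prob(queens | spades)                                   # counts the union directly

print(naive, corrected, direct)
```

The corrected value and the direct count agree at 16/52, which Python reduces to 4/13, while the naive sum is too large by exactly 1/52.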

Conditional Probability

Many events are conditional on the occurrence of other events. Sometimes this coupling is weak. One event may become more or less probable depending on our knowledge that another event has occurred. For instance, the probability that your friends and relatives will call asking for money is likely to be higher if you win the lottery. In my case, I don't think this probability would change.

Let's get formal for a second and remember our original definition of probability.

$$P(A) = \frac{n_A}{N}$$

Consider an additional event $B$, and a situation where we are only interested in the probability of the occurrence of $A$ when $B$ occurs. A way to get at this probability is to perform a set of experiments (trials) and only record our results when the event $B$ occurs. In other words

$$\frac{n_{A \cap B}}{n_B}$$

We can divide through on top and bottom by the total number of trials $N$ to get $\frac{n_{A \cap B}/N}{n_B/N} = \frac{P(A \cap B)}{P(B)}$. We define this as 'conditional probability':

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}$$

which when spoken, takes the sound "probability of $A$ given $B$." The definition requires $P(B) > 0$.
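A small worked case may help. The sketch below returns to the die example and computes the probability that the outcome is less than three given that it is even, using the ratio of the probability of the intersection to the probability of the conditioning event; the helper names are illustrative.

```python
from fractions import Fraction

OMEGA = frozenset(range(1, 7))  # one roll of a fair six-sided die


def prob(event):
    """Probability of an event when all six faces are equally likely."""
    return Fraction(len(event & OMEGA), len(OMEGA))


def conditional(a, b):
    """Probability of a given b: P(a and b) divided by P(b)."""
    if prob(b) == 0:
        raise ValueError("cannot condition on an event with probability zero")
    return prob(a & b) / prob(b)


even = frozenset({2, 4, 6})
less_than_three = frozenset({1, 2})

print(conditional(less_than_three, even))  # only the outcome 2 lies in both events
print(conditional(even, less_than_three))
```

Of the three even outcomes only one is less than three, so the first value is 1/3; of the two outcomes less than three only one is even, so the second is 1/2. The two conditional probabilities are generally not equal.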

Bayes' Law

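An important theorem in statistics is Bayes' Law, which states that if we segment the probability space into disjoint events $A_1, A_2, \ldots, A_n$, then

$$P(A_i \mid B) = \frac{P(B \mid A_i)\, P(A_i)}{\sum_{j=1}^{n} P(B \mid A_j)\, P(A_j)}$$

As a proof, first note that since $A_1, A_2, \ldots, A_n$ are disjoint and together cover the whole space, then

$$P(B) = \sum_{j=1}^{n} P(B \cap A_j) = \sum_{j=1}^{n} P(B \mid A_j)\, P(A_j)$$

The theorem follows then by substituting into the definition of conditional probability

$$P(A_i \mid B) = \frac{P(A_i \cap B)}{P(B)} = \frac{P(B \mid A_i)\, P(A_i)}{\sum_{j=1}^{n} P(B \mid A_j)\, P(A_j)}$$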

For a more detailed explanation, see http://en.wikipedia.org/wiki/Bayes'_theorem
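As a numerical illustration of Bayes' Law, the sketch below uses entirely hypothetical figures: two factories produce coffee makers, the first making 3/5 of all units with a defect rate of 1/50, the second making 2/5 with a defect rate of 1/20. Neither the scenario nor the numbers come from the text; the function name `bayes_posterior` is also an assumption made here.

```python
from fractions import Fraction


def bayes_posterior(priors, likelihoods):
    """Return P(A_i | B) for each i, given P(A_i) and P(B | A_i).

    The events A_i are assumed to be disjoint and to cover the whole space,
    so their prior probabilities must sum to 1.
    """
    if sum(priors) != 1:
        raise ValueError("priors must sum to 1")
    joint = [p * l for p, l in zip(priors, likelihoods)]  # P(B and A_i)
    total = sum(joint)                                    # P(B)
    return [j / total for j in joint]


# Hypothetical numbers, for illustration only.
priors = [Fraction(3, 5), Fraction(2, 5)]         # share of production per factory
likelihoods = [Fraction(1, 50), Fraction(1, 20)]  # defect rate per factory

posterior = bayes_posterior(priors, likelihoods)
print(posterior)
```

Under these made-up figures a defective unit comes from the first factory with probability 3/8 and from the second with probability 5/8, even though the first factory produces more units overall.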

Independence

Two events $A$ and $B$ are called independent if the occurrence of one has absolutely no effect on the probability of the occurrence of the other. Mathematically, this is expressed as:

$$P(A \cap B) = P(A)\, P(B)$$
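The product condition is easy to test for events on a finite sample space. The following sketch checks two pairs of events on a fair six-sided die; the event names and the helper `are_independent` are chosen here for illustration.

```python
from fractions import Fraction

OMEGA = frozenset(range(1, 7))  # one roll of a fair six-sided die


def prob(event):
    """Probability of an event when all six faces are equally likely."""
    return Fraction(len(event & OMEGA), len(OMEGA))


def are_independent(a, b):
    """True when the probability of the intersection equals the product."""
    return prob(a & b) == prob(a) * prob(b)


even = frozenset({2, 4, 6})
less_than_three = frozenset({1, 2})
less_than_four = frozenset({1, 2, 3})

print(are_independent(even, less_than_three))  # 1/6 compared with 1/2 * 1/3
print(are_independent(even, less_than_four))   # 1/6 compared with 1/2 * 1/2
```

The first pair is independent, since knowing the roll is less than three leaves the chance of an even number at one half. The second pair is not, since only one of the three outcomes below four is even.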

Random Variables

It's usually possible to represent the outcome of experiments in terms of integers or real numbers. For instance, in the case of conducting a poll, it becomes a little cumbersome to present the outcomes of each individual respondent. Let's say we poll ten people for their voting preferences (Republican - R, or Democrat - D) in two different electoral districts. Our results might look like this:

and

But we're probably only interested in the overall breakdown in voting preference for each district. If we assign an integer value to each outcome, say 0 for Democrat and 1 for Republican, we can obtain a concise summary of voting preference by district simply by adding the results together.
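The encoding described above can be sketched in a few lines. The poll responses below are invented purely for illustration (ten respondents per district) and are not data from the text; the mapping of 0 for Democrat and 1 for Republican follows the paragraph above.

```python
# Hypothetical responses, one letter per respondent (made up for illustration).
district_1 = "DRDDDRDDRD"
district_2 = "RRDRRRDRRD"

# The random variable assigns a number to each outcome: 0 for D, 1 for R.
ENCODING = {"D": 0, "R": 1}


def republican_count(responses):
    """Sum the encoded responses; the total is the number of Republican votes."""
    return sum(ENCODING[response] for response in responses)


for name, responses in (("district 1", district_1), ("district 2", district_2)):
    total = republican_count(responses)
    print(name, total, "of", len(responses), "prefer Republican")
```

Adding the encoded values turns ten individual outcomes into a single number per district, which is the concise summary the text describes.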

Discrete and Continuous Random Variables

There are two important subclasses of random variables: discrete random variables (DRV) and continuous random variables (CRV). Discrete random variables take only countably many values. This means that we can list the set of all possible values that a discrete random variable can take; the list may be finite or countably infinite (for example, the non-negative integers). If the possible values that a DRV $X$ can take are $a_0, a_1, a_2, \ldots, a_n$, the probability that $X$ takes each is $p_0 = P(X = a_0)$, $p_1 = P(X = a_1)$, $p_2 = P(X = a_2)$, ..., $p_n = P(X = a_n)$. All these probabilities are greater than or equal to zero, and together they sum to 1.
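As a concrete discrete random variable, consider the sum of two fair six-sided dice (an example chosen here, not taken from the text). The sketch below lists every possible value together with its probability and checks the two properties just stated.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two fair dice.
rolls = list(product(range(1, 7), repeat=2))

# X is the sum of the two faces; count how many outcomes give each value.
counts = Counter(first + second for first, second in rolls)

# The probabilities P(X = a) for each possible value a.
pmf = {value: Fraction(count, len(rolls)) for value, count in sorted(counts.items())}

for value, probability in pmf.items():
    print(value, probability)

print(all(p >= 0 for p in pmf.values()), sum(pmf.values()))
```

The variable takes the eleven values 2 through 12, every probability is non-negative, and the probabilities add up to 1.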

For continuous random variables, we cannot list all possible values because the variable can take any value in an interval of real numbers, and such a set is uncountably infinite. There is no use in calculating the probability of each value separately, because the probability that the variable takes any particular value is zero: $P(X = x) = 0$. Probabilities are instead assigned to ranges of values, such as $P(a \le X \le b)$.
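To see why single values carry zero probability, one can shrink an interval around a point. The sketch below assumes a variable uniformly distributed on the interval from 0 to 1, a distribution picked here only because its interval probabilities are simple to compute; the function name is illustrative.

```python
def uniform_interval_probability(a, b, low=0.0, high=1.0):
    """P(a <= X <= b) for X uniformly distributed on [low, high]."""
    a, b = max(a, low), min(b, high)  # clip the interval to the support
    return max(b - a, 0.0) / (high - low)


# Shrink an interval centred on 0.5 until it becomes the single point 0.5.
for width in (0.5, 0.1, 0.001, 0.000001, 0.0):
    lower, upper = 0.5 - width / 2, 0.5 + width / 2
    print(width, uniform_interval_probability(lower, upper))
```

The probability equals the width of the interval, so it falls toward zero as the interval narrows and is exactly zero for a single point.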

Distribution Functions

Exercise: show that $P(A \setminus B) = P(A) - P(A \cap B)$, which reduces to $P(A) - P(B)$ when $B$ is a subset of $A$.

Expectation Values

See also

- Topic:Statistics

- Introduction to research

- Statistical Economics

- Topic:Actuarial mathematics

- Introduction to Likelihood Theory

- Introduction to Classical Statistics

- Wikiversity:Statistics 202

- Introduction to probability and statistics

- Bayesian Statistics

- Statistics for Business Decisions

- Wikiversity:Statistics