OpenRefine to Wikibase: Data Upload Pipeline

Guidelines by Lozana Rossenova and Lucia Sohmen

This guidelines document was prepared at TIB’s Open Science Lab (OSL) alongside the development of an MVP (minimum viable product) for the enrichment of 3D media files with semantic metadata within the context of Task Area 1: Data Capture and Enrichment of the NFDI4Culture consortium. The MVP builds on several existing FOSS tools:

- OpenRefine, a data cleaning, reconciliation and batch upload tool;

- Wikibase, a suite of services developed by Wikimedia Germany; it combines the ability to handle large volumes of data points within a linked open data environment, which includes sophisticated data querying and extraction services via a dedicated SPARQL endpoint;

- Kompakkt, a browser-based open-source 3D- and multimedia viewer Kompakkt with built-in collaborative annotation features.

The integrated suite of tools follows FAIR principles and facilitates linking 3D-objects and annotations, and their cultural context (including historical people and places, geo-location and capture-technology metadata), to the broader semantic web and various national and international authority records (GND, VIAF and more).

This guide focuses on the first two tools part of the toolchain: OpenRefine and Wikibase. The following sections present a complete workflow: from starting to collect and structure data in spreadsheets, to transforming and reconciling data via OpenRefine, and finally to reviewing the different options for uploading your data to a Wikibase instance.

Data collection

[edit | edit source]You should start thinking about how your data should be prepared for a linked data environment while you are collecting it. This can save redundant work later on when you are transforming and preparing your data for upload. A common format for data collection is a tabular or spreadsheet format – either prepared in MS-Excel, or a different software application that allows for CSV (comma-separated values) output. However, if the data you want to work with is already stored in an existing content management system or data repository, you will have to extract this data in a format supported by OpenRefine. Supported formats include: TSV, CSV, *SV, Excel (.xls and .xlsx), JSON, XML, RDF as XML, and Google Data documents.

Preparing your data: When collecting and preparing data to be uploaded to a machine-readable database, there are several principles that are important to keep in mind.

Entity simplicity

[edit | edit source]To take maximum advantage of the affordances of the semantic environment of Wikibase, entities in your dataset should be kept relatively simple. Entities can refer to specific people, organisations, objects, places, events, and more. The entity serves as the hook that can be queried for. The richness of connections across entities in your dataset creates the conditions for useful querying. Highly specific details that are relevant to only one entity in the dataset may be less useful to record as part of the machine-readable dataset. However, every mention of an entity could be referenced to a particular textual narrative source, where richer detail may be provided.

Thinking in triples

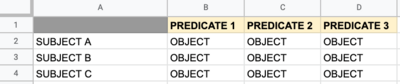

[edit | edit source]If you have been collecting data in spreadsheets or have exported data from an existing database in spreadsheet format, you will notice that the spreadsheet is a flat table without the capacity to represent rich network connections. However, the rows and columns of a spreadsheet can be reimagined as triple relationships in order to make the data modeling and eventual data upload process easier.[1] Semantic triples are the backbone of Linked Open Data environments. A triple is a data statements that consists of three parts: a subject, a predicate, and an object. Subjects are entities in your dataset. Predicates are the semantic links that connect an entity to another relevant entity, or a different piece of data, such as a date, a media file, a URL address, and more.

Thinking in triples while collecting and preparing your dataset means that the data you collect can already be interpreted as a set of relations between a set of entities and a set of related values. If you are collecting data in a spreadsheet, the first column would serve as the primary ‘subject’ of the triple (also referred to as ‘item’ in Wikibase terms). The heading of each following column can serve as the ‘predicate’ or the link (also referred to as ‘property’ in Wikibase terms) in the triple. Finally, the value of each cell in these further columns can serve as the “object” to be linked to the subject (in Wikibase objects can be other items, plain text, or numerical or temporal data, among other data types). (See illustration below.)

[1] If the data you have exported from an existing database is structured in an XML or JSON file, see section 3. Data transformation and reconciliation for more information on how to render such files as spreadsheets in OpenRefine.

One entity per field

[edit | edit source]For both subjects and objects, each field, or cell, in the spreadsheet should only hold a single entity. For example, instead of listing all contributors involved in the production of a work of art in the same cell in a column for contributors; each contributor name should be listed in a separate cell, in an adjacent column, and the column header can be repeated as many times as necessary. Predicates and Objects can be repeated within and across subjects as many times as necessary. Only subjects (in this case, in column A) must remain unique within a given spreadsheet.

One sheet per entity type

[edit | edit source]The more heterogeneous the data in a spreadsheet, the more difficult data reconciliation and upload will be in later stages of the workflow, so keeping a particular type of entity in the same spreadsheet will make the process more efficient later. For example, separate spreadsheets should be used to collect data for people, artworks, events, etc. What counts as ‘subjects’ in one spreadsheet may become ‘objects’ in another.

Descriptions before data

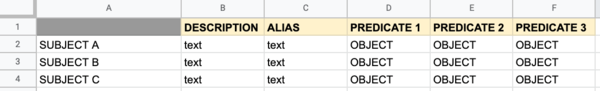

[edit | edit source]Lastly, there is a particularity to the Wikidata/ Wikibase linked data model that is worth noting, even if it is not relevant to other linked data workflows. Each entity in Wikidata/ Wikibase has several ways of being identified besides its title (or label). These include: a unique ID number (starting with the letter Q followed by a unique combination of digits), which is generated automatically upon the creation of the entity; a short description (250 characters), which is used to disambiguate across entities with the same title or label (e.g. the city of Paris in France vs the city of Paris in Texas, US); and finally a list of alternate titles or aliases (e.g. NYC for the city of New York). The unique ID number, in combination with the short description, allow for entities to have the exact same label (or title) and at the same time to be unique, clearly distinguishable entities in the linked data database.

If collecting data in a spreadsheet, the two columns following the first column defining the ‘subject’ of the triples, could be dedicated to the description and alias fields to be associated with each ‘subject’ entity during upload to Wikibase. This makes the upload process more efficient, and also allows one to quickly distinguish between entities bearing the same title. (See illustration below.)

Data modeling

[edit | edit source]Identifying core entity types and relations

[edit | edit source]Ideally, you will be thinking about your overarching data model already during data collection. Identifying your core entities and the kinds of relationships you want to represent in your dataset can be helpful while you are preparing your data in spreadsheets. You would want to create separate sheets for the core types of entities – e.g. people, artworks, events – that will need to be uploaded into Wikibase. These entity types will become classes of items in your Wikibase. Next you will also need to think about the other types of data you can collect around the core classes, e.g. for people you might collect date and place of birth, for artworks you might collect dates of creation, as well as creators. These can easily be expressed as triples if ‘date of birth’ or ‘place of birth’ are used as predicates, and associated values are used as objects in a triple statement in relation to a specific person. If you are not collecting data from scratch, but rather working with existing data that is stored in a relational database and can be exported in a format like CSV, XML, or JSON, then you can still go through the process of identifying types of entities and relations, and making sure that these are represented accordingly as columns / rows within separate projects per entity type once you load the data into OpenRefine.

Following existing schemas and vocabularies

[edit | edit source]Data modeling can be a complex process with a sharp learning curve. We recommend that data modeling is done as a collaborative process between domain specialists and data architects with experience in LOD environments, and Wikibase specifically. In addition, we recommend the (re)use of existing schemas and vocabularies as much as possible.

Sometimes this process can be straightforward, e.g. if you want to link a specific artwork to its entity type – the class ‘artwork’ – you can do so with the property ‘instance of’ which is widely used in the Wikibase community as equivalent to the ‘rdf:type’ property from the standard RDF schema. There are standard schemas and vocabularies, such as RDF, OWL, or SKOS, upon which to develop ontologies applicable across the LOD environment. And then there are standardized domain specific ontologies like CIDOC-CRM, as well as domain specific vocabularies for art and culture, such as Getty’s AT&T. While it is currently not possibly to directly use a standard schema or vocabulary in your Wikibase instance (something that the Wikibase community are working to change), you can still take guidance from standards with regards to what properties are typically associated with a specific domain, and what vocabularies can be used to constrain the values of a dataset. Following standards will make querying and reusing the data in the future easier.

Last but not least, if you intend to connect your data to Wikidata either for enrichment or federation purposes further down the line, it may be helpful to also look up relevant domain-specific WikiProject pages. This is where data models used within specific domains on Wikidata are documented. There is an index of the available pages related to cultural projects in Category:Cultural_WikiProjects.

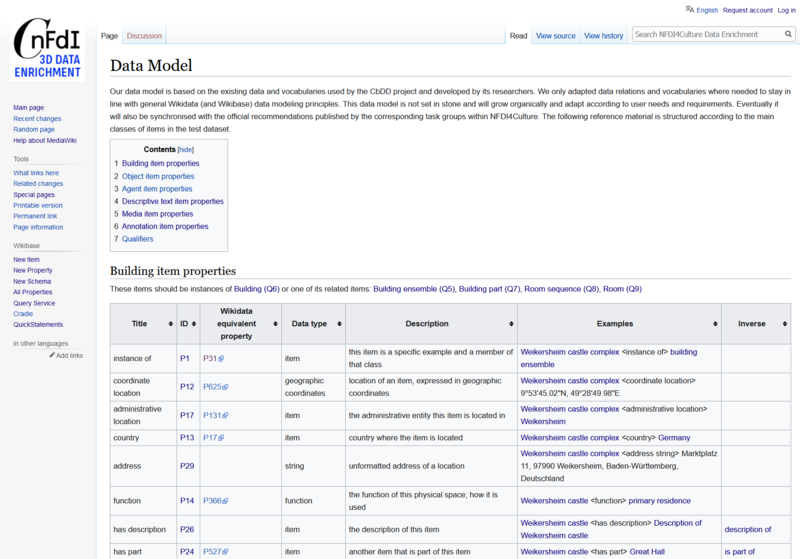

Documentation

[edit | edit source]There are many ways to document your data model, e.g. quick diagrams and sketches can be kept throughout the working process. Although the data modelling process in Wikibase can be fairly organic, with properties and classes growing over time, or changing if needed, it is recommended to have a working model before you start the data upload. Once you are confident you have a data model that meets the needs of your use case, you can also create a documentation page for it in your Wikibase. This page can follow the example of WikiProject pages, and include tables of all properties and data relations to be used in the Wikibase, divided according to relevant entity type, or class. See for example the data model for TIB’s MVP Wikibase (illustrated below):

Data transformation and reconciliation

[edit | edit source]The following guide is structured as a step-by-step tutorial for OpenRefine, however this is not meant to be a comprehensive resource. There are other extensive documents and online tutorials that cover more of the functionalities of OpenRefine. In this guide, we are focusing primarily on operations that help transform cultural heritage data so that it can be easily uploaded to Wikibase.

Install OpenRefine

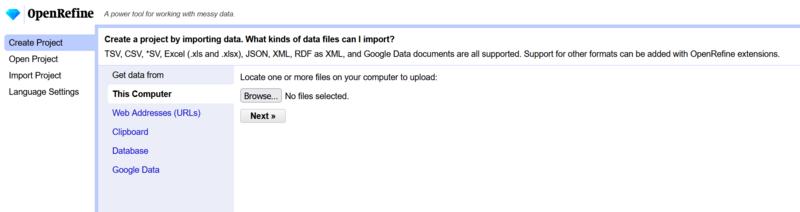

[edit | edit source]To begin with, download OpenRefine 3.5.0 here and follow the installation guidelines. Load data into OpenRefine

NB: You cannot add new rows to tabular data once you create a project in OpenRefine. If you need to add more rows to account for new data, you will need to create a new project.

1. When starting OpenRefine, you will be greeted with the “Create Project” page. You are presented with all the different options for data input. Choose one method and upload your data. Then click “Next”.

2. There are a few different options for creating your project:

2.1. You can specify what type of file you uploaded, if that was not detected automatically.

2.2. Make sure the encoding is correct.

2.3. For CSV files, you should specify how columns are separated and whether the file starts with the column names or the first row (in that case, tick “column names” and input the names you want).

2.4. Have a look if you need any of the other options

3. In the top right corner, click on “Create project”.

Transform to follow data model

[edit | edit source]1. Delete unnecessary columns:

1.1. If your data is very complex, it can be helpful to delete columns that are not needed.

1.1. If you are not sure what a column contains, you can click the arrow above the column → Facet → Text facet to get a quick overview of all values.

2. Transform cells and columns: There are many different ways to transform columns. The following will introduce some of them. Find more here.

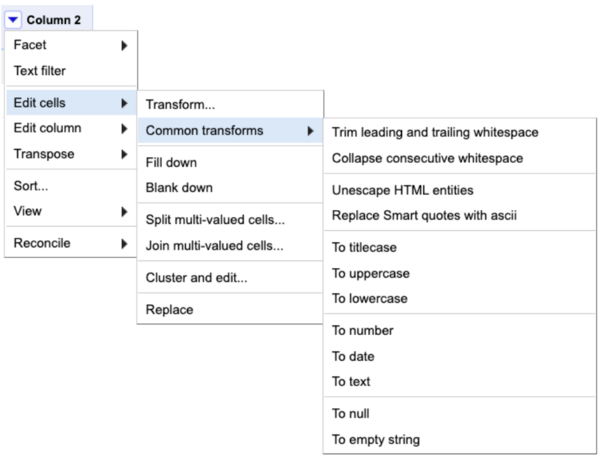

2.1. Click the arrow above the column → Edit cells → Common transforms. This will give some options for quick transformations that you do not need to configure.

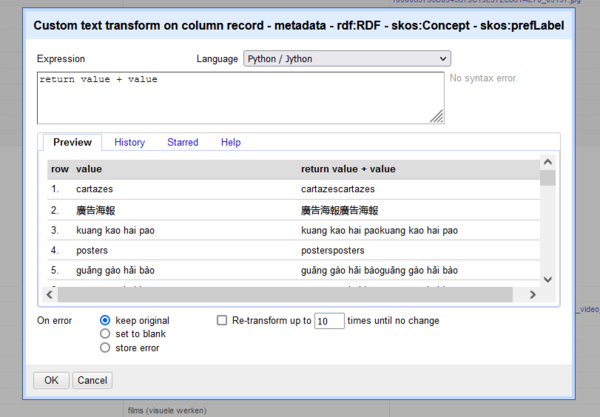

2.2. Click the arrow above the column → Edit cells → Transform... This will open a dialog window where you can use different languages to transform your values. Unfortunately, this can become very complex if you do not have programming experience. Clicking on the “Help” tab will give some information about expressions as well as a link to the manual. You can also refer to this cheatsheet for some quick transform functions written in GREL (that is OpenRefine’s custom version of regular expressions.

2.3. Click the arrow above the column → Transpose will give some options to reorder the table structure.

3. Add new columns:

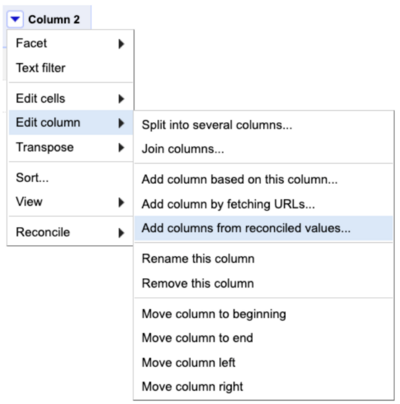

3.1. You can add new columns by transforming existing ones: Click the arrow above the column → Edit column → Add column based on this column. The process is similar to transformation, except the old column is not deleted.

3.2. You can also add new columns based on the data that is linked in your reconciled columns since the databases you are reconciling against usually store additional information (read more about reconciliation further below in this section). Simply click the arrow above the column → Edit column → Add columns from reconciled values → Choose the properties you need for your new columns → Click OK.

Configure client

[edit | edit source]The default OpenRefine client is configured to connect to Wikidata via the Wikidata extension. Since version 3.5, the OpenRefine client can connect to any arbitrary Wikibase via the same extension, provided that there is a public reconciliation endpoint for that instance. To connect to a Wikibase instance for purposes of reconciliation, and eventually upload, that instance needs to run an OpenRefine reconciliation service, and should have a manifest with the required configuration. You can find out more about these requirements in this presentation given at WikidataCon 2021. To start using OpenRefine with an existing Wikibase reconciliation service, as well as other available public reconciliation endpoints, follow the steps below.

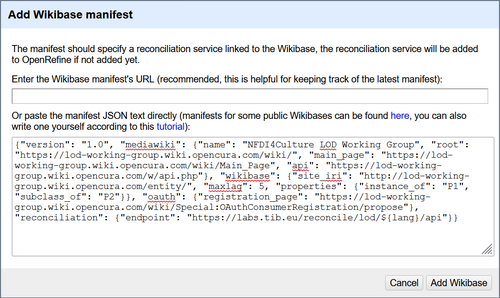

1. Add Wikibase manifest:

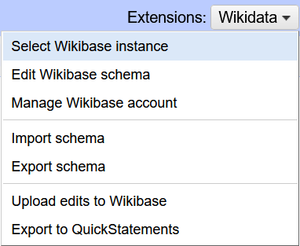

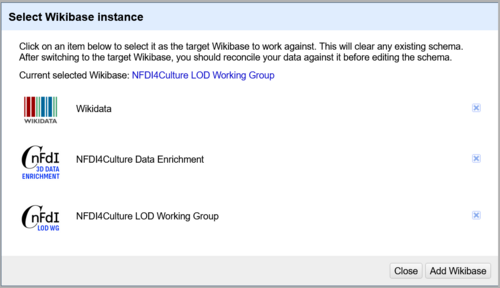

1.1. In the top right corner, click on Extensions: Wikidata → Select Wikibase instance → Add Wikibase → paste the manifest JSON → Add Wikibase.

1.2. If you already added this Wikibase, you just have to click on Extensions: Wikidata → Select Wikibase instance → select your Wikibase.

1.3. This should automatically add the reconciliation service for this Wikibase to the reconciliation algorithm settings in OpenRefine.

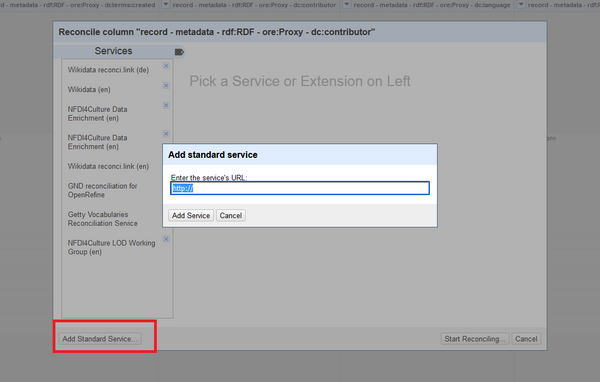

2. Add other reconciliation endpoints:

2.1. You need to find out the URL for your desired reconciliation endpoint. Here are some suggestions:

| Wikidata | https://wikidata.reconci.link/en/api (replace "en" with your language code) |

| GND | https://lobid.org/gnd/reconcile/ |

| Getty Vocabularies (ULAN, AAT, TGN) | http://services.getty.edu/vocab/reconcile/ |

| Any Sparql endpoint | https://github.com/stkenny/grefine-rdf-extension/ (does not work with 3.5.0 yet) |

Find more information about available reconciliation endpoints for OpenRefine here.

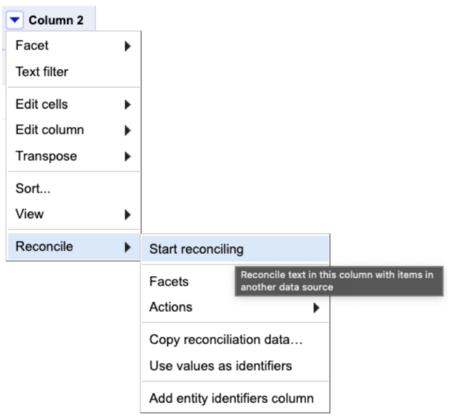

2.2. Above the column that you want to reconcile, click the down arrow → Reconcile → Start reconciling → Add standard service → paste the URL. You can now use this service for all future projects. (Note: If the reconciliation service for your own Wikibase wasn’t added here automatically in Step 1, you can add it manually just like all other reconciliation endpoints.)

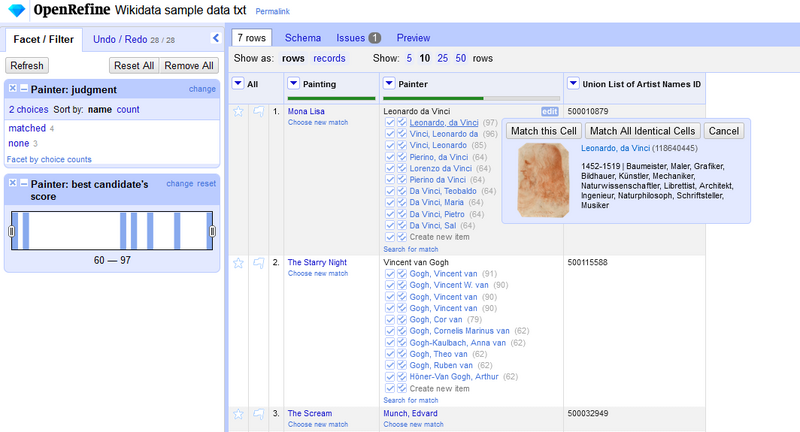

Reconcile with Wikibase

[edit | edit source]In order to upload data to Wikibase you need to reconcile all columns containing entities of the data type ‘item’ (this means entities that are represented as an item in Wikibase as opposed to values that are represented differently, for example as a string or URL). The reconciliation process attempts to find a match for these entities in the database, and if no match is found, it suggests that you make a new item instead. This ensures that you do not create duplicate items in your Wikibase. Note that you do not need to (and cannot) reconcile columns that contain data types such as dates, media items, geo coordinates, or plain strings of text.

1. To start this process, click the down arrow above the column that you want to reconcile → Reconcile → Start reconciling → choose the reconciliation endpoint of your Wikibase instance.

You can improve your results by having a look at the options for reconciliation, like adding another column to help distinguish between similar items or pre-choosing the type (class) of your item.

2. Once the reconciliation process is finished, you might still need to do some manual work. On the left side, you can see how many items were matched and how many were not. First, check some of the items that were automatically matched to see if the matching process works for your data. Second, check the items that were not matched. They might have some suggested candidates. You can confirm them by either clicking on the single tick (to confirm only for this item) or the double tick (to confirm for all similar items). If none of the matches are right, you can also click on “Search for match” to perform a search directly in Wikibase. This manual search process can help in cases where the reconciliation algorithm fails.

3. If the reconciliation process found no matches for some or all of your items, you have to create the items. If only a few items were unmatched, you can choose to create new items manually by either clicking on the single tick option to “Create new item” (to confirm only for this item) or the double tick (to confirm for all similar items). If all of your items were unmatched (or you use facets to select all the unmatched cells), you can also create new items in bulk. To do so you can click the down arrow above the column → Reconcile → Actions → “Create a new item for each cell” OR “Create one new item for similar cells” depending on your data.

4. If you are working with a dataset that already has some items uploaded in Wikibase and are subsequently adding more data related to these items, note that you can reconcile quicker if you do it directly against QIDs. If you have the QIDs for your items (either as whole URLs or just Q numbers), which you may pull via a SPARQL query for example, you can choose the following option to reconcile: click the down arrow above the column → Reconcile → Use values as identifiers.

Data enrichment

[edit | edit source]Reconciliation across various other endpoints, including Wikidata, can also be used to do effective data enrichment especially in relation to entities that may be common across multiple data sources such as famous people, locations, or technical concepts like software and file formats, among many others. The process to do this was already mentioned in the section on Transforming data and adding new columns in an OpenRefine project. Essentially, you can reconcile the same dataset against multiple endpoints and use data added in the form of additional columns from one data source, e.g. Wikidata, as new data to then be uploaded to another data repository, e.g. your Wikibase. For example, in the MVP project at TIB, we enriched data about 3D media file formats with data from Wikdiata and then uploaded that to our Wikibase instance.

Data upload

[edit | edit source]Upload using OpenRefine

[edit | edit source]Important tip: If you have items with multiple values for one property (i.e. one record consists of multiple rows), in order to upload data from all rows, please follow these steps: 1. Switch to row view. 2. Click the arrow above the column that contains your identifier → edit cells → Fill down. This will ensure that all values can be correctly connected to the corresponding items.

Upload process:

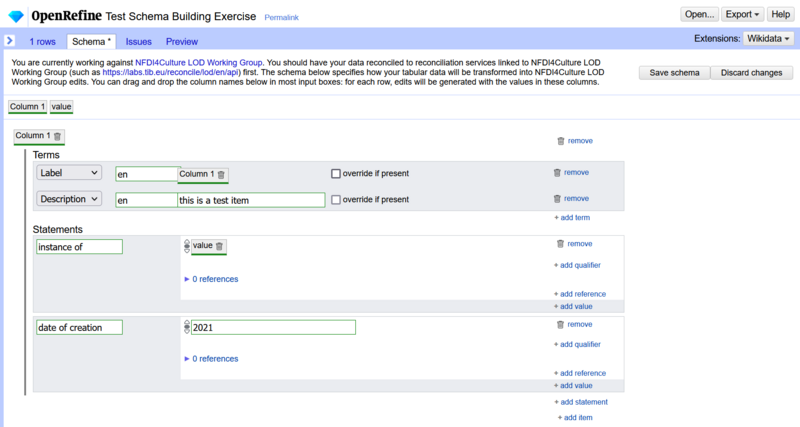

1. Connect your work sheet to the Wikibase instance of your choice: Click on Extensions: Wikidata in the top right corner → Select Wikibase instance → Add Wikibase (if your instance is not there already) → Select your Wikibase Now you need to create a schema to map out your items. After choosing the Wikibase instance, new tabs should appear in the top left. Select "Schema".

2. Now you need to create a schema to map out your items. After choosing the Wikibase instance, new tabs should appear in the top left. Select "Schema".

2.1. Choose the column that should act as the main identifier. This will probably be a column with IDs or names. Now click on “add item” and drag that column name to the empty space at the top. All items in this column will be described with the statements that you will add in the next step. (Note: This column has to be reconciled.) If you are creating new items, rather than just adding more data to existing items, you will need to also add at least Labels for these items, as well as optionally – Descriptions and Aliases. You can do this by choosing the option “add term”. You also have to specify a language for all the Label, Description or Alias values. In these value fields, you can drag a column with names or titles to populate the Label field, as well as other specially prepared columns (see section 1 of this guide) for Description or Alias. If the Description value is the same for all items you want to create, e.g. a painting by X artist, you can type that value as plain text directly into the field.

2.2. Now you can add statements: Click "add statement", fill in the property field and then drag the column with the corresponding values field. If the value is the same for all items, you can also type that in the field directly. Existing items in the Wikibase will be auto-suggested as possible values as soon as you start typing.

2.3. You may have to add more than one type of item. Example: You have a list of artists and the places they were born in. During the data modelling step, you decide that you want to create items for both the artists and the places and you add the corresponding properties accordingly. Both types of items can be added in the same schema. Just repeat Step a and b until you have added all types of items that you need. (Note: Building very large schemas slows down upload time and can cause errors, upload via OpenRefine works best when you first create items, and later add more statements to them.)

2.4. Once you are done, check out the “Issues” tab and the “Preview” tab. Now you can see what the finished data will look like and what you could improve.

Important tip: OpenRefine can add a lot of data quickly, but if there are any mistakes in your schema, you would have added a lot of false data and it may take a long time to clean up. Therefore, you should do a test upload of a few rows as a first step. You can do this by clicking on the star to the left of every row, faceting by star (via the option to facet from the down arrow on the first column of the spreadsheet), and then selecting only the starred rows. The “Preview” tab will tell you how many edits you are making.

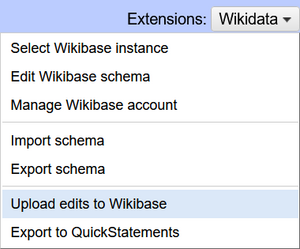

3. To upload, click Extensions: Wikidata → Upload edits to Wikibase. Here you will be prompted to log into Wikibase with your regular username and password. You will also need to add a quick summary of the edits you are making. This is for version control purposes. We recommend adding meaningful descriptions, e.g. “updating paintings’ metadata” or “adding new artists and artists’ data”, etc. (Ignore any warnings you get regarding log in passwords at this stage.)

4. Check the uploaded items. Go to [https: + your Wiki URL + /wiki/Special:Contributions/ + your user name] to see all your uploads.

5. If the test upload went well, you can now upload the rest of the items.

Other ways of uploading

[edit | edit source]There are some cases, when uploading viaOpenRefine might not be the right solution. For example, OpenRefine cannot yet deal with custom data types, such as local media or extended date type format (these are new Wikibase extensions, you can read more about them here). OpenRefine also cannot upload wikitext (i.e. long-form text that goes into the MediaWiki namespace of the database, rather than the structured data namespace, which is denoted by Item: and Property:). Last but not least, OpenRefine can only upload new data to Wikibase, it cannot reverse changes or override existing data statements.

However, there are several alternatives that address these issues with their own pros and cons.

1. QuickStatements: This is a separate data upload tool that is usually packaged with your Wikibase installation. Quickstatements does not have a user-friendly interface for data cleaning or preparation, but if you load up a specially prepared file following the Quickstatements syntax, you can upload data in bulk fairly efficiently. OpenRefine can help here as well. After you complete reconciliation and schema preparation, if you go to the Export button in the top right corner, you can export your generated edits as a QuickStatements file. You can then paste the contents of this file directly into the QuickStatements interface. Quickstatements tends to process uploads faster than OpenRefine particularly with large volumes of data. And unlike OpenRefine, Quickstatements allows you to also remove statements, not just add them, so you effectively override information, if you need to.

2. Python bots: If you are working with more special data types, need to add wikitext or want to delete a lot of data, you can also write your own Python bot to interact directly with the API. A Python library for this purpose exists, but it can get quite complex and the documentation quality can vary. If you choose this option, you can start here.

You can also try Wikidata Integrator, another python library for reading and writing to Wikidata/ Wikibase. It was created by members of the Wikidata and Wikibase communities who needed a higher integration with the Wikidata SPARQL endpoint to ensure greater data consistency (e.g. performing duplicate checks, etc), which wasn’t offered by Pywikibot.

Please note that this Data Upload Pipeline guide remains a living document. It is not meant to be exhaustive and is likely to change as we develop our MVP project further, and as new tools and features become available within the Wikibase and OpenRefine open source development ecosystem.

Acknowledgements: Some parts of Sections 1 and 2 (Data collection and data modelling) in this document are adapted from materials originally developed by Lozana Rossenova, in collaboration with Deena Engel, for this learning resource.