Psycholinguistics/Parsing

What is Parsing?

Parsing is the assignment of the words in a sentence to their appropriate linguistic categories so that the listener or reader can understand what the speaker is conveying. It is not simply the sorting of words into diagrams or categories. It also involves evaluating the meaning of the sentence, using the rules of syntax together with the inferences drawn from each word. This evaluation of meaning is what makes parsing such a complex process. When speech or text is parsed, each word in a sentence is examined and processed so that it contributes to the overall meaning of the sentence as a whole.

Parsing occurs while language is being processed, drawing on both past and present input to help understand what comes next. When a sentence is read, the reader decides which categories its words belong in. These categories include basic thematic roles (such as agent and patient), which are assigned based on what is inferred. The categories themselves are simple enough that a computer can assign them if it knows enough grammatical rules and roles.

However, parsing cannot rely on simple grammatical rules alone, because a word or phrase can often be assigned to more than one category or take on more than one meaning, and the choice can drastically change the meaning of a sentence. This is part of what makes parsing so complex: it must go beyond the basic grammatical analysis of a word or sentence and apply the correct meaning to it.

Parsing allows the reader to make these decisions based on cues from the words already read in the sentence and the conclusions that can be drawn from them. The meaning drawn from what was read previously supports understanding of what is currently being read. This process continues from word to word, sentence to sentence and paragraph to paragraph.

What Causes Parsing?

Parsing is driven by four main factors. Each contributes to correct parsing; however, the exact weight of each factor's contribution is unknown. These factors are as follows:

Thematic Roles

Thematic roles are critical to parsing because they provide the most basic understanding of a sentence, and this initial comprehension must be in place before deeper inferences can be drawn. As a sentence is spoken or read, a role is assigned to every noun phrase by role assignors such as verbs. The verb determines which roles need to be filled in the sentence, and the available noun phrases are matched to those roles. The primary parsing decisions are based on the lowest "cost" of linking roles and noun phrases together: the assignment that breaks the fewest rules is preferred.
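The idea of choosing the lowest-cost link between roles and noun phrases can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. The verb frame for "kicked", the feature sets, the example sentence "The boy kicked the ball" and the rule that each missing feature counts as one broken rule are all hypothetical choices made for this example, not a published model.

```python
from itertools import permutations

# Hypothetical verb frame: each role maps to the semantic features it requires.
# The roles, features and words are illustrative assumptions only.
VERB_FRAMES = {
    "kicked": {"agent": {"animate"}, "patient": {"physical"}},
}

# Hypothetical semantic features for each noun phrase.
PHRASE_FEATURES = {
    "the boy": {"animate", "physical"},
    "the ball": {"physical"},
}


def broken_rules(role, phrase, frame):
    """Count the features a role requires that the phrase lacks."""
    return len(frame[role] - PHRASE_FEATURES[phrase])


def assign_roles(verb, phrases):
    """Try every pairing of roles and phrases; keep the cheapest one."""
    frame = VERB_FRAMES[verb]
    roles = list(frame)
    best, best_cost = None, None
    for ordering in permutations(phrases, len(roles)):
        cost = sum(broken_rules(r, p, frame) for r, p in zip(roles, ordering))
        if best_cost is None or cost < best_cost:
            best, best_cost = dict(zip(roles, ordering)), cost
    return best, best_cost


# Phrases are listed in the "wrong" order on purpose: cost, not position, decides.
print(assign_roles("kicked", ["the ball", "the boy"]))
# → ({'agent': 'the boy', 'patient': 'the ball'}, 0)
```

Pairing "the ball" with the agent role breaks one rule (a ball is not animate), so the parser settles on the zero-cost assignment instead.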

Thematic roles draw on the lexical information being presented and depend on interpreting the words of a sentence semantically. Role assignment links and coordinates semantic and discourse information with lexical and syntactic information (Christianson et al., 2001).

Semantic Features

Semantic features are associations that are directly linked to a particular word or idea. These associations may be conscious or subconscious. For example, when a person thinks of an apple, they might also think of the color red, a worm or a tree. If a person thinks of a bird, then flying, feathers and nests may come to mind, as they are semantic features related to birds.

When a sentence or phrase is parsed, the parser uses the semantic features of its words to draw inferences about its meaning. This is crucial to understanding a sentence, because examining thematic roles alone is sometimes not enough. This is especially true of idioms or slang that do not mean what their literal thematic roles suggest. An example is "Jane kicked the bucket." Parsing that sentence without knowing the right semantic features would lead the reader to believe that Jane actually knocked over a bucket with her foot. Knowing that this phrase is also semantically associated with dying, however, the reader can parse the sentence correctly.

However, semantic features do not have to be so obvious. When a sentence is vague or could have multiple meanings, parsing relies on the associations of its words to derive the correct meaning. Consider the sentence "Rover barked when someone entered the house." The semantic features in this sentence suggest that Rover is a dog. This is never stated, so Rover could in principle be a cat, a seal or even a person. However, because of these semantic features (in this case, the name Rover, which is typical of dogs, and the word barked), the reader can correctly infer that Rover is a dog.

Knowing the overall meaning and associations in a sentence allows the content to be understood much faster, which decreases the time it takes to parse a sentence. However, relying too heavily on semantics can also cause errors, leading the parser to draw the wrong inferences. An old riddle illustrates this: "A plane crashes on the border between Canada and the United States. Where do they bury the survivors?" The semantics associated with a plane crash and with burying suggest that the question is about victims, so the reader derives the wrong meaning and misses that survivors are not buried at all. Even with such errors, relying on the semantic features of a sentence allows parsing to be completed much more efficiently.

Probabilities

The probability that a word carries a particular meaning in a particular sentence directly drives parsing. During parsing, the reader assumes the meaning that is most likely to occur.

Probabilities are especially important when a sentence is ambiguous. Take "Visiting relatives can be such a nuisance." If the sentence before or after it were "We hate the long drive," it can be assumed that the sentence means going to visit one's relatives rather than relatives visiting the speaker. This assumption is based on the probability of what the speaker means; it is no more than an educated guess based on the information presented.
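The educated guess described above can be framed as picking the reading with the highest conditional probability given the context, using Bayes' rule. In this sketch the two readings come from the example sentence, but every prior and likelihood value is invented for illustration, and the second context sentence ("They never leave.") is a hypothetical addition.

```python
# Two readings of "Visiting relatives can be such a nuisance."
# All probabilities below are made-up values for illustration only.
PRIOR = {
    "we visit relatives": 0.5,
    "relatives visit us": 0.5,
}

# How likely each context sentence is under each reading (hypothetical).
LIKELIHOOD = {
    "We hate the long drive.": {"we visit relatives": 0.8, "relatives visit us": 0.1},
    "They never leave.": {"we visit relatives": 0.1, "relatives visit us": 0.7},
}


def interpret(context):
    """Return the most probable reading and the normalized posterior (Bayes' rule)."""
    unnormalized = {r: PRIOR[r] * LIKELIHOOD[context][r] for r in PRIOR}
    total = sum(unnormalized.values())
    posterior = {r: p / total for r, p in unnormalized.items()}
    best = max(posterior, key=posterior.get)
    return best, posterior


print(interpret("We hate the long drive.")[0])  # → we visit relatives
```

With equal priors, the context sentence alone tips the balance; changing the context to "They never leave." flips the preferred reading.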

Syntactic Phrase Structure

Knowledge of syntactic phrase structure is critical for parsing to occur. However, applying the rules of grammar too rigidly, and being unwilling to deviate from them, can cause parsing errors.

Serial and Parallel Processing

There are two main types of sentence processing that allow parsing to occur. The literature has long debated which of these types, or whether a combination of them, is the definitive strategy for parsing, but no firm conclusion has been reached (Gibson, 2000; Ashcraft, 2006).

The two types of processing are as follows.

Serial Processing

In serial processing, the parser commits to only one syntactic structure at a time. The reader pursues that structure and, upon finding that it is inaccurate, tries another.

Parallel Processing

In parallel processing, multiple possible structures, and therefore multiple possible sentence meanings, are processed at once.
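The contrast between the two strategies can be sketched with the garden path sentence "The horse raced past the barn fell", which appears later in this chapter. Here an "analysis" is reduced to a hypothetical table saying which verbs it can account for, whereas real parsers build full structures. The serial parser tries the analyses in a fixed order and counts how often it has to back up. The parallel parser keeps every analysis that still fits and prunes the others as words arrive.

```python
SENTENCE = "the horse raced past the barn fell".split()
VERBS = {"raced", "fell"}

# Two hypothetical analyses, listed in the order a serial parser would try them.
# Each maps the verbs it can account for to the role it gives them.
ANALYSES = [
    ("main verb", {"raced": "main verb"}),
    ("reduced relative", {"raced": "participle", "fell": "main verb"}),
]


def consistent(analysis, prefix):
    """An analysis survives only if it gives a role to every verb seen so far."""
    _, roles = analysis
    return all(word in roles for word in prefix if word in VERBS)


def serial_parse(words, analyses):
    """Commit to one analysis at a time; move to the next one when it fails."""
    current, reanalyses = 0, 0
    for i in range(1, len(words) + 1):
        while not consistent(analyses[current], words[:i]):
            current += 1
            reanalyses += 1
            if current == len(analyses):
                return None, reanalyses  # no analysis fits the sentence
    return analyses[current][0], reanalyses


def parallel_parse(words, analyses):
    """Keep every analysis alive and drop those the input rules out."""
    alive, history = list(analyses), []
    for i in range(1, len(words) + 1):
        alive = [a for a in alive if consistent(a, words[:i])]
        history.append(len(alive))
    return [name for name, _ in alive], history


print(serial_parse(SENTENCE, ANALYSES))    # → ('reduced relative', 1)
print(parallel_parse(SENTENCE, ANALYSES))  # → (['reduced relative'], [2, 2, 2, 2, 2, 2, 1])
```

Both strategies end with the same analysis; the difference is that the serial parser pays for one reanalysis at "fell", while the parallel parser pays for carrying two analyses through the first six words.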

Chomsky's Transformational Grammar

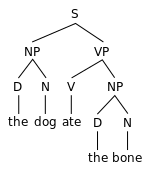

The basis of Chomsky's theory of grammar is that words can be combined to create meaningful phrases; the rules for combining them form a phrase structure grammar. Parsing is the process of dividing a sentence back into these phrases. Chomsky proposed that sentences have both a surface structure and a deep structure. The deep structure, which carries the meaning of a sentence, is its most abstract and meaningful level of representation. Parsing is critical to determining this deep structure.

Chomsky also created the tree diagrams that serve as a visual representation of how parsing occurs.

Parsing with tree diagrams can proceed top-down or bottom-up. Top-down parsing, often called conceptually driven parsing, starts at the top of the tree diagram. It is guided by higher-level prior knowledge, which in turn affects lower-level processes. Bottom-up parsing, or data-driven parsing, makes decisions from the bottom of the tree diagram: it is guided only by the features of the sentence itself, using the information available at that moment. The parser starts with the most basic elements and then moves to more complex ones (Ashcraft, 2006).
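The two directions can be illustrated computationally with a toy grammar for "Jane kicked the bucket", a sentence used earlier in this chapter. These are computational analogues of the cognitive strategies described above, offered as a sketch. The grammar, the category names and both functions are hypothetical. The top-down parser starts from S and predicts its parts (recursive descent with backtracking). The bottom-up parser starts from the words and combines them (a greedy shift-reduce strategy that happens to work for this tiny grammar but is not a general-purpose parser).

```python
# Toy grammar and lexicon; both are hypothetical and cover only this sentence.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Name"], ["Det", "N"]],
    "VP": [["V", "NP"]],
}
LEXICON = {"Jane": "Name", "kicked": "V", "the": "Det", "bucket": "N"}


def expand(symbol, words, pos):
    """Top-down: predict `symbol` and yield (tree, next position) for each match."""
    if symbol not in GRAMMAR:                        # word category: check the input
        if pos < len(words) and LEXICON.get(words[pos]) == symbol:
            yield (symbol, words[pos]), pos + 1
        return
    for rhs in GRAMMAR[symbol]:                      # try each rule for the symbol
        for children, end in expand_all(rhs, words, pos):
            yield (symbol, *children), end


def expand_all(symbols, words, pos):
    """Expand a sequence of symbols left to right, backtracking on failure."""
    if not symbols:
        yield [], pos
        return
    for first, mid in expand(symbols[0], words, pos):
        for rest, end in expand_all(symbols[1:], words, mid):
            yield [first] + rest, end


def parse_top_down(words):
    """Return the first tree rooted in S that covers every word, or None."""
    for tree, end in expand("S", words, 0):
        if end == len(words):
            return tree
    return None


def parse_bottom_up(words):
    """Bottom-up: shift each word's category, then reduce while a rule matches."""
    rules = [(lhs, rhs) for lhs, options in GRAMMAR.items() for rhs in options]
    stack = []                                       # (label, tree) pairs
    for word in words:
        category = LEXICON[word]
        stack.append((category, (category, word)))   # shift
        reduced = True
        while reduced:                               # greedy reduce
            reduced = False
            for lhs, rhs in rules:
                top = stack[-len(rhs):]
                if [label for label, _ in top] == rhs:
                    del stack[-len(rhs):]
                    stack.append((lhs, (lhs, *[tree for _, tree in top])))
                    reduced = True
                    break
    if len(stack) == 1 and stack[0][0] == "S":
        return stack[0][1]
    return None


sentence = "Jane kicked the bucket".split()
print(parse_top_down(sentence))
print(parse_top_down(sentence) == parse_bottom_up(sentence))  # → True
```

Both parsers arrive at the same tree; they differ only in whether the structure is predicted from S downward or assembled from the words upward.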

Theories of Parsing

There are two main theories of how the English language is parsed.

1) Garden Path Model: Minimal Attachment

2) Constraint Satisfaction Models

Garden Path Sentences

The Garden Path model is a dominant theory of how people parse words together to interpret the meaning of statements. Its name comes from a metaphor about being led down the wrong path. In psycholinguistics, a person can be led down the wrong path while reading a sentence when they make inaccurate assumptions about how its noun phrases fit together. The reader is not aware of being led down the wrong path. At the beginning of the sentence, or the path, the reader believes they are proceeding in the right direction with the syntactic structure and making the correct assumptions. Then, suddenly, new information later in the sentence causes the reader to fall down the rabbit hole. This new information causes confusion because, up until that point, the reader assumed their perception of the garden path was correct.

The assumption behind this theory is that the reader perceives the sentence as having only one context, failing to see that there may be another way to interpret it based on its noun phrases. The reader remains confident in this judgment and assumes it is right. Garden path sentences create confusion when the reader's preconceived judgments are shattered (van Gompel et al., 2006).

There are generally three ways a person could process a sentence:

- Assemble a structure for just one of the possible interpretations and ignore all others (like the garden path model)

- Take into consideration all of the possible interpretations for the noun phrase at the same time

- Complete a partial analysis, with minimal commitment to one perception, waiting to make a final decision until more information is obtained.

Principles that Guide the Garden Path Model

There are two principles of the Garden Path Model which explain how incorrect assignment of roles in a sentence can create confusion.

Late Closure

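Late closure is the preference to attach each new word or phrase to the phrase that is currently open (the phrase already being processed). It causes a parsing error when the new material actually belongs to a new phrase.

An example of this type of error is: While Mary was mending the sock fell off her lap. Readers first attach "the sock" to the open clause as the object of "mending", and must reanalyze the sentence when "fell" arrives.

Minimal Attachment

Minimal attachment is the preference for the simplest structure: each incoming word is attached to the structure already built, using as few new syntactic nodes as possible. It is a strategy of parsimony, where the simplest structure is seen as the most accurate and therefore the best. An example of this type of error is: The horse raced past the barn fell (meaning: The horse that was raced past the barn fell). Readers first take "raced" as the main verb, because that analysis needs fewer nodes than a reduced relative clause, and are led down the garden path when "fell" appears.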
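Minimal attachment can be sketched as a node count over candidate analyses of the prefix "The horse raced past the barn". The two trees below are simplified, hypothetical bracketings; real syntactic analyses differ in detail, for example in how the relative clause is represented. The point is only that the reduced-relative reading needs extra NP and RC nodes, so a parser that prefers the fewest nodes picks the main-verb reading first.

```python
# Simplified, hypothetical trees for the prefix "the horse raced past the barn".
# Each node is (label, child, ...); words are plain strings.
MAIN_VERB = (
    "S",
    ("NP", ("Det", "the"), ("N", "horse")),
    ("VP", ("V", "raced"),
     ("PP", ("P", "past"), ("NP", ("Det", "the"), ("N", "barn")))),
)
REDUCED_RELATIVE = (
    "S",                                   # the main-clause VP is still missing
    ("NP",
     ("NP", ("Det", "the"), ("N", "horse")),
     ("RC",
      ("VP", ("V", "raced"),
       ("PP", ("P", "past"), ("NP", ("Det", "the"), ("N", "barn")))))),
)


def count_nodes(tree):
    """Count syntactic nodes; words (plain strings) are not counted."""
    if isinstance(tree, str):
        return 0
    return 1 + sum(count_nodes(child) for child in tree[1:])


def minimal_attachment(candidates):
    """Prefer the candidate analysis that needs the fewest nodes."""
    return min(candidates, key=lambda name: count_nodes(candidates[name]))


candidates = {"main verb": MAIN_VERB, "reduced relative": REDUCED_RELATIVE}
for name, tree in candidates.items():
    print(name, count_nodes(tree))      # → main verb 11, then reduced relative 13
print(minimal_attachment(candidates))   # → main verb
```

The cheaper main-verb tree wins, which is exactly the commitment that fails once "fell" arrives.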

Misconceptions Linger

According to the Garden Path Model, the initial mistakes in reading and interpreting a sentence affect the inferences that the reader makes about the sentence as a whole. The reader does realize that an error has occurred in the interpretation of the sentence. However, research by Christianson et al. (2001) suggests that the interpretation readers form at the beginning of the sentence persists even after it has been shown to be wrong and has caused confusion.

These lingering misconceptions result from partial reanalysis. They depend on variables such as the length of the ambiguous region, the plausibility of the sentence (whether it makes sense to the reader) and whether or not the sentence is a garden path sentence.

The conclusion of this research is that the reader, instead of arriving at a complete, ideal interpretation, creates a "good enough" interpretation of the ambiguous sentence. A reader's understanding does not aim for a conclusion that fits the sentence structure perfectly. Instead, the reader stops once they reach an interpretation that seems to make sense and deems further processing of the sentence unnecessary (Christianson et al., 2001).

The "Good Enough" Theory

Fernanda Ferreira and colleagues at the University of Edinburgh (2002) are among the primary researchers behind the "good enough" theory of parsing garden path sentences. Since 2002, research in support of this theory has increased.

Research by Barton & Sanford (1993) suggests that the processing of sentences is relatively shallow. A reader will make an assumption and will not examine it further until deeper processing of that information becomes necessary. For example, consider the sentence "Andrea and Amie saved $100." When a sentence such as this is ambiguous, the reader generally will not question it or draw conclusions about it until those conclusions are demanded (did they each save $100, or did they save $100 in total?). The reader operates under the "good enough" approach until deeper processing of the true meaning is required.

Challenges to the Garden Path Model

The Garden Path Model is not the only model of parsing. Listed below are some other models of how parsing may occur, along with other challenges to the Garden Path theory in general.

Constraint Satisfaction Model

This model states that the reader uses all of the available information at once when parsing a sentence: lexical, syntactic, discourse and contextual information are all taken into consideration simultaneously. According to this model, readers use all the information they have, all the time. It is considered a form of parallel processing, because multiple structures are considered at once.

Dependency Locality Theory

This theory argues that the reader prefers to attach information to local nodes rather than to long-distance nodes. This preference is based on the amount of working memory required to understand a sentence: as the working memory needed to make sense of a sentence increases, so does the reader's tendency to parse locally rather than use long-distance nodes.

The locality principle is central to this theory: the cost of integrating two elements depends directly on the distance between them (Gibson, 2000).
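The locality idea can be sketched as a cost that grows with the distance between a word and the word it attaches to. Gibson (2000) measures distance roughly in terms of new discourse referents (nouns and verbs). This sketch simplifies that to a plain count of the nouns and verbs lying strictly between the two words, and ignores the theory's other components. The subject-relative versus object-relative pair is a standard contrast in this literature. The part-of-speech tags and dependency links are annotated by hand for the example, and the numbers mean nothing beyond the comparison between the two sentences.

```python
# Simplified distance measure: nouns (N) and verbs (V) count as new discourse
# referents. Tags and dependency pairs below are hand-annotated assumptions.
REFERENT_TAGS = {"N", "V"}


def integration_cost(tags, i, j):
    """Number of nouns and verbs strictly between word positions i and j."""
    lo, hi = sorted((i, j))
    return sum(1 for tag in tags[lo + 1:hi] if tag in REFERENT_TAGS)


def total_cost(tags, dependencies):
    """Sum the integration cost over every (word, word) dependency."""
    return sum(integration_cost(tags, i, j) for i, j in dependencies)


# "The reporter who attacked the senator admitted the error" (subject relative)
# Links: reporter-admitted, who-attacked, attacked-senator, admitted-error.
SUBJECT_RELATIVE = (
    ["D", "N", "Pro", "V", "D", "N", "V", "D", "N"],
    [(1, 6), (2, 3), (3, 5), (6, 8)],
)
# "The reporter who the senator attacked admitted the error" (object relative)
# Links: reporter-admitted, senator-attacked, who-attacked, admitted-error.
OBJECT_RELATIVE = (
    ["D", "N", "Pro", "D", "N", "V", "V", "D", "N"],
    [(1, 6), (4, 5), (2, 5), (6, 8)],
)

for name, (tags, deps) in [("subject relative", SUBJECT_RELATIVE),
                           ("object relative", OBJECT_RELATIVE)]:
    print(name, total_cost(tags, deps))
# → subject relative 2
# → object relative 3
```

The extra cost in the object relative comes from linking "attacked" back to "who" across "the senator", the kind of longer dependency the locality principle predicts is harder to integrate.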

Competition Model

Most theories are based on how people parse English. Different languages may weight parsing cues differently, depending on how much speakers rely on each cue. For example, English speakers rely heavily on word order, while Hungarian speakers do not.

Language processing is a series of competitions between lexical items, phonological forms and syntactic patterns. There may be other important processing factors that the Garden Path Model and other models do not consider, precisely because those factors are not used in English. More research comparing parsing across languages is needed to determine whether one theory can encompass all languages (MacWhinney & Bates, 1993).
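The idea that languages weight cues differently can be sketched as a weighted competition between nouns for the agent role. The cue names and every weight below are hypothetical. The only point taken from the text is that English weights word order heavily and Hungarian does not; treating case marking as Hungarian's stronger cue is an added assumption for illustration, as is the abstract two-noun sentence.

```python
# Hypothetical cue weights per language; the numbers are illustrative only.
CUE_WEIGHTS = {
    "English": {"first_noun": 0.8, "unmarked_case": 0.1, "animate": 0.1},
    "Hungarian": {"first_noun": 0.1, "unmarked_case": 0.7, "animate": 0.2},
}

# A hypothetical sentence where the cues conflict: the first noun carries an
# object (accusative) case marker, the second noun is unmarked.
NOUNS = [
    {"word": "first noun", "first_noun": True, "unmarked_case": False, "animate": True},
    {"word": "second noun", "first_noun": False, "unmarked_case": True, "animate": True},
]


def agent_support(noun, language):
    """Sum the weights of every cue that points to this noun as the agent."""
    return sum(w for cue, w in CUE_WEIGHTS[language].items() if noun[cue])


def choose_agent(nouns, language):
    """The candidate with the most cue support wins the competition."""
    return max(nouns, key=lambda noun: agent_support(noun, language))["word"]


for language in CUE_WEIGHTS:
    print(language, choose_agent(NOUNS, language))
# → English first noun
# → Hungarian second noun
```

The same input produces different winners purely because the cue weights differ, which is the kind of cross-language variation the Competition Model emphasizes.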

Computers and Parsing

Although most of the information presented here concerns how people parse English, it should be noted that computers can now be programmed to parse sentences correctly. First, a lexicon is established; if the computer is parsing English, the lexicon would be the entire English dictionary. Next, grammar rules must be established. When a computer encounters a sentence, it parses the sentence multiple times, creating tree diagrams until a viable meaning is found (Ashcraft, 2006).

However, ambiguity is a problem when a computer parses sentences. Many English sentences are vague and rely on probability or context to determine their meaning. Computers can also use probability and context, based on knowledge of previous conversations, but they make more mistakes.
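The ambiguity problem can be made concrete with a small chart parser. This sketch swaps in the CKY algorithm, a standard dynamic-programming parser for grammars in Chomsky normal form; it is not necessarily the procedure Ashcraft describes. It uses a hypothetical toy grammar and the classic ambiguous sentence "I saw the man with the telescope". Rather than reparsing repeatedly, CKY fills a chart once and counts every complete tree; a count above one means the computer still needs probability or context to choose.

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form: (parent, left, right).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # the PP modifies the seeing
    ("NP", "NP", "PP"),   # the PP modifies the man
    ("NP", "Det", "N"),
    ("PP", "P", "NP"),
]
LEXICON = {"I": {"NP"}, "saw": {"V"}, "the": {"Det"}, "man": {"N"},
           "with": {"P"}, "telescope": {"N"}}


def count_parses(words, start="S"):
    """Count the distinct trees rooted in `start` that span all the words."""
    n = len(words)
    # chart[i][j] maps a category to the number of ways it can span words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for category in LEXICON[word]:
            chart[i][i + 1][category] += 1
    for length in range(2, n + 1):              # build longer spans from shorter ones
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):           # every split point
                for parent, left, right in BINARY_RULES:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]


print(count_parses("I saw the man with the telescope".split()))  # → 2
print(count_parses("I saw the man".split()))                     # → 1
```

The grammar licenses two trees for the longer sentence, one where the telescope is used for seeing and one where the man has it, and nothing in the rules themselves prefers either.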


Resources

Ashcraft, M. H., & Klein, R. H. (2006). Cognition. Toronto: Pearson Canada.

Barton, S. B., & Sanford, A. J. (1993). A case study of anomaly detection: Shallow semantic processing and cohesion establishment. Memory and Cognition, 21, 477–8.

Christianson, K., Hollingworth, A., Halliwell, J. F., & Ferreira, F. (2001). Thematic roles assigned along the garden path linger. Cognitive Psychology, 42, 368–407.

Ferreira, F., & Patson, N. D. (2007). The “good enough” approach to language comprehension. Language and Linguistics Compass, 1, 71–83.

Gibson, E. (2000). The dependency locality theory: A distance-based theory of linguistic complexity. In A. Marantz, Y. Miyashita, & W. O'Neil (Eds.), Image, language, brain: Papers from the first mind articulation project symposium (pp. 94–126). Cambridge, MA: The MIT Press.

MacWhinney, B., & Bates, E. (1993). The crosslinguistic study of sentence processing. Journal of Child Language, 20, 463–471.

van Gompel, R. P. G., Pickering, M. J., Pearson, J., & Jacob, G. (2006). The activation of inappropriate analyses in garden-path sentences: Evidence from structural priming. Journal of Memory and Language, 55, 335–362.

http://www.psy.ed.ac.uk/people/fferreir/Fernanda/Ferreira_Patson_LLC_2007.pdf

Learning Exercise: Test Your Knowledge

Complete the following quiz questions about the topic of parsing and what you have learned in this chapter.

1. List and describe the two principles of the garden path model. How do they differ?

2. You are parsing a sentence into a tree structure in Psycholinguistics class one day. Your friend beside you gets a different answer than you do. Is it possible that you are both right?

a. How?

3. What type of sentence processing most likely occurs when a sentence is being parsed? Why?

4. The steps in the Garden Path model are a metaphor for what language component?

5. If a computer were parsing language, which of the four factors of parsing do you think it would have the most trouble automating or handling as well as a human? Why?