GESTURE

Introduction

In the words of Edward Sapir [1], gestures are "an elaborate and secret code that is written nowhere, known to none, and understood by all." It is remarkable that humans possess such a complex communication system running parallel to spoken language, yet take it so much for granted.

The study of speech production must not be limited to verbal output; speech as a whole includes lexical, emotional, semantic, phonological, syntactic, and motoric/gestural aspects. [2] This nonverbal, gestural communication is largely unconscious, but it is an important part of language production.

Are gesture and language really so interconnected? The evidence strongly points toward "yes". Although we may not notice our own movements, gesture appears to serve many functions, including clearer and more efficient communication of meaning, easier speech production, and support for language learning. From a young age, gesture is integral to the formation and production of language. The relationship between gesture and spoken language therefore appears to be more reciprocal and significant than one might intuitively think.

Types of Gestures

| Type | Description | Example |
|---|---|---|
| Iconic | Hand movements whose physical form and manner of motion depict the meaning expressed in the co-occurring speech. | In this photo, the man describes a camera lens verbally while simultaneously showing the size and shape of the lens with his hand. |
| Metaphoric | True to their name, metaphoric gestures are hand symbols that represent abstract ideas, which cannot be depicted directly. | A fist moved upwards and twisted to the left may stand for the idea of freedom. |
| Beat | Unlike iconic gestures, beats emphasize the spoken discourse itself and its structure rather than its content, conveying little or no additional information. | Up-and-down or back-and-forth hand movements that coincide with spoken clauses, breaks, or sentence ends. |
| Deictic | These are limited to pointing gestures that refer to a real or imagined target; they occur at the beginning of a conversation or of a new topic. | Someone may point off into the distance to refer to a place or time. |
| Cohesive | These gestures incorporate iconic forms as a way to physically connect different parts of a narrative. | To connect a previous statement with the current one, a speaker may flick the hand backwards from the lap towards the ear. |
| Emblem | Emblems have a direct meaning of their own and require no linguistic reinforcement; unlike the other types, they typically do not co-occur with speech. Emblems tend to be culture-specific, whereas co-speech gestures show interesting similarities across cultures. | In North America the thumbs-up gesture is usually interpreted as "good" or "ready", but in some cultures it is considered obscene. [3] |

Roles of Gesture in Speech Production

Information Communication

There are two main hypotheses about the role gesture plays in speech. The first is that gesture aids the communication of meaning, allowing the speaker to convey supplementary information beyond what is expressed in the linguistic output. [4] For example, when describing how large a structure is, a speaker may at the same time make hand motions that trace its shape. This is also known as the Mutually Adaptive Modalities (MAM) hypothesis. [5]

Research suggests that gesturing benefits communication. When speech is ambiguous, for instance because of background noise, incomprehensible requests, or unintelligible speech, listeners tend to rely on gesture for extra information. [6] Even when speech is fully comprehensible, studies have shown that participants understand instructions or narratives more accurately when speech is paired with gesture. [7]

Wu and Coulson (2007) [4] found that pictures congruent with both speech and gesture elicited smaller N300 and N400 event-related potentials (ERPs) than pictures congruent only with speech. In other words, the pictures were easier to process when gesture accompanied speech. Consistent with earlier research, this suggests that co-speech gestures improve the interpretation of spoken meaning.

In addition, co-speech gesture has been shown to facilitate the learning of new words in a foreign language [8], and young children may be able to understand the meaning of novel verbs when presented with gestural information alone, without speech. [9]

Gesture therefore appears not only to reduce ambiguity in speech and supply additional meaning, but also to aid the understanding of novel words in both familiar and foreign languages.

Lexical Access

The second hypothesis is that gesture facilitates lexical access; in other words, it aids working memory in retrieving words during speech. Discussions of gesture-assisted speech rely on the notion of a lexical affiliate: the word that a specific gesture is hypothesized to facilitate. [10]

The reasoning behind the lexical access hypothesis comes from studies that have found a link between cognitive tasks and motor movements. For instance, when told to imagine the shape of an inverted "S", people may furrow their brow, shift their gaze, and gesticulate. [9] Physical movement has also been found to occur during mental arithmetic [11], while viewing visual presentations [12], and during memory processes. [13] Building on this connection, further research has demonstrated that motor movements may support some cognitive tasks. [12]

It is well documented that people gesture while speaking [14], and because speech is itself a cognitive process, it is plausible that co-speech gesture has a motive beyond communicating information: it may also assist lexical access. Krauss (1998) [10] proposed that because human memory represents knowledge in several formats (e.g., visuo-spatial and motoric), and the same concept may be stored in more than one of them, gesticulation, which reflects spatio-dynamic features, may help activate the other formats and thereby assist lexical access.

Wesp, Hesse, Keutmann, and Wheaton (2001) [15] also suggested that gestures help to maintain spatial representations in working memory: participants asked to describe a painting from memory gestured almost twice as much as when describing a painting that was in front of them. This account was further supported by a study in which participants showed more muscular activity in their dominant arm when retrieving concrete and spatial words, that is, words that are more readily expressed in visual form. [16]

A study by Morrel-Samuels and Krauss (1992) [17] indicates that gestures occur very close in time to their lexical affiliates, and that a gesture's duration is closely related to how long it takes the speaker to retrieve the word. Similarly, more lexical gestures occur in spontaneous than in rehearsed speech, suggesting that gestures may be more frequent when speakers are hesitant and may be performed to facilitate word retrieval. [18] Continuing this line of research, Rauscher, Krauss, and Chen (1996) [19] showed that when gesture was constrained, participants spoke more slowly, but only when the content of their speech was spatial in nature; this supports the idea that gestures may maintain spatial representations and assist memory retrieval.

Pine, Bird, and Kirk (2007) [20] provided further support, finding that during a naming task children named more words correctly and resolved more tip-of-the-tongue states when allowed to gesture than when their movements were restricted. Altogether, this research supports the lexical access hypothesis and suggests that gestures are important for speech production as well as for communicating information.

These two hypotheses have the most support and evidence, but newer proposals include the ideas that gesture may reduce cognitive load during language processing [21] and aid conceptual processes before speech. [22]

It is possible that gesture fills all of these roles, contributing in an important, yet subtle way to spoken language.

Relationship of Gesture with Speech

Neuropsychological Evidence

Gesture most likely serves to assist lexical retrieval and/or to communicate information, but speech has other inherent connections with gesture as well. If one of the primary roles of gesture is lexical retrieval, it would follow that gesture use should be altered in people whose word access is impaired by neurological damage. Correspondingly, research on stroke patients has shown that those with anomic aphasia (deficits in word naming and retrieval with normal comprehension) produce more gestures during narration than control participants or patients with visuo-spatial deficits. [23] In addition, patients with Wernicke's aphasia (deficits in comprehension with satisfactory object naming and repetition) gesture less than those with anomia or conduction aphasia (deficits in object naming and repetition with relatively intact comprehension). [24]

Rose and Douglas (2001) [25] had patients with aphasia take part in a naming task; when instructed to point, visualize, or gesture, those with phonological access, storage, or encoding difficulties improved significantly with iconic gesture. This research suggests that an underlying neurological connection between speech and gesture may be at work, and that gestures may aid the recovery of patients with aphasia through their ability to promote lexical access.

Development

Gesture and spoken language appear to be closely interrelated in the brain, which helps explain the importance of gesture in adult speech; gesture also plays a central role in language development.

Gestures usually emerge before speech and are accordingly called prelinguistic gestures; most infants begin gesturing between 6 and 10 months of age, and gesture is a critical means of communication for them at this stage. Another important step in language development, learning to respond appropriately to parental gestures, occurs between 9 and 12 months of age. [26]

Zammit and Schafer (2010) [27] found that mothers' use of iconic gestures, controlling for maternal labeling and volubility, reliably predicted noun acquisition and comprehension. This suggests that joint attention between parents and infants facilitates language acquisition. Because gestures are synchronized with speech, they appear to help infants deduce the meaning of spoken words. [28]

Until verbal communication takes over, infants also use their own gestures to communicate with parents; as children grow older, gestures remain but take on a more subtle role. [29] Gestures used by infants and children are classified as deictic (pointing or other referencing gestures) or representational (gestures with both referential and semantic content, e.g., waving goodbye, or opening and closing the hand to refer to a fish). [30] Colletta, Pellenq, and Guidetti (2010) [31] found a strong correlation between age, narrative complexity, and gesture use. This supports the hypothesis that as we age, not only does our linguistic discourse grow more complex, but our co-speech gestures also develop, from purely deictic and representational gestures to more complex gesticulation in the context of narrative and social activity.

Other investigators have further explored the link between cognition, language, and gesture, including Fenson and Ramsey (1980) [32], who found that the first two-word combinations and the combination of two or more gestures into one functional movement emerge at about the same time, during Piaget's final stage of sensorimotor intelligence, around 20 months of age. Furthermore, significant correlations have been found between multi-word speech and multi-combinational gestures. This suggests that gesticulation develops in parallel with speech.

Gestures thus appear to play an essential role in language development through parental input and social interaction, and as speech develops and becomes more complex throughout life, so does gesture.

Gesture-Inclusive Models

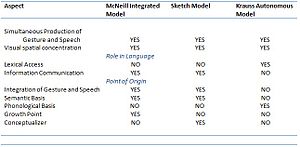

Comprehensive models of speech production attempt to account for co-speech gesture as well as speech. The three main models are:

The McNeill Integrated Model

In the McNeill integrated model, gestures are proposed to share a computational stage with speech, so that the two develop simultaneously as parts of the same underlying psychological process and respond concurrently to external agents. [2] The psychological source of a synchronized verbal-gestural unit is called a 'growth point'. [33] Additionally, because gestures and speech occur together, they most likely communicate the same or similar semantic information; this is especially true of iconic gestures. [2] The model focuses on iconic gestures because they are visuo-spatial in nature and the integrated model concentrates on spatial information. It predicts that more gestures would be used when communicating in a noisy room, and when spatial information is communicated over the phone (mostly iconic gestures in the latter case). [5][6]

McNeill (1985) [2] illustrated his model by comparing the speech and gestures of five people describing a narrative in which a character enters and climbs up inside a drainpipe. Not only was their spoken language similar, but so were their iconic gestures:

- “...he tries going up inside of the drainpipe...” while the hand rises and points upward.

- “...he tries climbing up in the drainspout of the building...” while the hand rises and starts to point up.

- “...and he goes up through the pipe this time...” while the hand rises and the fingers form a basket.

- “...this time he tries to go up inside the raingutter...” while the hand points and rises quickly.

- “...as he tries climbing up the rain barrel...” while the hand flexes back and then upwards.

As these examples demonstrate, the gestures occurred at the same point as the speech and described the same, spatial, semantic information. Furthermore, complex multi-combinational gestures tend to pause between semantic units at points that correspond with pauses in speech. This indicates that gestures mirror speech, which is especially striking since gestures, like language, are similar across speakers.

In this model, co-speech gestures contribute slight nuances of meaning; taken together with the concurrent spoken words, they express a single complex meaning derived from one cognitive growth point. [2] An implication of this model is that imagistic thinking, aided by gesture, plays a central role in speech and its conceptualization. [33]

The Sketch Model

The Sketch Model is a processing model for gesture and speech developed by Jan Peter de Ruiter (2006) [5]. It is based on the gesture-inclusive McNeill model of language, in that it incorporates the idea of simultaneous growth points (here referred to as a conceptualization stage) and the hypothesis of shared semantic information into a more specific representation.

This model attempts to account for McNeill's evidence for growth points by proposing a single cognitive 'conceptualizer' that synchronizes gesture with speech plans and subsequently leads to the overt production of both at once. [5]

The Krauss Autonomous Model

The Krauss model differs from the previous ones in that it treats gesture and speech as non-integrated: gestures may communicate some meaning, but their primary role is to facilitate lexical retrieval. [10] Thus this model focuses on the 'lexical access' role of gestures, while the McNeill and Sketch models focus on the 'information communication' role.

The Krauss and McNeill models are similar in that both assume that gestures occur very close to their lexical affiliates and may share a common origin at some point; both also emphasize that gestures primarily reflect spatio-dynamic, or visuo-spatial, features of speech. [10][2] Unlike the McNeill model, however, the Krauss model assumes that there is no shared semantic conceptualization stage. This assumption rests on evidence from tip-of-the-tongue (TOT) tasks such as that of Frick-Horbury and Guttentag (1998) [34], who restricted participants' ability to gesture during TOT tasks and found that preventing gesticulation interfered with word retrieval. This finding has been further supported by others, such as Pine, Bird, and Kirk (2007). [20] Because the retrieval failures in these TOT tasks were mostly phonological, it was inferred not only that gesture and speech do not share a conceptualization stage, but also that gestures reflect phonological more than semantic encoding.

It was concluded that, rather than being related to speech at a semantic level during a conceptualization stage, gesture more likely influences speech by aiding word retrieval in a spatio-dynamic fashion during phonological encoding. [10] In this view, speech and gesture diverge from the same point in working memory, run in parallel, and meet again at the point of overt production. Some gestures (such as emblems) may be intended to communicate information, but most are produced solely to aid lexical retrieval.

Conclusion

Research into language must include gesticulation in its scope. The more closely one studies the role of gesture in speech, the more apparent its importance becomes: gesture helps children develop language, aids the communication of information, facilitates lexical access, and possibly much more. In this way, gesture acts in a subtle yet complex manner to reinforce human communication and bring us closer together.

Learning Exercises

Further Exercises

1) For this exercise you will need a pencil, paper, and the help of a friend. Describe the following video to them with your hands held behind your back, and see how long it takes them to understand what you mean and replicate it on paper. Had you been able to gesture, how long do you suppose it would have taken them to understand and draw it? Which role of gesture do you suppose this provides evidence for?

Have your observations supported this? Why do you think they have or have not?

Which linguistic model incorporating gesture could use this as evidence for its hypothesis, and why?

Design a hypothetical therapy to help improve the speech deficits of people with anomia. Which role of co-speech gesture does your therapy focus on? Why do you think it would work and what evidence is there that would support your therapy?

References

1. Sapir, E. (1949). The unconscious patterning of behavior in society. In D. Mandelbaum (Ed.), Selected writings of Edward Sapir in language, culture and personality (pp. 544-559). Berkeley: University of California Press.

2. McNeill, D. (1985). So you think gestures are nonverbal? Psychological Review, 92(3), 350-371. doi:10.1037/0033-295X.92.3.350

3. Koerner, B. I. (2003, March 28). What does a "thumbs up" mean in Iraq? Slate.

4. Wu, Y., & Coulson, S. (2007). How iconic gestures enhance communication: An ERP study. Brain and Language, 101(3), 234-245. doi:10.1016/j.bandl.2006.12.003

5. de Ruiter, J. P. (2006). Can gesticulation help aphasic people speak, or rather, communicate? Advances in Speech Language Pathology, 8(2), 124-127.

6. Thompson, L. A., & Massaro, D. W. (1986). Evaluation and integration of speech and pointing gestures during referential understanding. Journal of Experimental Child Psychology, 42(1), 144-168.

7. Singer, M. A., & Goldin-Meadow, S. (2005). Children learn when their teacher's gestures and speech differ. Psychological Science, 16(2), 85-89.

8. Kelly, S. D., McDevitt, T., & Esch, M. (2009). Brief training with co-speech gesture lends a hand to word learning in a foreign language. Language and Cognitive Processes, 24(2), 313-334. doi:10.1080/01690960802365567

9. Morsella, E., & Krauss, R. M. (2004). The role of gestures in spatial working memory and speech. The American Journal of Psychology, 117(3), 411-424. doi:10.2307/4149008

10. Krauss, R. M. (1998). Why do we gesture when we speak? Current Directions in Psychological Science, 7(2), 54-60. doi:10.1111/1467-8721.ep13175642

11. Graham, T. A. (1999). The role of gesture in children's learning to count. Journal of Experimental Child Psychology, 74, 333-355.

12. Spivey, M., & Geng, J. (2001). Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychological Research, 65, 235-241.

13. Glenberg, A. M., Schroeder, J. L., & Robertson, D. A. (1998). Averting the gaze disengages the environment and facilitates remembering. Memory & Cognition, 26, 651-658.

14. Krauss, R. M., Chen, Y., & Chawla, P. (1996). Nonverbal behavior and nonverbal communication: What do conversational hand gestures tell us? Advances in Experimental Social Psychology, 28, 389-450.

15. Wesp, R., Hesse, J., Keutmann, D., & Wheaton, K. (2001). Gestures maintain spatial imagery. American Journal of Psychology, 114, 591-600.

16. Morsella, E., & Krauss, R. M. (2005). Muscular activity in the arm during lexical retrieval: Implications for gesture-speech theories. Journal of Psycholinguistic Research, 34(4), 415-427. doi:10.1007/s10936-005-6141-9

17. Morrel-Samuels, P., & Krauss, R. M. (1992). Word familiarity predicts temporal asynchrony of hand gestures and speech. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18, 615-623.

18. Chawla, P., & Krauss, R. M. (1994). Gesture and speech in spontaneous and rehearsed narratives. Journal of Experimental Social Psychology, 30(6), 580-601. doi:10.1006/jesp.1994.1027

19. Rauscher, F. H., Krauss, R. M., & Chen, Y. (1996). Gesture, speech, and lexical access: The role of lexical movements in speech production. Psychological Science, 7, 226-231.

20. Pine, K. J., Bird, H., & Kirk, E. (2007). The effects of prohibiting gestures on children's lexical retrieval ability. Developmental Science, 10(6), 747-754. doi:10.1111/j.1467-7687.2007.00610.x

21. Goldin-Meadow, S., Nusbaum, H., Kelly, S. D., & Wagner, S. (2001). Explaining math: Gesture lightens the load. Psychological Science, 12, 516-522.

22. Alibali, M. W., Kita, S., & Young, A. J. (2000). Gesture and the process of speech production: We think, therefore we gesture. Language and Cognitive Processes, 15, 593-613.

23. Hadar, U., Burstein, A., Krauss, R. M., & Soroker, N. (1998). Ideational gestures and speech: A neurolinguistic investigation. Language and Cognitive Processes, 13, 59-76.

24. Hadar, U., Wenkert-Olenik, D., Krauss, R. M., & Soroker, N. (1998). Gesture and the processing of speech: Neuropsychological evidence. Brain and Language, 62, 107-126.

25. Rose, M., & Douglas, J. (2001). The differential facilitatory effects of gesture and visualisation processes on object naming in aphasia. Aphasiology, 15, 977-990.

26. Masur, E. F. (1983). Gestural development dual-directional signalling and the transition to words. Journal of Psycholinguistic Research, 12, 93-109.

27. Zammit, M., & Schafer, G. (2010). Maternal label and gesture use affects acquisition of specific object names. Journal of Child Language, 38(1), 201-221.

28. Zukow-Goldring, P. (1996). Sensitive caregiving fosters the comprehension of speech: When gestures speak louder than words. Early Development and Parenting, 5(4), 195-211.

29. Messinger, D. S., & Fogel, A. (1998). Give and take: The development of conventional infant gestures. Merrill-Palmer Quarterly, 44, 566-590.

30. Acredolo, L., & Goodwyn, S. (1985). Symbolic gesturing in language development: A case study. Human Development, 28, 40-49.

31. Colletta, J., Pellenq, C., & Guidetti, M. (2010). Age-related changes in co-speech gesture and narrative: Evidence from French children and adults. Speech Communication, 52(6), 565-576. doi:10.1016/j.specom.2010.02.009

32. Fenson, L., & Ramsey, D. (1980). Decentration and integration of the child's play in the second year. Child Development, 51, 171-178.

33. McNeill, D., Duncan, S. D., Cole, J., Gallagher, S., & Bertenthal, B. (2008). Growth points from the very beginning. Interaction Studies: Social Behaviour and Communication in Biological and Artificial Systems, 9(1), 117-132. doi:10.1075/is.9.1.09mcn

34. Frick-Horbury, D., & Guttentag, R. E. (1998). The effects of restricting hand gesture production on lexical retrieval and free recall. The American Journal of Psychology, 111(1), 43-63.